Introduction

AI’s influence across commerce is far larger than initially anticipated. AI is no longer seen merely as a force multiplier; it marks a new age of business in which some organizations are betting it could even replace certain workforces. Will that become the reality of work? Only time will tell, and probably soon.

What is certain is that AI is proving to be a worthy companion: making teams more efficient, automating repetitive tasks, managing data, systems, and processes, and even narrowing the skill gap between, say, a new security analyst and an experienced one, thereby reducing overheads and operational hiccups. Still, given the pace at which the technology is spreading, we have to ask, especially in cybersecurity: is AI adoption truly imminent, or is it a hype play?

Anyone looking to automate business processes knows they should be using AI in some capacity. However, the sheer volume of available information often leaves people overwhelmed about where to begin.

And when it comes to cybersecurity, it’s not just defenders who are eager to use AI to their advantage. Adversaries are hooked too, exploiting AI with impunity to create, automate, and test threats.

More on that later in this blog. But even on the defensive side, AI adoption is in a state of frenzy: fast, erratic, and often without adequate oversight. That’s not because AI itself is dangerous, but because the rules governing its use haven’t caught up with emerging use cases. So, with tactics evolving and AI entering the scene, do we discard the old defense playbook and adopt a new one? AI has become a catch-all term that promises transformation, but most teams are still figuring out what “smart” really means for their ways of working and for security in general.

And that confusion creates two extremes: organizations that jump in blind, and others that stand still because they don’t know where to start. In this blog, we address some of the most pressing issues and provide essential resources to help you get started and mature your AI integration in cybersecurity.

Let’s start with the basics.

What is Artificial Intelligence (AI)?

Artificial Intelligence (AI) refers to machines or systems that simulate human-like understanding, reasoning, and decision-making based on the data they are trained on. These systems learn complex tasks through supervised and unsupervised learning, deep learning, and reinforcement learning, enabling them to perform functions that typically require human intelligence, such as making context-aware decisions.

How are AI systems for cybersecurity developed?

AI systems are developed through an iterative, structured process involving training, validation, and testing.

- During training, large datasets (including network logs, security events, and traffic insights) are labeled and used to teach the model the difference between normal and anomalous activity. This enables the model to optimize its parameters and minimize errors. In cybersecurity, deriving clean data for systems to train on is often difficult, as real-life attacks are often ambiguous.

- Validation evaluates the trained model on a separate, held-out dataset to tune it, confirm that it performs well on unseen data, and guard against overfitting. Overfitting occurs when a model learns its training data too closely, including irrelevant details and noise, so it performs poorly on new data.

- In the testing phase, the trained model is evaluated on new, previously unseen data to assess how well it generalizes to real-world situations before it is deployed for inference.
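To make the three phases concrete, here is a minimal sketch using only Python’s standard library. It assumes a single synthetic feature (bytes sent per connection) produced by the hypothetical helper `make_dataset`, and it uses a deliberately simple z-score detector rather than any production model: training learns the baseline from normal samples, validation picks the alert threshold `k`, and testing reports F1 on data the model has never seen.

```python
import random
import statistics

def make_dataset(n, seed):
    """Hypothetical labelled events: (bytes_sent, label), where label 1 means anomalous."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        if rng.random() < 0.1:
            data.append((rng.gauss(5000, 800), 1))  # rare, unusually large transfers
        else:
            data.append((rng.gauss(1000, 200), 0))  # typical traffic
    return data

def split(data):
    """Disjoint splits: 60% training, 20% validation, 20% test."""
    n = len(data)
    a, b = n * 6 // 10, n * 8 // 10
    return data[:a], data[a:b], data[b:]

def fit(train_set):
    """Training: learn the baseline (mean and spread) of normal activity."""
    normal = [x for x, label in train_set if label == 0]
    return statistics.mean(normal), statistics.stdev(normal)

def predict(model, x, k):
    mu, sigma = model
    return 1 if abs(x - mu) / sigma > k else 0  # flag values more than k std devs away

def f1(model, data, k):
    tp = sum(1 for x, y in data if y == 1 and predict(model, x, k) == 1)
    fp = sum(1 for x, y in data if y == 0 and predict(model, x, k) == 1)
    fn = sum(1 for x, y in data if y == 1 and predict(model, x, k) == 0)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0

train_set, val_set, test_set = split(make_dataset(2000, seed=7))
model = fit(train_set)
# Validation: choose the threshold that works best on data the model did not train on.
best_k = max([2.0, 3.0, 4.0, 6.0, 10.0], key=lambda k: f1(model, val_set, k))
# Testing: a final, one-time estimate of how the tuned model generalizes.
test_f1 = f1(model, test_set, best_k)
print(f"chosen k={best_k}, test F1={test_f1:.2f}")
```

Tuning `k` on the validation split and reporting only the test-split score is what guards against the overfitting described above; reusing the test set to choose `k` would overstate real-world performance.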

This iterative AI lifecycle, from design through deployment and monitoring, evolves through continuous feedback and data refinement to deliver a more accurate, reliable system that keeps up with evolving threats.

Why is AI used in cybersecurity?

Organizations today are digital-first, so the need for secure and available systems grows in step. Whether it’s a bank fighting credential stuffing, account takeover, and fraud, or a telecommunications provider protecting its data from tampering and leaks, the scope of cybersecurity is constantly expanding. But traditional tools are struggling to keep up, both in spotting evolving threats and in managing them.

Artificial intelligence offers the leverage needed to fill that gap. Using automation and machine learning algorithms, businesses can considerably improve their detection, prevention, and response capabilities against conventional, novel, and AI-driven attacks.

AI is transforming cybersecurity because of its ability to process massive volumes of data, detect anomalies, and predict potential threats faster than traditional systems. In a landscape where cyber threats evolve daily, including zero-day vulnerabilities, phishing campaigns, and sophisticated fraud tactics, AI provides speed, scalability, and adaptability.

AI-driven security systems can anticipate emerging threats, detect abnormal behavior by learning from past attacks, and support human analysts in responding proactively rather than reactively.

However, AI isn’t just being employed by defenders. In the hands of adversaries, the very capability in our favor has turned against us. The asymmetry doesn’t help either; an attacker only has to succeed once, while defenders must be on point every time.

Also, while defenders must implement the technology ethically and within regulatory bounds, attackers don’t need governance, ethics, or risk frameworks; they just need results. That’s why AI-powered threats are outpacing AI-driven defense right now.

Unquestionably, AI is powerful, but it isn’t conscious; it doesn’t understand intent or ethics. Therefore, to truly understand and stop attacks and uncover their complete tracks, AI needs humans to interpret why something looks wrong, weigh trade-offs, and make accountable decisions. Defense today needs to be adaptive and data-literate, not just automated.

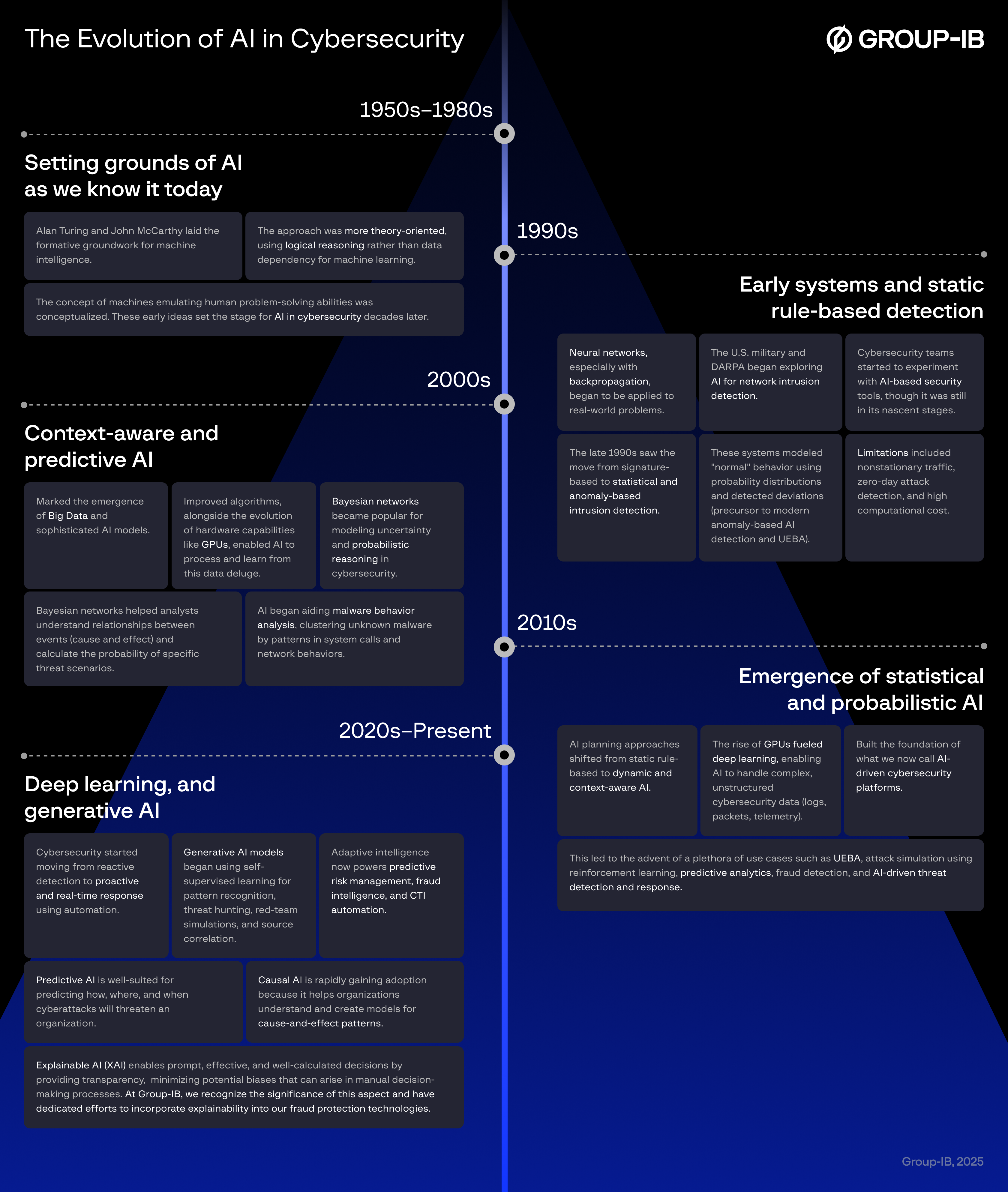

The Evolution of AI in Cybersecurity

Artificial intelligence had its big bang moment in the ’90s, revolutionizing almost every sector.

So, how could cybersecurity escape its influence? For a long time, artificial intelligence in cybersecurity was a curiosity, something examined in boutique research labs. Today, those relatively crude and limited-function precursors have morphed into a powerful force reshaping knowledge, content, and decision-making in every industry.

What are the current applications of AI in cybersecurity?

Malware classification and analysis

For a long time, cybersecurity has relied on signature-based and heuristic detection systems to spot known patterns, whether in malware code, network traffic, or log activity. However, with machine learning in cybersecurity, teams can now detect new malware strains that are not linked to any known signature.

By analyzing user behavior and hand-crafted features, such as unusual word choices, suspicious domain names, or atypical activity timing, ML models recognize subtle patterns that indicate zero-day malware, forming the basis of modern AI threat detection. They can also detect malicious links by studying token patterns and odd domain combinations, uncovering threats that would otherwise slip past rule-based or decision-tree-based systems.
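A minimal sketch of the kind of hand-crafted URL features such a model might consume, using Python’s standard library. The token list, the feature names, and the function `url_features` are illustrative assumptions, not a production feature set; in practice these values would be fed to a trained classifier rather than read directly.

```python
import math
import re
from collections import Counter
from urllib.parse import urlsplit

# Illustrative lure words often seen in phishing URLs (an assumption, not a vetted list).
SUSPICIOUS_TOKENS = {"login", "secure", "verify", "account", "update", "signin"}

def shannon_entropy(s):
    """Character entropy in bits; random-looking (e.g. algorithmically generated) hosts score higher."""
    counts = Counter(s)
    n = len(s)
    return -sum(c / n * math.log2(c / n) for c in counts.values()) if n else 0.0

def url_features(url):
    host = (urlsplit(url).hostname or "").lower()
    tokens = re.split(r"[.\-/_?=&]+", url.lower())
    return {
        "length": len(url),
        "host_digits": sum(ch.isdigit() for ch in host),
        "hyphens": host.count("-"),
        "subdomain_depth": max(host.count(".") - 1, 0),
        "suspicious_tokens": sum(t in SUSPICIOUS_TOKENS for t in tokens),
        "ip_host": bool(re.fullmatch(r"\d{1,3}(\.\d{1,3}){3}", host)),
        "host_entropy": round(shannon_entropy(host), 2),
    }

features = url_features("http://secure-login.example.com/verify?id=1")
print(features)
```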

Vulnerability detection through AI-fuzzing

Fuzzing is an automated software testing technique that bombards “fuzz targets” with unexpected or invalid data to find exploitable flaws or vulnerabilities. AI-driven fuzzing applies machine learning to this process, learning which types of inputs are most likely to trigger vulnerabilities in hardware, software, and applications.
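The core loop of a mutation fuzzer fits in a few lines. The sketch below assumes a hypothetical target, `parse_record`, with a planted bug (it trusts a length prefix), and it uses plain random byte mutations. An AI-driven fuzzer would keep the same loop but replace `mutate` with a learned strategy that favors inputs likely to reach new code paths; that part is not shown.

```python
import random

def parse_record(data: bytes) -> bytes:
    """Hypothetical fuzz target: [length][payload...][checksum], with a planted bug."""
    if not data:
        raise ValueError("empty input")  # expected, handled rejection
    length = data[0]
    payload = data[1:1 + length]
    checksum = data[1 + length]  # BUG: IndexError when the length prefix overstates the data
    if sum(payload) % 256 != checksum:
        raise ValueError("bad checksum")  # expected, handled rejection
    return payload

def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Apply 1-3 random byte flips, insertions, or deletions to a valid seed input."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, 3)):
        choice = rng.random()
        if choice < 0.5 and data:
            data[rng.randrange(len(data))] = rng.randrange(256)  # overwrite a byte
        elif choice < 0.8:
            data.insert(rng.randrange(len(data) + 1), rng.randrange(256))  # insert a byte
        elif data:
            del data[rng.randrange(len(data))]  # delete a byte
    return bytes(data)

def fuzz(target, seed: bytes, iterations: int = 5000, rng_seed: int = 0):
    rng = random.Random(rng_seed)
    crashes = []
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            target(candidate)
        except ValueError:
            pass  # graceful rejection of malformed input is not a vulnerability
        except Exception as exc:  # anything else is an unexpected crash worth triaging
            crashes.append((candidate, type(exc).__name__))
    return crashes

valid_seed = bytes([3, 1, 2, 3, 6])  # length=3, payload=1,2,3, checksum=6
crashes = fuzz(parse_record, valid_seed)
print(f"{len(crashes)} crashing inputs, e.g. {crashes[0] if crashes else None}")
```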

Threat detection and response

Malicious network activity or compromised assets must be identified and addressed before an infection escalates into a full-blown attack. However, modern, expanding infrastructures produce vast numbers of signals daily, far too many to analyze manually in real time. Automation is crucial for identifying and acting on critical threats in time: AI automates threat correlation and prioritization, helping teams identify critical events and see the complete context of a threat.

Network analysis

Monitoring systems generate enormous volumes of data: network traffic, user sessions, activity times, bandwidth use, protocol activity, and more. It’s too much for analysts to analyze and manage in real time. AI models integrated into Network Intrusion Detection Systems (NIDS) analyze network traffic continuously, identify abnormal patterns, and determine whether deviations indicate potential attacks (such as lateral movement, data exfiltration, or command-and-control (C2) communication).

However, instead of flagging every anomaly (which can generate a lot of false positives), AI in cybersecurity correlates signals across multiple sources, such as user behavior, endpoint, geography, and time of use, and the relationship between assets, users, and data flows, shifting from volume-based alerts to AI-driven contextual alerts.
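As a rough illustration of moving from volume-based to contextual alerts, the sketch below raises an alert only when several independent signal sources fire for the same entity within a time window. The source names, the 15-minute window, and the three-source threshold are assumptions chosen for readability, not recommended settings.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Signal:
    entity: str  # user or host the signal relates to
    source: str  # illustrative labels: "nids", "endpoint", "geo", "time_of_use"
    ts: int      # seconds since epoch

def correlate(signals, window_s=900, min_sources=3):
    """Emit one contextual alert per entity when >= min_sources distinct sources fire within window_s."""
    by_entity = defaultdict(list)
    for s in signals:
        by_entity[s.entity].append(s)
    alerts = []
    for entity, items in by_entity.items():
        items.sort(key=lambda s: s.ts)
        start = 0
        for end in range(len(items)):
            # Slide the window start forward until it spans at most window_s seconds.
            while items[end].ts - items[start].ts > window_s:
                start += 1
            sources = {s.source for s in items[start:end + 1]}
            if len(sources) >= min_sources:
                alerts.append((entity, sorted(sources), items[start].ts, items[end].ts))
                break  # one alert per entity is enough for this sketch
    return alerts

signals = [
    Signal("alice", "nids", 0), Signal("alice", "endpoint", 300), Signal("alice", "geo", 600),
    Signal("bob", "nids", 0), Signal("bob", "nids", 100), Signal("bob", "endpoint", 2000),
]
alerts = correlate(signals)
print(alerts)
```

Bob’s signals are individually anomalous but never corroborate each other inside one window, so they stay below the alerting bar instead of adding to analyst noise.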

Threat correlation and contextualization

Indicators from multiple security tools, underground forums, commercial sources, the dark web, etc., may seem unactionable in isolation. Only when these indicators are tied to threat actor profiles, TTPs, attribution data, the MITRE ATT&CK framework, and the Fraud Matrix can we see the hidden intent, motivations, and scope of attack campaigns, along with their malicious connections (IPs, hosting artifacts, phishing campaigns, emails, etc.), insights that would take weeks to uncover manually.

Correlation gives security teams the foundation to identify, prioritize, and respond to real threats, not just alerts. It not only enhances an organization’s situational awareness but also provides tactical leverage by revealing attacks, attack groups, and underlying connections or TTP overlaps beyond surface-level indicators.

Contextualizing the data based on your business’s industry and region tailors your defenses to stay ahead of an evolving threat landscape.

Threat scoring and prioritization

When AI models are trained on network-behavior data that represents the normal baseline, they don’t treat every deviation as a potential attack. Instead, they assign a risk score to each change in network activity based on its assessed severity and context.

For example, a privilege escalation by an admin during scheduled maintenance is normal (low risk), but a privilege escalation by a regular user late at night is typically high risk. Even when an activity doesn’t look high-risk on the surface, context such as past sessions or follow-up activity after access is what truly matters.

Each of these measurable risk signals is interpreted by a scoring algorithm (typically on a 0–100 scale or as low/medium/high risk) to determine how likely an activity is to enable an attack. This risk scoring is dynamic: it continually adapts and improves based on feedback from security teams, prediction accuracy, new threat intelligence, and other factors.
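A minimal sketch of context-aware scoring on a 0–100 scale, built around the privilege-escalation example above. Every base severity, context weight, and band boundary here is a hypothetical value, and the feedback rule is a simple multiplicative nudge rather than how any particular product learns from analysts.

```python
from dataclasses import dataclass

@dataclass
class Event:
    action: str
    role: str                        # "admin" or "user"
    hour: int                        # local hour, 0-23
    in_change_window: bool           # inside a scheduled maintenance window?
    followup_sensitive_access: bool  # touched sensitive data right afterwards?

BASE_SEVERITY = {"privilege_escalation": 40, "login": 5}  # hypothetical values

# Hypothetical context weights; module-level so analyst feedback can adjust them.
weights = {"non_admin": 25, "off_hours": 15, "no_change_window": 10,
           "followup": 20, "admin_in_window": -30}

def risk_score(e: Event) -> int:
    score = BASE_SEVERITY.get(e.action, 10)
    if e.role != "admin":
        score += weights["non_admin"]
    if e.hour < 7 or e.hour >= 22:
        score += weights["off_hours"]
    if not e.in_change_window:
        score += weights["no_change_window"]
    if e.followup_sensitive_access:
        score += weights["followup"]
    if e.role == "admin" and e.in_change_window:
        score += weights["admin_in_window"]  # expected, scheduled admin work
    return max(0, min(100, round(score)))  # clamp to the 0-100 scale

def band(score: int) -> str:
    return "high" if score >= 70 else "medium" if score >= 40 else "low"

def apply_feedback(factor: str, false_positive: bool, rate: float = 0.1) -> None:
    """Shrink a context weight after a confirmed false positive; grow it after a confirmed hit."""
    weights[factor] = round(weights[factor] * ((1 - rate) if false_positive else (1 + rate)), 2)

admin_event = Event("privilege_escalation", "admin", 14, True, False)
user_event = Event("privilege_escalation", "user", 23, False, True)
print(risk_score(admin_event), band(risk_score(admin_event)))
print(risk_score(user_event), band(risk_score(user_event)))
```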

Simulating and learning from attacks

Through AI-driven attack modeling and simulation, security teams can identify previously unseen threats, predict potential attack vectors, pinpoint vulnerabilities, and understand real-world attacker behavior to improve preparedness. This is instrumental in mapping the kill chain, building defenses, and supporting offensive security engagements such as red teaming.

Additionally, reinforcement learning helps develop adaptive AI systems that learn from simulated attacks and refine their defenses over time. Teams can also use GenAI to understand threat actors and TTPs, and to get answers to critical questions such as “Where am I most vulnerable?” through natural language queries.

Threat feed enrichment

AI-based threat intelligence can not only identify and respond to baseline threat indicators, such as raw Indicators of Compromise (IOCs), but also add context to those indicators to uncover additional intelligence.

For example, a phishing URL (http://secure-login[.]example[.]com) might be detected by an email protection solution or retrieved from a threat intelligence feed. Automation can then help enrich that URL with data from sources like Passive DNS, WHOIS records, sandbox analysis, and reputation services.

This helps the team take additional measures beyond simply blocking the URL, such as initiating high-confidence response actions, sharing reports, and strengthening defenses proactively.
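The enrichment step can be sketched as a small pipeline. The lookup tables below are made-up stand-ins for Passive DNS, WHOIS, and reputation services (a real pipeline would call each provider’s own API), the IP comes from a documentation range, and the confidence rule is an assumption for illustration.

```python
from urllib.parse import urlsplit

def refang(ioc: str) -> str:
    """Turn a defanged indicator such as secure-login[.]example[.]com back into a parseable form."""
    return ioc.replace("[.]", ".").replace("hxxp", "http")

# Hypothetical, hard-coded stand-ins for external enrichment sources.
PASSIVE_DNS = {"secure-login.example.com": ["203.0.113.10"]}
WHOIS_AGE_DAYS = {"example.com": 3}
REPUTATION = {"203.0.113.10": "malicious"}

def registered_domain(host: str) -> str:
    # Naive: keep the last two labels. Real code should consult the Public Suffix List.
    return ".".join(host.split(".")[-2:])

def enrich(raw_url: str) -> dict:
    host = urlsplit(refang(raw_url)).hostname or ""
    ips = PASSIVE_DNS.get(host, [])
    age = WHOIS_AGE_DAYS.get(registered_domain(host))
    bad_ips = [ip for ip in ips if REPUTATION.get(ip) == "malicious"]
    # Assumed rule: known-bad hosting plus a freshly registered domain -> high confidence.
    confidence = "high" if bad_ips and age is not None and age < 30 else "low"
    return {"host": host, "resolved_ips": ips, "domain_age_days": age,
            "malicious_ips": bad_ips, "confidence": confidence}

report = enrich("http://secure-login[.]example[.]com")
print(report)
```

A high-confidence result like this is what justifies the follow-up actions described above, such as automated response or report sharing, rather than a simple block.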

UEBA (User and Entity Behavior Analytics)

UEBA is a key part of AI-driven cyber defense that uses machine learning and statistical analysis to detect network attacks in real time.

UEBA tracks both users and entities, building profiles of their behavior over time, and watches for signs that indicate potential threats. Its strength lies in correlating anomalies across sources in real time and converting them into a single incident chain.

It combines both signature-based detection (pattern recognition for known attacks) and anomaly-based detection, which identifies outliers in networks, systems, applications, end users, and devices. The advantage of this method is its ability to discover new threats without relying on predefined signatures.
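The “entity” part of UEBA means each user, host, or service account is compared with its own history rather than with a global norm. A minimal sketch, assuming one numeric behavior (daily megabytes downloaded), a hypothetical `BehaviorBaseline` class, and an illustrative z-score threshold of 3:

```python
import statistics
from collections import defaultdict

class BehaviorBaseline:
    """Per-entity baseline for one numeric behavior, e.g. MB downloaded per day."""

    def __init__(self, min_history=5, z_threshold=3.0):
        self.history = defaultdict(list)
        self.min_history = min_history
        self.z_threshold = z_threshold

    def observe(self, entity, value):
        self.history[entity].append(value)

    def is_anomalous(self, entity, value):
        past = self.history[entity]
        if len(past) < self.min_history:
            return False  # not enough history to judge this entity yet
        mu = statistics.mean(past)
        sigma = statistics.stdev(past) or 1e-9  # avoid division by zero on flat histories
        return abs(value - mu) / sigma > self.z_threshold

ueba = BehaviorBaseline()
for mb in [100, 110, 105, 120, 115]:
    ueba.observe("alice", mb)        # an employee who normally downloads ~110 MB/day
for mb in [5000, 5100, 4900, 5050, 4950]:
    ueba.observe("backup-svc", mb)   # a service account that routinely moves ~5 GB/day
print(ueba.is_anomalous("alice", 5000), ueba.is_anomalous("backup-svc", 5000))
```

The same 5,000 MB transfer is routine for the backup service account but a strong outlier for a user who normally downloads about 110 MB, which a single organization-wide threshold would miss.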

Emerging capability: Predictive Analytics

Predictive analytics in cybersecurity refers to the use of machine learning (ML), artificial intelligence (AI), user and entity behavior analytics (UEBA), and continually refined statistical algorithms to identify patterns indicating potential attacks targeting a region, business, or industry.

It hinges on data collection and mining: combining internal telemetry (logs, system, and application data) with external telemetry (threat intelligence, dark web sources, and open data). The collected data is then analyzed to uncover historical patterns and anticipate future threats.

While predictive technology alone holds promise, without AI, statistical and rule-based models remain limited in scale and depth. It is the integration with AI that enables pattern recognition at scale, dynamic risk prioritization, continuously updated contextual insights, and faster time-to-response.

Graph analysis

Automated analysis of very large graphs supports predictive analytics, helping organizations identify correlations and unusual patterns in the network, enrich indicators, search for attacker backends, and uncover hidden connections in attack campaigns.
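Hidden connections often surface as shared infrastructure. A minimal sketch, assuming indicators and their relationships (a domain resolves to an IP, a domain was registered with an email address) have already been extracted into edge pairs. The indicator values are fictitious, and a breadth-first search stands in for the far richer graph analytics used in practice.

```python
from collections import defaultdict, deque

def build_graph(edges):
    """Undirected adjacency sets: each indicator links to every indicator it shares an edge with."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph

def campaign_cluster(graph, seed):
    """Breadth-first search from one known-bad indicator to all infrastructure linked to it."""
    seen, queue = {seed}, deque([seed])
    while queue:
        node = queue.popleft()
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

edges = [
    ("domain:secure-login.example.com", "ip:203.0.113.10"),
    ("domain:account-verify.example.net", "ip:203.0.113.10"),             # shared hosting IP
    ("domain:account-verify.example.net", "email:registrant@example.org"),
    ("domain:promo.example.org", "email:registrant@example.org"),         # same registrant
    ("domain:unrelated.example", "ip:198.51.100.7"),
]
graph = build_graph(edges)
cluster = campaign_cluster(graph, "domain:secure-login.example.com")
print(sorted(cluster))
```

Starting from a single phishing domain, the search reaches `promo.example.org` two hops away, even though that domain shares no direct indicator with the original one.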

Fraud Prevention

AI and ML are integral to modern fraud prevention, especially in attack-prone industries such as financial services. They help organizations protect their digital, web, and mobile applications by spotting and thwarting attempted attacks through monitoring, user behavior analysis, biometric intelligence, and fraud intelligence. Working alongside existing prevention layers, such as session- and transaction-based anti-fraud systems, these technologies map emerging fraud scenarios with precision. From a fraud intelligence standpoint, Group-IB’s AI-driven Fraud Matrix identifies and analyzes fraud tactics and techniques, connecting the dots between incidents to anticipate the next move.

Threat Intelligence

Automation helps collect and correlate threat data to extract actionable insights. It lets teams concentrate on strategic intelligence (based on TTP-level and higher indicators) instead of manually reviewing and managing individual threats.

This builds geopolitical and situational awareness and supports threat hunting and pivoting, all of which inform corporate security decisions and improve knowledge and risk management. Automation also helps predefine response actions based on threat profiles to enable swift containment and mitigation.

Threat hunting automation

AI helps threat hunters find unknown threats more effectively. By automating hunting queries and IOC enrichment, AI speeds up searches across enterprise networks. It can apply YARA and Sigma rules at scale to identify malware samples, suspicious files, and attacker behaviors that might otherwise go unnoticed.

The outcomes of these automated hunts can then be enhanced with real-time cyber threat intelligence (CTI) to confirm whether an indicator or anomaly actually indicates a threat. AI also automates hunts based on new intelligence, continuously adjusting to evolving TTPs.
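Sigma rules are normally written in YAML and compiled by dedicated tooling. The sketch below hand-translates one illustrative rule into a Python dict and implements only a small subset of Sigma matching semantics: a single `selection`, fields combined with AND, listed values combined with OR, and case-insensitive `contains`, `startswith`, and `endswith` modifiers. The rule and the events are assumptions for illustration.

```python
# Illustrative rule, hand-translated from Sigma-style YAML into a Python dict.
RULE = {
    "title": "Suspicious encoded PowerShell (illustrative)",
    "detection": {
        "selection": {
            "Image|endswith": ["\\powershell.exe", "\\pwsh.exe"],
            "CommandLine|contains": ["-enc", "-encodedcommand"],
        }
    },
}

def field_matches(event, key, values):
    """One selection entry: the field must match ANY listed value (case-insensitive)."""
    field, _, modifier = key.partition("|")
    actual = str(event.get(field, "")).lower()
    values = values if isinstance(values, list) else [values]
    for v in (str(x).lower() for x in values):
        if modifier == "contains" and v in actual:
            return True
        if modifier == "startswith" and actual.startswith(v):
            return True
        if modifier == "endswith" and actual.endswith(v):
            return True
        if modifier == "" and actual == v:
            return True
    return False

def matches(rule, event):
    """ALL entries in the selection must match."""
    selection = rule["detection"]["selection"]
    return all(field_matches(event, k, v) for k, v in selection.items())

def hunt(rule, events):
    return [e for e in events if matches(rule, e)]

events = [
    {"Image": "C:\\Windows\\System32\\WindowsPowerShell\\v1.0\\powershell.exe",
     "CommandLine": "powershell.exe -NoP -enc SQBFAFgA"},
    {"Image": "C:\\Windows\\System32\\notepad.exe", "CommandLine": "notepad.exe report.txt"},
    {"Image": "C:\\Program Files\\PowerShell\\7\\pwsh.exe", "CommandLine": "pwsh.exe -File backup.ps1"},
]
hits = hunt(RULE, events)
print(hits)
```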

Dark web investigation

Dark web investigations go beyond scouring the untraceable, non-indexed parts of the web for information that could be exploited for crime. They require established techniques, tools, and methods to identify criminals on the dark web, including in underground markets and discussion forums, and to collect digital evidence that can be analyzed, processed, and shared with authorities and law enforcement to disrupt illicit operations. While manual analysis helps, AI can link an attacker’s accounts far more quickly and reliably.

Phishing detection

Phishing attacks consistently rank among the top threats to businesses worldwide and account for a large share of cyber incidents. This is driven by evolving social engineering, the use of AI to make attacks more effective and frequent, and the growing use of platforms beyond email that make it easier to reach and exploit users.

As phishing moves beyond email, defenses must follow, combining upgraded email protection with brand protection and even AI-powered text and image analysis to detect phishing content.

Attackers now use localization and cloaking techniques to disguise malicious links and make phishing more effective. To combat this, organizations can build scam intelligence and proactively stop phishing, impersonation attacks, and scams using AI-driven digital risk protection solutions. These detect threats to a specific brand in the digital space in real time and flag them for blocking. They can also issue proactive takedowns to stop new scams before they begin operating.
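One building block of brand protection is spotting lookalike domains. A minimal sketch, assuming a hypothetical brand `examplebank` whose official domain is `examplebank.com`; the homoglyph table and the edit-distance threshold of 2 are illustrative choices, not a vetted detection policy.

```python
# Illustrative digit/symbol-to-letter substitutions seen in lookalike domains.
HOMOGLYPHS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s", "@": "a"})

def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]

def normalize(label: str) -> str:
    """Undo common visual tricks: digit homoglyphs and 'rn' posing as 'm'."""
    return label.lower().translate(HOMOGLYPHS).replace("rn", "m")

def is_lookalike(domain: str, brand: str, official: str, max_distance: int = 2) -> bool:
    domain = domain.lower()
    if domain == official:
        return False  # the brand's own domain is not a threat
    label = domain.split(".")[0]
    candidates = [label] + label.split("-")  # also catch combos like brand-login
    target = normalize(brand)
    return any(levenshtein(normalize(c), target) <= max_distance for c in candidates)

for d in ["examp1ebank.com", "exarnplebank.com", "examplebank-login.net", "weather.com", "examplebank.com"]:
    print(d, is_lookalike(d, "examplebank", "examplebank.com"))
```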

Emerging capability: AI-patching for vulnerabilities

Security teams can use AI to identify vulnerabilities and automate the generation of security patches, which can then be tested for efficacy in a simulated or controlled environment. GenAI enables a shift from rule-based systems to contextual analysis, helping connect the hidden links that reveal the complete chain of threat activity.

LLMs are also being used to develop self-supervised threat-hunting AI that autonomously scans network logs and data to provide adaptive, dynamic threat responses.

Updating baseline indicators to capture evolving threats

Real-time indicators (IP addresses, compromised identities, IOCs, device fingerprints) enrich anti-fraud and other systems within detection workflows, which then help with threat validation and automated response.

Data loss prevention

AI tools can interpret complex contexts across different data types and help create processes, rules, and procedures that prevent sensitive or personal information from being exfiltrated. In each of these cases, the goal is to help organizations get ahead of attacks, stopping them before they execute malware, exploit endpoints, infiltrate networks, or move laterally throughout enterprises.
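A classic DLP building block is finding payment card numbers before they leave the organization. The sketch below pairs a candidate regex with the Luhn checksum to cut false positives. The pattern, the redaction token, and the function names are assumptions; AI-based DLP would layer contextual classification on top of deterministic checks like this one.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens, starting and ending on a digit.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")

def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right and check the total mod 10."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def redact_cards(text: str) -> tuple[str, int]:
    """Replace Luhn-valid card numbers with a placeholder; return the new text and the hit count."""
    hits = 0

    def _replace(match):
        nonlocal hits
        if luhn_valid(match.group()):
            hits += 1
            return "[REDACTED CARD]"
        return match.group()  # digit runs that fail Luhn are left untouched

    return CARD_CANDIDATE.sub(_replace, text), hits

clean_text, count = redact_cards("Card 4111 1111 1111 1111 and order 1234 5678 9012 3456.")
print(count, clean_text)
```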

AI in the wrong hands: How are adversaries leveraging AI to propagate crime?

The rapid advancement and wide availability of AI models fundamentally alter the cyber threat landscape by lowering the expertise, resources, and time attackers need.

The offensive potential is vast, ranging from automated, personalized social engineering to enhanced vulnerability discovery and exploitation and large-scale misinformation campaigns. Organizations cannot afford to delay adopting AI for defense, nor can they face the challenge alone.

Deepfakes and synthetic media

Threat actors are increasingly exploiting generative AI to weaponize identity itself, with damages from deepfake incidents reaching US$350m by Q2 2025, according to a report by Resemble.ai. What began with crude face-swaps and pre-recorded lip-syncs has rapidly advanced into live deepfakes and synthetic biometrics, enabling criminals to impersonate executives, customers, or family members in real-time.

These techniques also extend beyond social engineering scams: groups like GoldFactory have demonstrated how stolen facial recognition data can be repurposed into AI-generated likenesses to bypass Know Your Customer (KYC) checks, open fraudulent accounts, or secure unauthorized loans.

Learn more about escalating deepfake fraud from Group-IB’s Cyber Fraud Analyst, Yuan Huang, who shares insights in a recent interview.

AI-assisted phishing and vishing: hyper-scaling fraud

AI is no longer just a text generator for phishing emails; it is actively embedded into fraud infrastructure. AI-assisted scam call centers are a prime example. Threat actors are deploying synthetic voices to answer initial queries, while LLM-driven systems coach human operators in real time with persuasive responses.

This hybrid human-AI approach doesn’t fully replace scammers but makes them far more efficient, scalable, and consistent. The result is phishing and vishing campaigns that are harder to detect, more personalized, and significantly faster to execute.

Want to see a deepfake vishing attack in action? Check out this interesting experiment conducted by our experts.

AI-assisted reconnaissance

Reconnaissance is an important part of defensive cybersecurity, where experts study attack phases and how attacks are initiated, but cybercriminals also use it to find security gaps to exploit. AI has automated and accelerated information gathering, so work that would otherwise take hours of manual effort can now be done in minutes.

AI can analyze vast amounts of data from various sources, scraping social media, public records, and network traffic to identify potential targets and vulnerabilities, then correlate it to make target selection and external attack surface scanning more efficient and effective. For example, AI has increased the speed and scale of credential-stuffing attacks, replacing manual password testing with automated attempts against thousands of accounts per minute using AI-driven scripts and tools.
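On the defensive side, credential stuffing has a recognizable shape: one source failing logins across many distinct accounts in a short time. A minimal sketch, assuming time-sorted login events and hypothetical thresholds (more than 20 distinct accounts within 60 seconds); the IP addresses come from documentation ranges.

```python
from collections import defaultdict, deque

def detect_credential_stuffing(events, window_s=60, max_accounts=20):
    """events: (ts, source_ip, username, success) tuples sorted by ts.
    Returns {ip: ts_first_flagged} for sources exceeding the distinct-account threshold."""
    recent = defaultdict(deque)  # ip -> failed attempts (ts, username) inside the window
    flagged = {}
    for ts, ip, user, success in events:
        if success:
            continue
        q = recent[ip]
        q.append((ts, user))
        while q and ts - q[0][0] > window_s:
            q.popleft()  # drop attempts older than the sliding window
        distinct = len({u for _, u in q})  # O(window) per event; fine for a sketch
        if distinct > max_accounts and ip not in flagged:
            flagged[ip] = ts
    return flagged

# An automated source trying 50 different accounts, one every half second...
events = [(i * 0.5, "198.51.100.23", f"user{i}", False) for i in range(50)]
# ...versus a real user who mistypes a password twice and then succeeds.
events += [(1.0, "192.0.2.5", "alice", False), (2.0, "192.0.2.5", "alice", False),
           (3.0, "192.0.2.5", "alice", True)]
events.sort(key=lambda e: e[0])
flagged = detect_credential_stuffing(events)
print(flagged)
```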

Social engineering at scale

Social engineering is where AI’s impact is most visible today. Generative AI now powers spam tools that scrape personal data, generate highly personalized lures, and adapt language to evade filters and detection. This evolution transforms phishing into a data-driven, scalable operation where personalization is automated and continuous.

Model extraction and information attacks

Adversaries can reverse-engineer models, for example by systematically querying them, to replicate their behavior or extract sensitive information about the model and its training data, leading to a confidentiality breach.

AI-enhanced malware and obfuscation

Threat actors are moving beyond simple chatbot misuse and are integrating AI directly into their toolkits. “DarkLLMs” (open-source large language models fine-tuned on malicious datasets) can now generate phishing kits, malware snippets, and obfuscated payloads without the guardrails of legitimate AI systems.

Sold through subscription models that mimic mainstream SaaS, these tools can be accessed for as little as US$30 per month, lowering skill barriers and professionalizing the underground ecosystem.

Criminals are also experimenting with retrieval-augmented generation (RAG) systems, which allow LLM-powered chatbots to query documentation and enrich their context for more effective malicious outputs. At the same time, attackers are leveraging AI for data exfiltration from AI services themselves, using prompt injection or exploiting insecure Model Context Protocol (MCP) tools to access sensitive information.

Jailbreaking AI models

Coaxing an AI model into overriding its own safety systems has emerged as a potent weapon in the toolkit of threat actors. A particularly concerning example comes from industry analysis of misuse attempts against Google’s Gemini, in which adversaries tried to bypass the model’s built-in guardrails using widely circulated prompts. Although these efforts largely failed, they underscore a key insight: threat actors are increasingly experimenting with guardrail evasion, even against major commercial systems.

This trend poses a dual threat. On one hand, jailbroken models can be repurposed to automatically generate phishing templates, malicious scripts, and disinformation campaigns at scale, lowering the bar for sophisticated cyber operations. On the other hand, because these exploitations take place within legitimate platforms, they are more difficult to detect and monitor than traditional malicious infrastructure. Jailbreaking thus represents a bridge between academic threat research and real-world exploitation, enabling bad actors to weaponize mainstream AI under the radar.

Multi-modal prompt injections

Adversaries are now abusing multi-modal LLMs (models that process or generate images, video, audio, text, and PDFs), including to spread malware. For example, an image may carry embedded text, invisible to the naked eye, that instructs an agent or chatbot to perform unauthorized actions.

These prompt injections are also used for subliminal advertising, where the hidden text influences a model’s behavior (for example, nudging the user toward a specific link).

- Data poisoning (training-time attacks): Adversaries corrupt training data or features (in some cases planting a model backdoor) so the model learns the “wrong” mapping, learns to respond to a specific trigger pattern, or later misclassifies malicious inputs as normal. Model integrity, reliability, and accuracy are all compromised.

- Data poisoning beyond training sets: Attackers now plant corrupted or manipulated data in public sources and open databases. Data poisoning was initially limited to training sets, but it now extends across LLM lifecycle phases, including pre-production, tooling, and APIs.
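A toy example shows why training-time poisoning works. The sketch assumes a one-feature classifier whose decision threshold is the midpoint between the mean benign and mean malicious scores, with made-up sample values; injecting high-scoring samples labelled as benign drags the threshold upward until a malicious probe slips through. Real models are far more complex, but the mechanism is similar in spirit.

```python
import statistics

def train_threshold(samples):
    """samples: (score, label), label 1 = malicious. Threshold = midpoint of the two class means."""
    benign = [x for x, y in samples if y == 0]
    malicious = [x for x, y in samples if y == 1]
    return (statistics.mean(benign) + statistics.mean(malicious)) / 2

def classify(threshold, x):
    return 1 if x >= threshold else 0

clean = [(x, 0) for x in (10, 12, 14, 16, 18)] + [(x, 1) for x in (80, 85, 90, 95, 100)]
# Poisoning: the attacker slips high-scoring samples into the data, labelled as benign.
poison = [(x, 0) for x in (70, 72, 74, 76, 78) * 4]

t_clean = train_threshold(clean)
t_poisoned = train_threshold(clean + poison)
probe = 65  # a malicious input the attacker wants the model to accept
print(t_clean, classify(t_clean, probe))        # caught by the clean model
print(t_poisoned, classify(t_poisoned, probe))  # waved through by the poisoned model
```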

Because widespread use of and dependence on LLMs have made them an avenue for exploitation, whether through data leaks or LLM-based secondary attacks, make sure you set standard practices and policies around their use within your organization.

For expert forecasts on how adversaries will leverage AI in 2026 and beyond, read our whitepaper – Weaponized AI.

What challenges and risks are associated with using AI in cybersecurity?

While AI will continue to play a critical role in advancing cyber protection, inadequate oversight, deployment, or management of AI models can threaten the integrity, confidentiality, and availability of your business systems and data.

Concerns about privacy infringement, algorithmic bias, AI models’ opaque decision-making processes, and flawed threat assessments are becoming increasingly central to understanding AI’s full potential.

Data and model integrity risks

The effectiveness of your AI system depends mainly on the quantity and quality of the data it is trained on. Feeding it incomplete or unbalanced data can cause it to ignore malicious signs and miss real threats, whereas mislabeled data can lead to wrong associations and false positives. Outdated data leaves your model unable to detect emerging threats.

Therefore, the need for diverse, representative data is imperative, and datasets must include different attack surfaces, actors, TTPs, and temporal diversity for systems to recognize abnormal behavior across different environments.

Inconsistent AI decision-making

Another challenge is inconsistent AI decision-making and the lack of transparency within AI systems. AI systems often make decisions autonomously, raising concerns about transparency and accountability when errors occur. When a model isn’t interpretable, meaning no one can verify why it labeled one activity as malicious and another as benign, the system’s reliability cannot be established.

This black-box problem (when the decision logic is hidden in millions of internal parameters) makes AI decision-making uncertain and requires expert analysis to understand the outputs. Over-reliance or automation bias can lead to a false sense of security and cause actual threats to be missed.

Ethical considerations around AI

As AI systems advance, they become increasingly complex. Therefore, organizations must factor in ethical, bias, and diversity considerations in data when building these models. The training data used to build these AI systems often raises concerns about privacy and user consent, and measures for protecting sensitive data are not always effective.

Example: AI-based network monitoring tools may collect more user data (behavioral data, identity attributes, and telemetry) than necessary, exposing private user habits. Another example of misuse is the prolific use of AI tools such as ChatGPT, which can indirectly support social engineering attacks, automated hacking, network payload generation, sophisticated malware development, and phishing campaigns.

Therefore, clear governance policies are crucial in addressing these challenges. They should be linked to cyber risk and board-level oversight frameworks to ensure transparency, output adequacy in AI models, and user protection.

Non-uniform AI regulations

Global AI regulations are currently a patchwork, which makes governance and ethical adoption challenging. For example, while the EU Artificial Intelligence Act sets out detailed frameworks for adoption, other regions may have only generic guidelines, and there is no global, uniform standard. Additionally, some rules apply during development (the AI SDLC), while others focus on post-deployment. Such differences make demonstrating compliance challenging.

Unclear, shifting, and ambiguous regulations create additional risks instead of diminishing them. They can also restrict certain “offensive” defensive AI functions (e.g., in threat hunting or model exploitation).

There are several open, globally applicable AI governance frameworks, such as the NIST AI Risk Management Framework (AI RMF) and ISO/IEC 23894:2023, which offer standardized guidance to address AI challenges. But they cannot provide complete operational assurance, which requires regions and industries to build their own compliance mechanisms.

Interdisciplinary combined front

Organizations should collaborate with peers across businesses and governments to ensure that threat- and incident-sharing mechanisms and international standards for AI safety and security evaluations take AI-related cyber risks into account. Strengthening ties between AI researchers, cybersecurity experts, legal scholars, and policymakers is essential to developing effective governance solutions.

Stakeholder involvement for responsible innovation

AI requires cross-functional understanding, which is difficult to achieve if stakeholders are misaligned on its usage, risks, and management. Because AI adoption affects not just security teams but entire business ecosystems, legal, cyber, compliance, technology, risk, human resources (HR), ethics, and the relevant business units need to be involved in assessing how and where AI is used within the organization. To ensure a safe, seamless, and controlled transition, teams also need upskilling to adopt and secure AI systems.

Building AI cyber hygiene

Strategic AI adoption requires businesses to re-evaluate existing controls and develop additional safeguards to reduce cyber risks specific to AI. Some of the foremost steps that businesses can take include:

- Threat and vulnerability management to remediate exploitable blind spots around AI technologies.

- Establishing access controls, including role-based access, for AI tools.

- Implementing data and system controls, such as network and database segmentation and data-loss prevention, to reduce the impact of an AI compromise.

- Ensuring the security of AI models and training data to avoid integrity issues, model poisoning, and unauthorized manipulation.

- Updating incident response tools and playbooks for AI systems (including detection, investigation, and recovery).

- Redrafting business continuity and operational resilience plans with AI-related cyber risks in mind.

- Ensuring AI-oriented awareness and training, including AI risks, misuse scenarios, possible ramifications, and preventative measures.
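To make the role-based access item more concrete, here is a minimal sketch of a deny-by-default permission check for AI tools. The role names, tool names, and the `ROLE_PERMISSIONS` mapping are hypothetical placeholders; in practice they would come from your identity provider or access-management system.

```python
from dataclasses import dataclass

# Hypothetical mapping of roles to permitted (tool, action) pairs for AI tools.
ROLE_PERMISSIONS: dict[str, set[tuple[str, str]]] = {
    "soc_analyst": {("triage_assistant", "query"), ("triage_assistant", "summarize")},
    "ml_engineer": {("model_registry", "read"), ("model_registry", "deploy")},
    "auditor": {("model_registry", "read"), ("triage_assistant", "read_logs")},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]


def is_allowed(user: User, tool: str, action: str) -> bool:
    """Deny by default: allow only if one of the user's roles grants (tool, action)."""
    return any(
        (tool, action) in ROLE_PERMISSIONS.get(role, set())
        for role in user.roles
    )


alice = User("alice", frozenset({"soc_analyst"}))
print(is_allowed(alice, "triage_assistant", "query"))  # True
print(is_allowed(alice, "model_registry", "deploy"))   # False
```

Because the check is deny-by-default, a newly introduced AI tool stays unreachable until someone explicitly grants a role access to it.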

Is your organization ready to adopt AI?

Assess your AI readiness with our self-guided survey; it offers helpful insight into how well positioned your organization is to use AI for effective cybersecurity. The survey evaluates AI’s relevance to your business, the integration of AI into your existing infrastructure, and your current maturity level and expertise, and it identifies the specific use cases where AI can be implemented. After completing it, you will receive a score across these areas that shows your readiness for AI adoption. The score informs your next steps, with recommended actions tailored to your organization’s needs that help you prioritize and plan your AI initiatives effectively.

The future of AI in cybersecurity: Where are we headed?

AI is set to become a permanent fixture in all things cyber. 40% of all cyberattacks are now AI-driven. To make sure AI works to our advantage and not against us, businesses need to ensure that its associated risks are understood across the organization and mitigated.

To help the industry innovate confidently and adopt and deploy AI securely, broader security standards and tools need to be developed for the different capabilities of AI models. The GenAI cybersecurity market alone is forecast to reach $35.5 billion by 2031, underscoring the rising investment in this area.

The need for better collaboration between the AI and cybersecurity communities is established — cross-functional involvement within businesses and interdisciplinary collaboration at regional and industry levels are key to building stronger AI defenses based on global standards and frameworks.

Since its inception, Group-IB has collaborated with INTERPOL, EUROPOL, AFRIPOL, the Cybercrime Atlas (an initiative hosted at the World Economic Forum), and other international alliances to share intelligence and support investigations. These partnerships also help shape regulatory developments — such as the European Union Artificial Intelligence Act — ensuring that policies evolve alongside adversary techniques.

Human expertise must also remain an essential layer of analysis and oversight. AI should enhance, not dictate, security functions and strategies; people are still needed to interpret results, make informed decisions, and ensure the ethical and responsible use of AI in cybersecurity.

A foundational component of a successful cybersecurity strategy is acknowledging that even the biggest and best-resourced organizations will need to work hand in glove with technology partners that have extensive experience, not just in using AI for cybersecurity but also in understanding how adversaries use AI to attack organizations like their own.

With cybersecurity service providers like Group-IB, organizations can empower themselves against the current and forthcoming challenges of next-gen cybercrime with unified, transparent, AI-driven cybersecurity.

Frequently Asked Questions (FAQs)

How is AI used in cybersecurity?

AI can collect, normalize, dissect, and analyze data at a speed and scale no human expert can match. Its key advantage is the agility and precision with which it derives actionable insights from diverse data sources, which can make or break the outcome of a cyber incident.

AI and ML applications strengthen the resilience of digital and physical infrastructure by delivering real-time (and predictive) insight into current and emerging threats, supporting accurate detection, prevention, and response. They do this by recognizing malicious patterns within networks, using threat feeds to update controls, validating potential threats against rules or baseline deviations, correlating signals, adding context to reduce false positives and improve accuracy, and uncovering the full scope of threat actor activity. Spotting and preventing unanticipated threats, in turn, improves attack surface management.
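Signal correlation is what turns isolated alerts into an incident worth investigating. The sketch below is a simplified, rule-based illustration rather than an ML model, assuming alerts arrive as (host, signal type, timestamp) records: it groups alerts per host and escalates a host when several distinct signal types occur within one sliding time window. The signal names, the 10-minute window, and the threshold of three are illustrative assumptions, not tuned values.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    host: str
    signal: str     # e.g. "port_scan", "new_admin_account" (hypothetical names)
    timestamp: int  # seconds since epoch


def correlate(alerts: list[Alert], window: int = 600, min_signals: int = 3) -> set[str]:
    """Return hosts where at least `min_signals` distinct signal types occur
    within some `window`-second span (illustrative thresholds)."""
    by_host: dict[str, list[Alert]] = defaultdict(list)
    for alert in alerts:
        by_host[alert.host].append(alert)

    escalated: set[str] = set()
    for host, host_alerts in by_host.items():
        host_alerts.sort(key=lambda a: a.timestamp)
        start = 0
        for end in range(len(host_alerts)):
            # Advance the left edge until the window spans at most `window` seconds.
            while host_alerts[end].timestamp - host_alerts[start].timestamp > window:
                start += 1
            distinct = {a.signal for a in host_alerts[start:end + 1]}
            if len(distinct) >= min_signals:
                escalated.add(host)
                break
    return escalated
```

Counting distinct signal types, rather than raw alert volume, keeps one noisy detector from escalating a host on its own; several independent indicators have to line up.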

What are the ethical considerations when implementing AI in cybersecurity?

AI models’ decisions must be transparent: black-box decisions raise concerns about accuracy and can hide model shortcomings such as overfitting or underfitting. Explainable AI (XAI) reveals the reasoning behind a decision by showing which features (e.g., IP address, time of access, or process behavior) led to a classification. This helps analysts validate threats, reduce misinterpretation, and keep the model in check.
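For a linear scoring model, one simple form of explanation is each feature’s contribution (weight × value) to the score. The sketch below assumes a logistic-regression-style detector with hypothetical, hand-set weights for the kinds of features mentioned above; dedicated XAI techniques such as SHAP or LIME extend the same idea of per-feature attribution to non-linear models.

```python
import math

# Hypothetical weights of a logistic-regression-style detector.
WEIGHTS = {
    "ip_reputation_risk": 2.1,       # 0..1 risk score from threat intelligence
    "off_hours_access": 1.4,         # 1 if access happened outside working hours
    "rare_process_spawned": 1.8,     # 1 if the process is rare on this host
    "failed_logins_last_hour": 0.3,  # raw count
}
BIAS = -3.0


def explain(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the malicious probability and per-feature contributions,
    ranked by absolute contribution (largest first)."""
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))  # logistic sigmoid
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


prob, ranked = explain({
    "ip_reputation_risk": 0.9,
    "off_hours_access": 1,
    "rare_process_spawned": 1,
    "failed_logins_last_hour": 2,
})
print(f"malicious probability: {prob:.2f}")
for name, value in ranked:
    print(f"  {name}: {value:+.2f}")
```

An analyst reviewing this alert can see that the IP reputation and the rare process drove the score, and can challenge the verdict if, say, the IP reputation feed is known to be stale.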

Privacy concerns often surround AI. Organizations must ensure that user consent, data protection, and risk oversight frameworks are enforced to prevent misuse and enable data minimization.

As mentioned earlier, AI’s effectiveness depends on the quantity and quality of the data it’s trained on. If the dataset is inadequate or outdated, models may develop a bias toward one attack type over another, leading to false positives or missed threats. Unreliable outputs, in turn, erode confidence in the system.

How can AI be used to prevent and mitigate phishing attacks?

AI analyzes anomalies in sender addresses, routing paths, and URLs to spot threats early and stop malicious emails before they get through. Text and image analysis extends detection to phishing beyond email. AI-driven digital risk protection systems detect and block phishing, impersonation, and scam campaigns in real time, initiating proactive takedowns to stop new scams before they go live.
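The sender and URL signals above can be turned into features for a classifier. The following is a minimal rule-based sketch using only the Python standard library; the specific checks, the 0.8 similarity cut-off, and the `PROTECTED_DOMAINS` entry are illustrative assumptions. Production systems typically feed features like these into trained models rather than relying on fixed rules.

```python
import ipaddress
from difflib import SequenceMatcher
from email.utils import parseaddr
from urllib.parse import urlparse

PROTECTED_DOMAINS = {"example-bank.com"}  # hypothetical brand domain


def domain_of(address: str) -> str:
    """Extract the lowercase domain from an address header value."""
    _, email_addr = parseaddr(address)
    return email_addr.rpartition("@")[2].lower()


def url_flags(url: str) -> list[str]:
    flags = []
    host = (urlparse(url).hostname or "").lower()
    try:
        ipaddress.ip_address(host)  # a raw IP instead of a domain name
        flags.append("raw_ip_host")
    except ValueError:
        pass
    if host.startswith("xn--") or ".xn--" in host:  # punycode (possible homoglyphs)
        flags.append("punycode_host")
    for brand in PROTECTED_DOMAINS:
        similar = SequenceMatcher(None, host, brand).ratio() > 0.8
        legit = host == brand or host.endswith("." + brand)
        if similar and not legit:
            flags.append(f"lookalike_of:{brand}")
    return flags


def email_flags(from_header: str, reply_to: str, urls: list[str]) -> list[str]:
    flags = []
    if reply_to and domain_of(reply_to) != domain_of(from_header):
        flags.append("reply_to_mismatch")  # replies diverted to another domain
    for url in urls:
        flags.extend(url_flags(url))
    return flags


print(email_flags(
    "Example Bank <alerts@example-bank.com>",
    "help@mailbox-xyz.net",
    ["http://examp1e-bank.com/login", "http://192.0.2.10/verify"],
))
```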

For phishing detection against your brand, consider enabling Group-IB’s Digital Risk Protection (DRP), which monitors and compares millions of online resources (including screenshots of brand sites, images, HTML files, redirect chains, traffic sources, and domain-related parameters) to detect violations such as brand abuse, phishing, fraud, and counterfeit sites.

How does AI transform cyberattacks, and what role does basic cyber hygiene play in defending against AI-driven attacks?

AI’s speed, computational power, and precision are available to attackers and defenders alike. Although advanced security tools are needed to defend against AI threats, the first line of defense is basic cyber hygiene: prevention is better than response. AI-driven attacks still rely on exploiting common weaknesses, whether in systems or in people, to enter and disrupt your network. Fundamentals such as MFA, zero-trust or least-privilege access, software patching and dependency management, and backup and recovery capabilities therefore remain essential. Instilling these practices, however, starts with building the right security culture. Here’s how our security experts advise you to approach it.

How can AI be used to identify and respond to zero-day threats?

Rule- and signature-based detection identifies threats that are already known. Zero-day threats exploit previously unknown vulnerabilities, or use tooling that has no signature yet. With no hash or rule match, the only way to catch them is to look for changes in network and system behavior, detect the anomalies that indicate a threat, and correlate those signals to understand the nature, extent, and severity of the attack. This helps teams contain the threat faster and choose the right first response, whether that is quarantining assets, rolling back changes, or escalating to expert intervention.

Once a zero-day threat is identified and analyzed, defenders (security vendors, CERTs, or internal blue teams) can then create a rule or signature for it.
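The contrast between signature matching and behavior-based detection, and the step of turning an analyzed zero-day into a new signature, can be sketched as follows. Assumptions: a SHA-256 hash stands in for a signature (real signatures, such as YARA rules, are usually more robust than exact hashes), the behavioral metric is a single number per observation (for example, outbound connections per minute from a process), and a z-score above 3 against historical values counts as anomalous. All names and thresholds are illustrative.

```python
import hashlib
import statistics

known_bad_hashes: set[str] = set()  # hypothetical signature store


def sha256_of(payload: bytes) -> str:
    return hashlib.sha256(payload).hexdigest()


def classify(payload: bytes, metric: float, baseline: list[float],
             z_threshold: float = 3.0) -> str:
    """Signature check first; fall back to behavioral anomaly detection.
    `baseline` needs at least two historical values."""
    if sha256_of(payload) in known_bad_hashes:
        return "known_threat"  # signature match
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        return "anomalous" if metric != mean else "normal"
    z = (metric - mean) / stdev
    # One-sided test: only unusually HIGH activity is flagged here.
    return "anomalous" if z > z_threshold else "normal"


def add_signature(payload: bytes) -> None:
    """After analysts confirm a zero-day sample, record its hash so future
    copies are caught by the signature check."""
    known_bad_hashes.add(sha256_of(payload))


baseline = [10, 12, 11, 9, 10, 11, 12, 10]  # historical connections per minute
sample = b"never-seen-before binary"
print(classify(sample, 80, baseline))  # anomalous: no signature, abnormal behavior
add_signature(sample)
print(classify(sample, 11, baseline))  # known_threat: now matched by signature
```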

How can organizations ensure the security and privacy of data used in AI models?

- Ensure data quality and representativeness to prevent bias.

- Apply strong access controls, segmentation, and encryption across AI pipelines.

- Regularly test models for poisoning, evasion, or manipulation attempts.

- Maintain continuous auditing, versioning, and model validation to ensure accuracy and reliability.
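One concrete way to support the auditing and versioning item is to record a cryptographic hash of every training file when a dataset version is approved, then re-verify before each training run; any unexpected modification, deletion, or addition (one possible sign of tampering or poisoning) shows up as a mismatch. This is a minimal sketch; the manifest format and directory layout are assumptions, and the manifest should be stored outside the data directory and under separate access control.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_sha256(path: Path) -> str:
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):  # stream large files
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its SHA-256 digest."""
    return {
        p.relative_to(data_dir).as_posix(): file_sha256(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify(data_dir: Path, manifest: dict[str, str]) -> dict[str, list[str]]:
    """Compare the current dataset against an approved manifest."""
    current = build_manifest(data_dir)
    return {
        "modified": [f for f in manifest if f in current and current[f] != manifest[f]],
        "missing": [f for f in manifest if f not in current],
        "added": [f for f in current if f not in manifest],
    }


def save_manifest(manifest: dict[str, str], path: Path) -> None:
    # Keep this file OUTSIDE data_dir, or it will be reported as "added".
    path.write_text(json.dumps(manifest, indent=2, sort_keys=True))


with tempfile.TemporaryDirectory() as tmp:
    data = Path(tmp) / "data"
    data.mkdir()
    (data / "train.csv").write_text("x,label\n1,0\n")
    approved = build_manifest(data)
    (data / "train.csv").write_text("x,label\n1,1\n")  # simulated label flip
    print(verify(data, approved))
```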

What are the implications of AI in cybersecurity for small businesses?

AI-driven threats target organizations of every size: they scale down as easily as they scale up.

For SMBs, the main cybersecurity challenge is resource constraints: limited budgets and in-house resources, and overreliance on third-party tools. Yet this is also where AI-powered defense can help.

SMBs should look for comprehensive threat coverage that requires minimal operational overhead. Group-IB’s Extended Detection and Response (XDR) leverages AI capabilities to secure corporate email, networks, applications, and working devices such as desktop computers, laptops, virtual machines, and servers.

When this is combined with retainer assistance from Group-IB’s global Digital Forensics and Incident Response (DFIR) experts, organizations can count on swift intervention in the event of an incident to contain and remediate threats, safeguarding their operations from potential harm.

How can AI be used to detect and prevent insider threats?

Insider threats need to be viewed from two angles. A malicious, or even seemingly benign, network user with access to your communication and sensitive data systems can cause massive damage through corporate espionage, data breaches, or data misuse. AI can detect and stop these attacks at an early stage: user and entity behavior analytics (UEBA) identifies unusual patterns and anomalies in network activity, and correlating signals across assets helps determine, based on motive and impact, whether activity is malicious or benign, which, together with appropriate guardrails, helps avoid false accusations. However, AI can also facilitate these attacks, sometimes unintentionally: LLMs used to dismiss alerts, synthetic insider personas, auto-generated misleading remediation reports, crafted commands injected into automation pipelines such as SOAR playbooks, or agentic misalignment caused by fraudulent or misinterpreted information.

So governance and oversight are needed.
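The UEBA approach described above can be sketched as a per-user behavioral profile: the resources a user normally touches and the hours they normally work. Activity that deviates from both scores higher and is routed to an analyst rather than acted on automatically, which reflects the point about avoiding false accusations. The profile fields, the one-point-per-deviation scoring, and the review threshold are illustrative assumptions; real UEBA products build far richer baselines.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    resources: set[str] = field(default_factory=set)
    hours: set[int] = field(default_factory=set)  # hours of day, 0..23


class UebaProfiler:
    def __init__(self) -> None:
        self.profiles: dict[str, Profile] = {}

    def learn(self, user: str, resource: str, hour: int) -> None:
        """Add an observed (resource, hour) pair to the user's baseline."""
        profile = self.profiles.setdefault(user, Profile())
        profile.resources.add(resource)
        profile.hours.add(hour)

    def score(self, user: str, resource: str, hour: int, sensitive: bool) -> int:
        """Illustrative risk score: one point per deviation from the baseline."""
        profile = self.profiles.get(user, Profile())  # unknown user: empty baseline
        risk = 0
        if resource not in profile.resources:
            risk += 1  # first-time access to this resource
        if hour not in profile.hours:
            risk += 1  # activity outside the user's usual hours
        if sensitive:
            risk += 1  # resource is classified as sensitive
        return risk


def triage(risk: int, review_threshold: int = 2) -> str:
    # Anomalies go to a human analyst, not straight to an accusation or block.
    return "analyst_review" if risk >= review_threshold else "log_only"


ueba = UebaProfiler()
for hour in range(9, 18):
    ueba.learn("bob", "crm", hour)
print(triage(ueba.score("bob", "crm", 10, sensitive=False)))        # log_only
print(triage(ueba.score("bob", "payroll_db", 2, sensitive=True)))   # analyst_review
```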

How do we advise businesses to proceed?

- Provide explainable AI (XAI) tools within SOC dashboards so analysts can see why a model flagged an anomaly.

- Train teams to understand AI-assisted workflows, where bias can occur, and how to handle incidents involving AI misuse.

- Use adversarial attack simulations and red-teaming exercises to understand how AI attacks may occur and how to mitigate them.

- Even for routine, repetitive tasks, require human validation (especially for identity, insider, and executive-impact events) so that reliance on AI outputs doesn’t turn into automation bias.

- Operationalize threat intelligence by mapping threat feeds to your environment, contextualizing them, and enabling automated enforcement for known threats (such as real-time blocking) alongside analyst-ready insights.

- Measure and tune through periodic checks, threat-hunting efforts, and red-team assessments to understand potential security gaps in AI.
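The human-validation recommendation can be enforced in code rather than left to convention. This is a minimal sketch, assuming each AI-proposed action carries a category, a model confidence value, and an XAI rationale for the analyst: actions in high-impact categories (identity, insider, and executive impact, as in the list above) or below a confidence floor are queued for analyst approval, while the rest are executed automatically and logged. The category names, action names, and the 0.9 floor are hypothetical.

```python
from dataclasses import dataclass

# Categories that always require a human decision, regardless of confidence.
HIGH_IMPACT = {"identity", "insider", "executive_impact"}


@dataclass(frozen=True)
class ProposedAction:
    action: str        # e.g. "disable_account", "block_ip" (hypothetical)
    category: str
    confidence: float  # model confidence, 0..1
    rationale: str     # XAI summary shown to the analyst


def route(p: ProposedAction, confidence_floor: float = 0.9) -> str:
    """Decide whether an AI-proposed action may run without human approval."""
    if p.category in HIGH_IMPACT or p.confidence < confidence_floor:
        return "queue_for_analyst"
    return "auto_execute_and_log"


print(route(ProposedAction("block_ip", "network", 0.97, "matches known C2 feed")))
print(route(ProposedAction("disable_account", "identity", 0.99, "impossible travel")))
```

Routing on category first means even a highly confident model cannot, on its own, lock out an executive or accuse an employee; periodic review of what was auto-executed then feeds the measure-and-tune cycle.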