Introduction

Artificial Intelligence (AI) had its big bang in the 90s, revolutionizing almost every sector. So, how could cybersecurity escape its influence? The rapid integration of AI into cybersecurity has paved the way for efficiency, agility, automation, and data analytics to take over operations.

However, amidst this transformation, AI, particularly Generative AI (GenAI), is ranked among the top 5 threats in the World Economic Forum’s Global Risks Report. Adding to the scare, Group-IB discovered over 100K compromised ChatGPT accounts on dark web marketplaces last year, elevating the risks of credential theft and secondary attacks.

Concerns surrounding Gen AI often circle privacy risks, data collection, and the potential misuse of technology. While the validity of these concerns is undeniable, they can certainly be addressed to mitigate the drawbacks of GenAI. The collective sentiment towards GenAI adoption isn’t out of the ordinary, as every transformative technology brings some level of uncertainty.

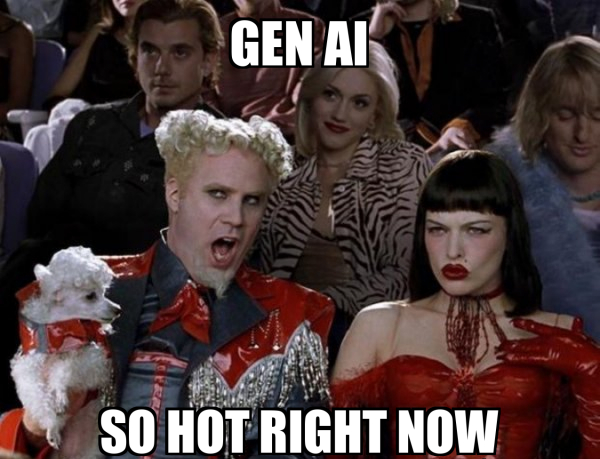

We must recognize that GenAI, like any other tool, can be constructive or destructive (more recent insights by Group-IB experts on the Middle East Economy). Where tools like ChatGPT’s API, with their less restrictive prompts, can help users do this:

ChatGPT key Logger – Scary Stuff. Educational purposes only

by inChatGPT

Image 1: Use of ChatGpt to create malicious code (shared by a Reddit user on the subreddit)

GenAI enables adversaries to orchestrate various social engineering ploys, including deepfakes, AI-generated synthetic media, phishing, Business Email Compromise (BEC), and the creation of polymorphic malware. Additionally, attacks aimed at AI systems like jailbreaking*, prompt injections*, and model poisoning attacks* further highlight risks to the integrity and reliability of these systems.

On the other hand, AI can aid cybersecurity defenders in strengthening their posture by identifying security blind spots as well:

Image 2: The use of ChatGpt to help identify vulnerabilities in code

*Jailbreaking: It refers to designing prompts to make GPT systems bypass their rules or restrictions

*Prompt injection: It involves manipulating or injecting malicious content into prompts to exploit the system.

*Model poisoning attacks: These attacks happen when cybercriminals can inject bad data into your model’s training pool and hence get it to learn something it shouldn’t.

Tapping into its capabilities, businesses can benefit from AI in a broader sense in three major aspects:

Cyber-modernizing security operations

Threat Detection and Risk Mitigation: Businesses can leverage GenAI to enhance their threat detection by parsing vast datasets to understand emerging cyber threats, tactics, and patterns. By analyzing historical data and real-time information, GenAI generates detection signatures that identify anomalies and evolving threats swiftly and effectively.

Phishing Detection: AI scrutinizes text and images, including logos and screenshots, to detect phishing content(an essential component of Group-IB’s Digital Risk Protection).

Fraud detection in digital web and mobile applications: Traditional approaches, such as transactional anti-fraud or KYC, have significant blind spots for fraud using social engineering or banking malware. Therefore, user behavior analysis and biometric characteristics, such as keyboard or cursor patterns, come to the rescue. This helps detect cases where a fraudster has taken control of an account and is attempting a fraudulent transaction.

Patching vulnerabilities: Security teams can utilize GenAI to identify vulnerabilities and automate the generation of security patches. These patches can then be tested for efficacy in a simulated or controlled environment.

Intelligent Response to Cyber Threats: With networks facing growing threats, GenAI enables a shift from rule-based systems to contextual analysis to help join the hidden links that reveal the complete chain of threat activity. LLM models are also employed to develop self-supervised threat-hunting AI, autonomously scanning network logs and data to provide adaptive and dynamic threat responses.

Code generation: GenAI can assist in various SecOps tasks, such as code generation, writing queries, and creating playbooks.

Additionally, it can assist in red teaming exercises by generating code to automate the simulation of real-world threats. Through GenAI, security teams can craft scripts and tools to automate diverse attack scenarios, providing a comprehensive security posture assessment. It assists red teams in generating exploit codes during reconnaissance, helping organizations quickly identify weaknesses in their defenses. Furthermore, GenAI enables the swift adaptation and evolution of attack simulations, ensuring ongoing improvement in security testing.

Structuring Data from Threat Intelligence: Artificial Intelligence (AI) makes threat intelligence data actionable through graphical user interface (GUI), automated reasoning, and real-time alerts. This assists in making informed security decisions and strategies based on the most relevant threats to your business.

Improving Identity Access Management (IAM) through automated capabilities: Adapting IAM deployments to meet the evolving needs of organizations necessitates automation. This is where GenAI comes into play. Identity automation enhances efficiency, accuracy, and security in managing user identities, access controls, and authentication mechanisms. It streamlines operations, minimizes manual effort, mitigates risks, and ensures compliance with regulatory requirements.

Enhancing SOC/SIEM efficiency through task automation and unified workflows: GenAI can automate repetitive and time-consuming tasks within the SOC environment. For instance, it can swiftly triage and prioritize security alerts based on predefined criteria. Additionally, it assists in workflow automation, such as creating runbooks and suggesting process refinements. Moreover, teams can leverage GenAI for succinct reporting to quantify and communicate the value of their security endeavors.

Malware Detection and Analysis: GenAI models can be trained to identify patterns of malicious behavior or anomalous activities in network traffic, aiding in detecting malware (including polymorphic malware that constantly changes code).

Enumerating Tactics, Techniques, and Procedures (TTPs) of Advanced Persistent Threats (APTs) and other actors: It is instrumental in identifying the kill chain, building defenses, and supporting intrusive cybersecurity engagements such as red teaming. Teams can also leverage GenAI to understand threat actors and their attack maneuvers and get answers to critical questions like “Where am I most vulnerable?” through natural language queries.

Bridging the gap between cybersecurity and resource shortage

Closing the divide between security vulnerabilities and resource constraints requires streamlining operations through automation. By automating routine tasks, teams can redirect their energy towards strategic decision-making, empowering them to focus on insights and strategy and driving security that contributes to business growth.

Reducing Toil

Slashing the requirement for additional resources: AI’s threat triaging and prioritization capabilities can significantly reduce the time, cost, and cognitive load involved in the risk mitigation process without requiring human intervention. This alleviates the strain on an overstretched security team and enables the organization to expand its threat response capabilities without needing additional personnel.

Streamlining workflows: GenAI can streamline workflows by integrating disparate security tools and systems into a unified platform. It can act as a central intelligence hub, aggregating data from various sources, including SIEM logs, threat intelligence feeds, EDR, and NDR tools.

Managing the slew of security alerts and redundant tasks: This is a significant challenge for security analysts who must review, prioritize, and investigate every security alert. Despite their best efforts, human capabilities are limited, leading to instances where alerts are ignored, false positives are pursued, and errors in judgment. While expecting humans to address every alert is unrealistic, automated processes can help blow off steam and handle certain tasks for our overworked security team.

GenAI offers endless possibilities. However, caution is crucial. While businesses look forward to deploying GenAI to power their operations, innovation, and growth ambitions, there’s a need to parallelly develop frameworks, compliance solutions, and ethical considerations to manage the technology.

Before we dig deeper into what the future looks like with GenAI, let’s gain a basic understanding of GenAI and how it works. Simply put, GenAI trains a computer to automatically create new content (code, audio, image, videos, etc). However, computers can’t simply create content; instead, they rely on vast amounts of data to generate content, which is essential for GenAI to function effectively.

Unlike traditional AI models that rely on large datasets and algorithms to classify or predict outcomes, Generative AI models are designed to learn the underlying patterns and structure of the data and generate sophisticated outputs that mimic human creativity.

LLM’s and GenAI

AI, Large Language Models (LLMs), and GenAI have been prevalent topics since ChatGPT’s 2022 launch. Predominantly discussed in connection with GPT (generative pre-trained transformers) models, they operate on language modeling—predicting the sequence of words based on the preceding input (prompt).

The technology utilizes neural networks to learn and predict in a nuanced and sophisticated manner. These models grasp general language characteristics like grammar, syntaxes, and semantics relationships by training on a vast corpus of language data and then truncating or removing some text to let AI predict the missing portions. This intelligence helps them understand prompts and generate an informed response.

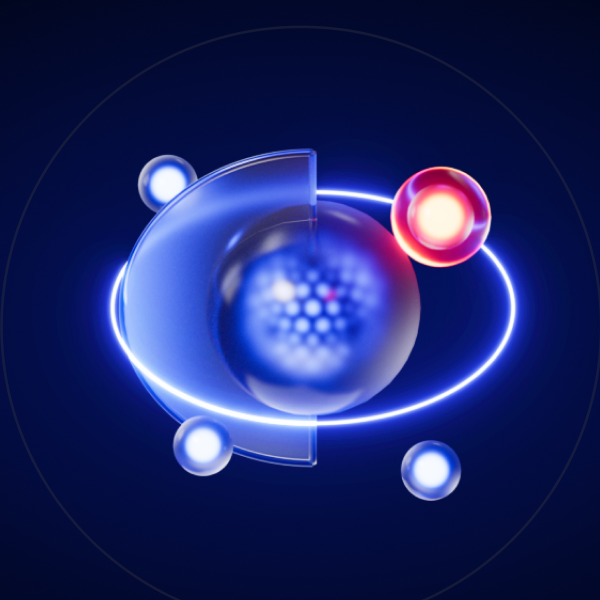

Image 3: The illustration of the basic neural network language model

This is just a basic neural network (based on 3X4+ 4 + 4X4+4 + 4X1+1= 41 parameters), and a GPT model consists of many such mini neural networks. ChatGPT, for example, is built on 1.5 billion parameters!

Neural networks rely on training data to learn and enhance their accuracy. GPT models fall under the category of Large Language Models (LLMs). These models are neural networks with extensive parameters, enabling them to learn complex patterns in language.

GenAI: Siding cyber defenders or adversaries?

While opinions may vary, the prevailing consensus leans towards the belief that GenAI could be a leverage to the adversaries. Nevertheless, many researchers and security professionals remain optimistic about the potential of GenAI to assist cyber defenders in the long term. This belief is supported by the role of data in the effectiveness of LLMs, of which cyber defenders have a surplus currently.

Nonetheless, it’s essential to remain vigilant and anticipate the creative ways in which cybercriminals may exploit GenAI for malicious purposes. While GenAI is already used to craft effective phishing emails or generate malware code, cybercriminals constantly adapt to launch more sophisticated attacks using the technology.

However, both defenders and adversaries begin from the same point. Cybersecurity experts possess the same tools and can leverage GenAI for AI vector identification and offensive security initiatives, all to outsmart the bad guys.

Where is GenAI in cybersecurity heading?

Despite its pros and cons, GenAI raises some red flags. However, there’s a way for businesses and cybersecurity professionals to shield against these threats and harness the technology’s potential—and the answer is again through AI. By leveraging AI-driven solutions, organizations can proactively identify and mitigate risks, bolstering their defenses while capitalizing on GenAI’s transformative capabilities.

Image 4: Gartner’s insights on GenAI adoption in the upcoming years

Resisting GenAI as a valuable defense tool could hinder innovation and limit the potential of security strategies, as AI is here to stay and transform conventional security systems. Embracing change is essential. Managing its development is key to effectively and safely integrating GenAI into cybersecurity.

GenAI will play a definitive role in shaping the future cybersecurity landscape. However, leveraging the technology requires due diligence, research, and an understanding of its function, accuracy, and maintenance. Adopting GenAI with sound governance is essential for its successful integration and upkeep.

For businesses eager to utilize GenAI to address business challenges but have concerns about its adoption, schedule a quick consult with Group-IB’s Audit and Consulting experts for governing regulations, strategies, and how Group-IB’s AI-enabled technologies can future-proof your cybersecurity stance.

How does Group-IB leverage AI to unleash the next generation of cyber defense?

Integrating AI with cybersecurity offers a shift from a reactionary to proactive apporach for expanding attack surfaces, endpoints, network and enterprise-wide threat management. These defenses are built on comprehensive, agnostic, and forward-leaning technologies and cyber vision, which Group-IB has embedded as its mission, proving to be one of its strongest differentiators.

Our forward-leaning and nuanced approach to solving persistent cybersecurity challenges for businesses across industries has consistently placed us at the forefront of change, whether it be for our cyber-fraud kill chain or AI-powered innovations integrated into our core technologies.

One successful integration of AI is witnessed in Group-IB Managed Extended Detection and Response (MXDR). Traditional cybersecurity platforms rely on signatures or thresholds to detect known threats, comparing incoming data to predefined patterns. However, this approach has limitations in effectively identifying new or previously unseen threats. With AI, Group-IB’s MXDR has evolved anomaly detection.

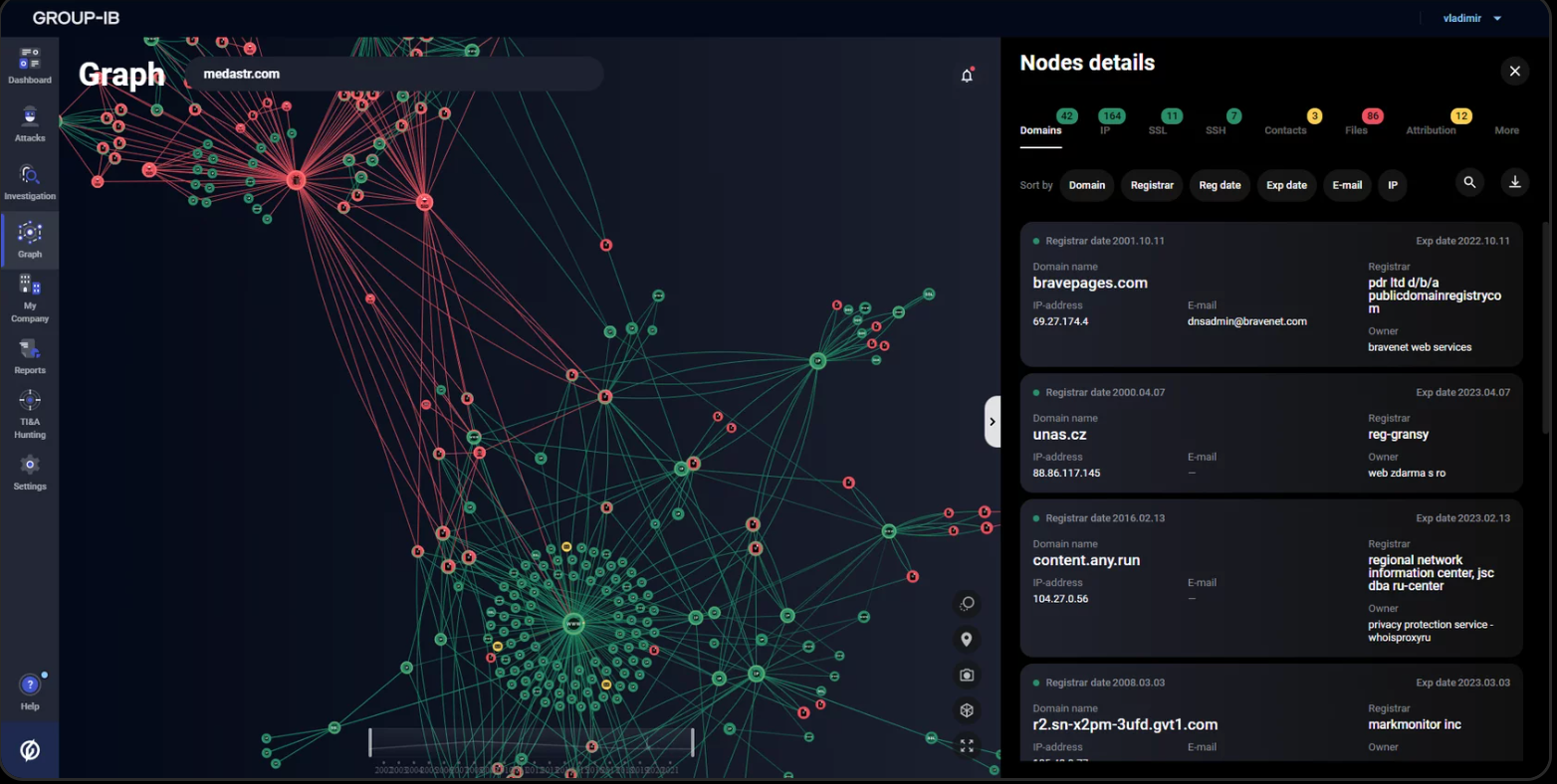

In fragmented security environments, detecting and investigating a threat can lead to added questions about the attacker’s identity, data exfiltration, internal interactions, or network lateral movement. Finding answers across disparate data and tools is challenging. The graph-based analysis addresses this by correlating data and automating insights and responses, enhancing cybersecurity operations.

Throughout the years, Group-IB experts have used graphs to detect such threats. Each case has its own set of data and its algorithm to establish links, which can be visualized in a graph, an instrument that once used to be Group-IB’s internal tool, available only to the company’s staff.

Network graph analysis became Group-IB’s first internal tool to be incorporated into the company’s public products – Threat Intelligence, Managed XDR, Digital Risk Protection, and Fraud Protection. Automated network graph analysis systems are now widely leveraged for cybercrime investigations, threat attribution, and detection of phishing & fraud.

Image 5: Screenshot of Group-IB’s Network graph analysis tool

This was just one of the AI-enabled capabilities added across our security stack, including some major updates made to our technologies this year. At Group-IB, we harness AI to help enrich our technologies with newer features such as biometric intelligence, GenAI, explainable AI, automated violation detection and takedowns, and more to make our fight against cybercrime and fraud formidable.