Introduction

Deepfake vishing – fraudulent phone calls that leverage AI‑generated voice clones – has rapidly evolved into one of today’s most sophisticated social‑engineering threats. This research dissects the full attack chain, from harvesting target audio on social media to crafting hyper‑realistic calls that bypass traditional caller‑ID and voice‑biometric checks.

Drawing on Group-IB’s experience in real‑world incidents and threat‑intelligence telemetry, this research highlights the sectors most at risk: finance, executive services, and remote‑work help desks. Through detection techniques such as acoustic fingerprinting and multimodal authentication, this research aims to provide cybersecurity professionals with a layered defense strategy that blends AI‑powered anomaly analysis with robust employee awareness training.

By mapping attacker tactics to defensible controls, this research provides security teams with actionable guidance to mitigate deepfake vishing before it damages brand trust and bottom lines.

Key Discoveries

- Global economic impact is exploding: Deepfake‑enabled fraud losses are forecast to reach US$40 billion by 2027.

- Asia‑Pacific is the current hotspot: Regional deepfake‑related fraud attempts surged 194% in 2024 compared to 2023, with voice‑based scams leading the rise.

- Voice cloning now takes mere seconds: Modern AI-enabled speech models can create a realistic impersonation from just a few seconds of target audio, slashing technical barriers for attackers.

- Single incidents can top seven figures: More than 10% of surveyed financial institutions have suffered deepfake vishing attacks with losses exceeding US$1 million, and the average loss per case is approximately US$600,000.

- Stolen money is almost never recovered: Due to rapid laundering through money‑mule chains and crypto mixers, fewer than 5% of funds lost to sophisticated vishing scams are ever recovered.

What is Phishing?

Phishing is a form of cybercrime involving emails, text messages, phone calls, or advertisements from scammers who impersonate legitimate individuals, businesses, or institutions – such as banks, government agencies, or courier services – with the intent of stealing money and/or sensitive information.

Victims are typically tricked into revealing usernames, passwords, banking credentials, personal details, or debit/credit card information by clicking on malicious links or engaging in fraudulent phone calls. Once the information is obtained, unauthorized transactions may be carried out on the victims’ accounts or cards, leading to financial losses and potential identity theft.

What is Vishing?

Vishing – short for voice phishing – is a type of phishing in which scammers use fraudulent phone or in-app calls to impersonate authority figures such as bank employees, government officials, police officers, or tech support representatives.

Unlike phishing emails or links, the direct interaction over the phone creates emotional pressure, making the scam feel more urgent and personal. Scammers often impersonate authority figures (e.g., bank staff or government officers), claim that there are issues with the victim’s bank account or that the victim is under investigation for fraudulent activity, and then demand personal or financial information under the pretext of verification or resolution.

The “tech support” scam is another common vishing scenario, in which scammers contact victims under the pretext that their mobile device or computer has been compromised. The scammer then instructs the victim to install remote access software, giving the attacker full control over the device and access to sensitive data.

What is Deepfake Vishing?

Deepfake vishing differs from traditional vishing by using artificial intelligence to clone a person’s voice. Instead of the scammer speaking in their own voice, machine learning models are used to imitate the voice of someone the victim knows and trusts, such as a family member, colleague, or company executive.

For example, a family member may receive a call that sounds exactly like their loved one, urgently requesting money for an emergency. Similarly, a finance team may receive a voice message that mimics their CEO, instructing them to authorize an urgent wire transfer for a supposedly critical business deal.

These scams are highly deceptive due to the hyper-realistic nature of the cloned voice and the emotional familiarity it creates. As a result, deepfake vishing is significantly harder to detect than traditional scams and has a much higher success rate.

The Rise of AI-Powered Scams

In recent years, AI-enabled fraud has risen globally, particularly schemes involving deepfaked voice, images, and video. According to industry projections, global financial losses attributed to AI-enabled fraud are expected to reach US$40 billion by 2027, up from approximately US$12 billion in 2023.

Within the Asia-Pacific region, the threat landscape is evolving at an even faster pace. Compared to 2023, AI-related fraud attempts surged by 194% in 2024. A significant portion of these incidents involved voice and video-based malicious calls, underscoring the growing prevalence and impact of deepfake vishing attacks.
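As a back-of-the-envelope reading of the figures above, the short Python sketch below converts the two endpoint values into an implied annual growth rate and the 194% surge into a year-over-year multiplier. It assumes smooth compounding between 2023 and 2027, which the cited projection does not state, and the function names are illustrative only.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


def surge_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a year-over-year multiplier."""
    return 1 + percent_increase / 100


# Figures quoted in the text: US$12 billion (2023) to US$40 billion (2027).
global_cagr = implied_cagr(12.0, 40.0, 2027 - 2023)

# A 194% increase means 2024 attempts were 2.94 times the 2023 level.
apac_multiplier = surge_multiplier(194)

print(f"Implied global growth rate: {global_cagr:.1%} per year")
print(f"APAC attempts in 2024 vs 2023: {apac_multiplier:.2f}x")
```

Under that assumption, the global projection works out to roughly 35% growth per year, while the Asia-Pacific figure implies attempts nearly tripled in a single year.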

Why Is Deepfake Vishing on the Rise?

The widespread adoption of remote work in the post-COVID-19 era, combined with the rapid advancement and accessibility of AI voice synthesis technologies, has contributed significantly to the increase in deepfake vishing. Publicly available voice recordings – extracted from webinars, interviews, social media, or leaked communications – make voice cloning both feasible and scalable for threat actors.

The Damage from Deepfake Vishing: Emotional and Financial Toll

The impact of deepfake vishing is profound across both personal and organizational domains. According to a global survey, financial institutions report an average loss of US$600,000 per incident involving deepfake-related fraud. Over 10% of surveyed institutions indicated that individual cases exceeded US$1 million in financial losses.

At the personal level, financial loss is often accompanied by emotional distress and psychological harm. For instance, in a documented case in Canada, a grandmother was deceived by a deepfake voice mimicking her grandson, under the pretext that he had been arrested by the police and needed money to be released. Believing the fabricated emergency, the grandmother transferred US$6,500 (CAD$9,000) to the scammer.

Who Is Being Targeted by Deepfake Vishing – and Why It Works

Deepfake vishing relies heavily on social engineering tactics and emotional manipulation, which make certain individuals and roles especially vulnerable:

- Corporate Executives

CEOs, CFOs, and other high-ranking executives are prime targets due to their authority over corporate funds and the availability of their voices on the Internet. Fraudsters exploit this trust to pressure finance teams into executing urgent fund transfers – often without proper verification.

- Financial Employees

Employees in finance or treasury departments are commonly targeted by AI-enabled vishing calls from leadership figures. The pressure to act swiftly and follow chain-of-command instructions makes them susceptible to deception.

- Elderly and Emotionally Distressed Individuals

This group is particularly vulnerable due to limited digital literacy and unfamiliarity with synthetic voice technologies. Familiar-sounding voices often bypass skepticism, leading to emotionally driven decisions.

Why AI-enabled Vishing is Far More Dangerous

Compared to traditional vishing, deepfake vishing introduces a false sense of familiarity and trust by closely mimicking a known individual’s voice – including speech patterns, accents, and emotion. AI models today require only a few seconds of voice recording to generate highly convincing voice clones, for free or at very low cost.

The continued misuse of such models, combined with their scalability and low technical and financial barriers, makes AI-powered vishing more widespread and harder to contain. Furthermore, deepfake vishing undermines existing defenses. For example, standard verification mechanisms, such as caller ID checks or voice-based authentication, are rendered ineffective when the fraudulent voice is perceived as trusted and legitimate.

The Anatomy of a Deepfake Vishing Attack

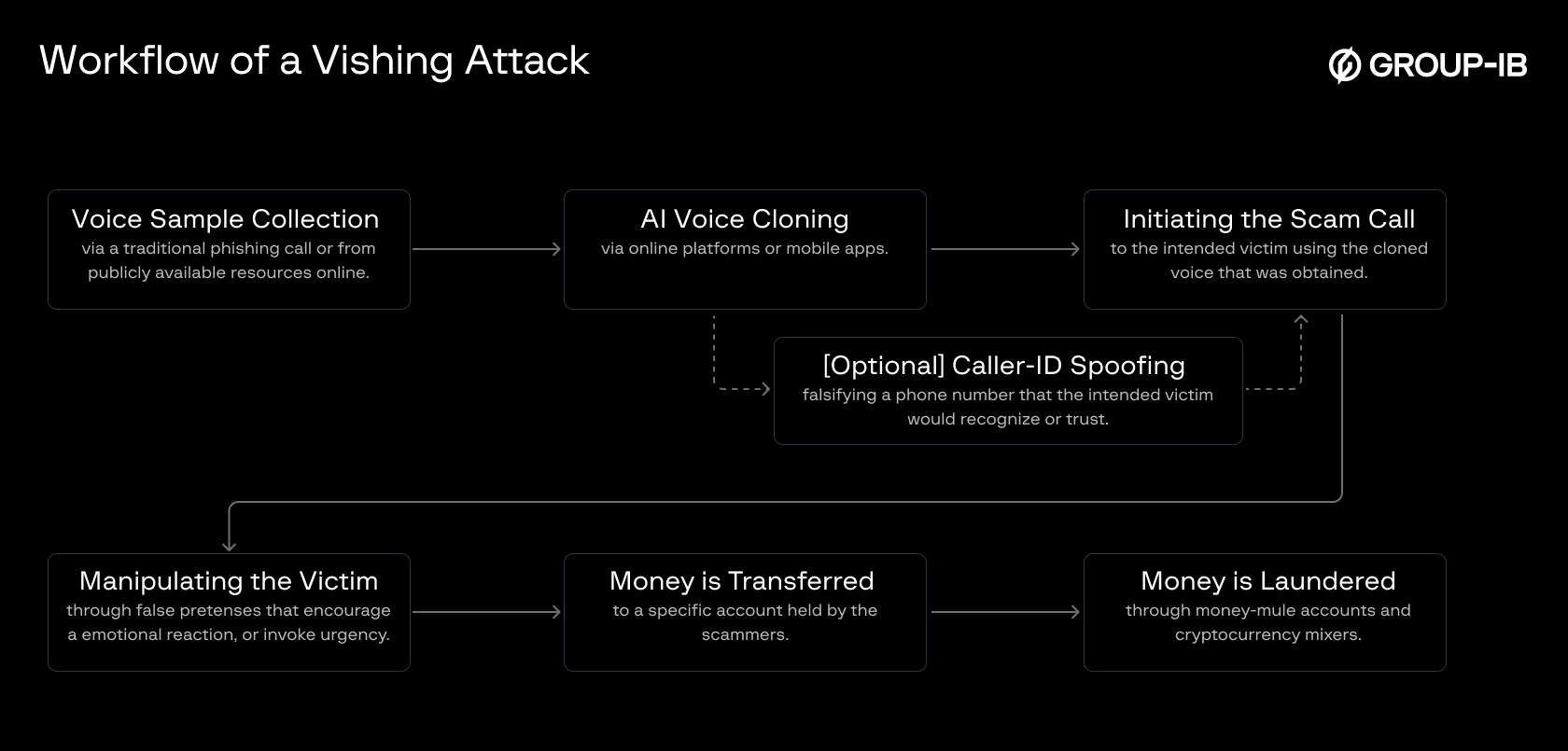

Figure 1. A typical workflow of a vishing attack.

Step 1: Voice Sample Collection

The first step in a deepfake vishing attack typically involves collecting voice samples of the target that the fraudsters intend to impersonate. Common sources include social media platforms (e.g., YouTube, TikTok), recorded webinars, virtual meetings, voicemails, and even short phone conversations. For example, answering an unknown call with a brief greeting like “Hello? Who is this?” may allow a scammer to capture enough audio for cloning.

Step 2: AI Voice Cloning

Once audio samples are acquired, fraudsters may feed the recordings to advanced AI speech synthesis models such as Google’s Tacotron 2, Microsoft’s Vall-E, or commercial services like ElevenLabs and Resemble AI. These tools use text-to-speech (TTS) technology to generate customized speech that replicates the voice’s tone, pitch, accent, and speaking style with remarkable realism, even across multiple languages.

There are two primary methods by which cloned voices are operationalized in vishing attacks:

- Pre-generated Audio (e.g., TTS): Pre-written scripts are converted into speech using the cloned voice and played during the call.

- Real-time Voice Transformation: The scammer’s live speech is transformed into the cloned voice using voice-masking or transformation software.

Although real-time impersonation has been demonstrated by open-source projects and commercial APIs, real-time deepfake vishing in the wild remains limited. However, given ongoing advancements in processing speed and model efficiency, real-time usage is expected to become more common in the near future.

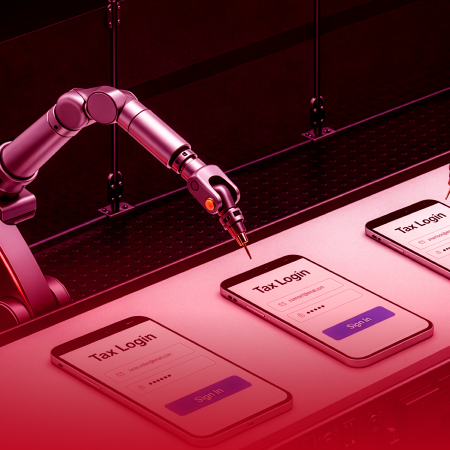

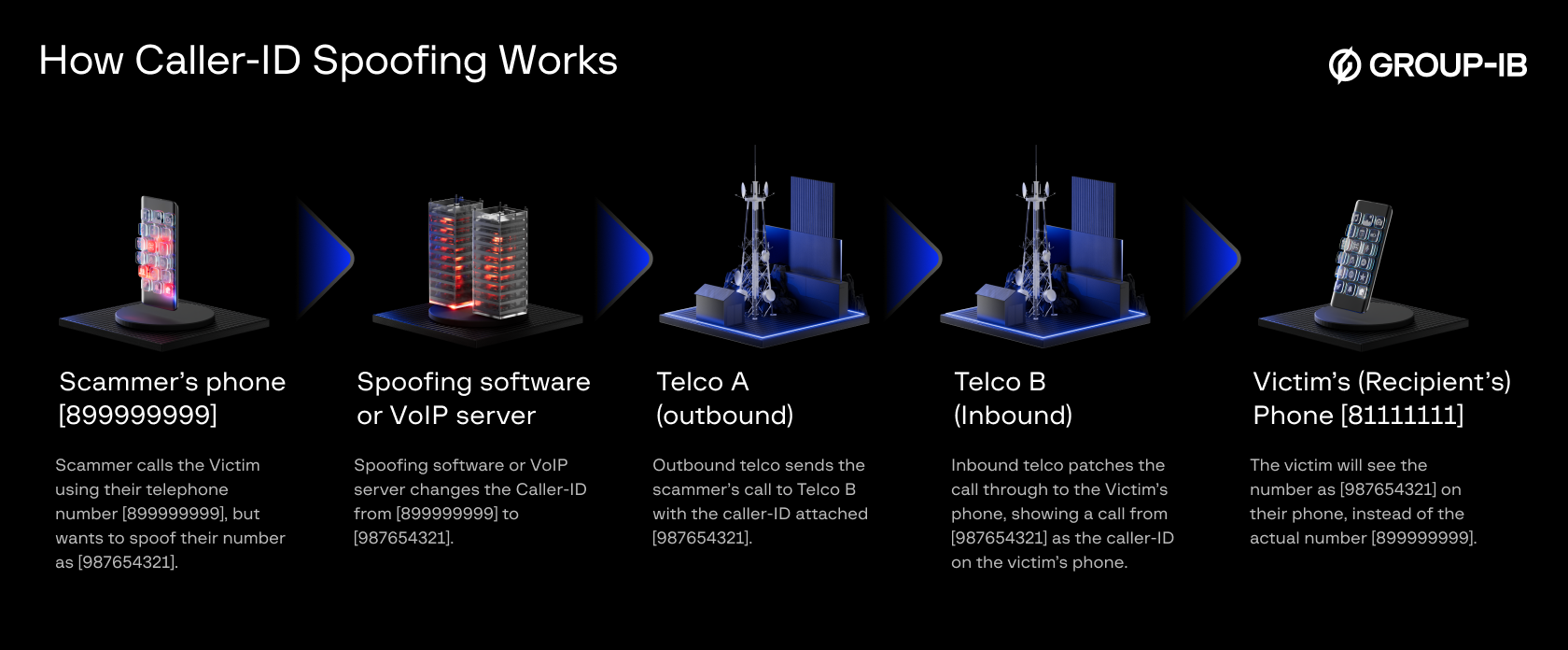

Optional: Caller ID Spoofing

Caller ID spoofing is typically performed using VoIP (Voice over Internet Protocol) platforms (e.g., in-app calling), which allow fraudsters to program any number – including those belonging to banks, government agencies, or company executives – into the call header. This manipulation increases the likelihood that the call will be answered and perceived as credible.

Figure 2. How Caller-ID spoofing works.
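To make the "call header" point concrete for defenders, the sketch below assumes the call is signalled over SIP, where the `From` header is filled in by the caller's own software, while `P-Asserted-Identity` (RFC 3325) is added by a trusted network element. It flags calls where the two disagree or where no network-asserted identity is present. The sample message, phone numbers, hostnames, and function names are all hypothetical, and production systems would more likely rely on carrier-side mechanisms such as STIR/SHAKEN than on a header comparison like this one.

```python
import re
from typing import Optional

# Captures the user part of a SIP or tel URI, e.g. <sip:+15550199@host>.
_URI_USER = re.compile(r"<(?:sips?|tel):([^@>;]+)")


def parse_headers(raw_message: str) -> dict:
    """Return a lowercase header-name -> value map (first occurrence wins)."""
    headers = {}
    for line in raw_message.splitlines()[1:]:  # skip the request line
        if not line.strip():
            break  # a blank line ends the header section
        name, sep, value = line.partition(":")
        if sep:
            headers.setdefault(name.strip().lower(), value.strip())
    return headers


def uri_user(header_value: str) -> Optional[str]:
    """Extract the number/user part from a header value, if any."""
    match = _URI_USER.search(header_value)
    return match.group(1) if match else None


def caller_id_flags(raw_message: str) -> list:
    """Compare the caller-supplied identity with the network-asserted one."""
    headers = parse_headers(raw_message)
    claimed = uri_user(headers.get("from", ""))
    asserted = uri_user(headers.get("p-asserted-identity", ""))
    flags = []
    if asserted is None:
        flags.append("no network-asserted identity")
    elif claimed != asserted:
        flags.append(f"claimed {claimed} but network asserted {asserted}")
    return flags


# Hypothetical INVITE: the display name and number imitate a bank,
# but the network asserts a different originating number.
SAMPLE_INVITE = "\r\n".join([
    "INVITE sip:+15550100@pbx.example.net SIP/2.0",
    'From: "Example Bank" <sip:+15550199@voip.example.org>;tag=1928',
    "To: <sip:+15550100@pbx.example.net>",
    "P-Asserted-Identity: <sip:+15550123@carrier.example.com>",
    "",
])

print(caller_id_flags(SAMPLE_INVITE))
```

A mismatch is only a signal, not proof of fraud: legitimate call centers and forwarding services can also present numbers that differ from the asserted identity.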

Step 3: Initiating the Scam Call and Manipulating the Victims

Once the call begins, psychological manipulation becomes the key weapon. Regardless of the chosen scenario, fraudsters employ several core strategies: impersonating authority figures, generating urgency or fear, and suppressing the victim’s skepticism. Common vishing storylines include:

1. Government Official Impersonation

Scammers may pose as police officers, immigration agents, embassy staff, or tax authorities. The fabricated scenarios often involve:

- Alleged unpaid taxes or fines

- Visa or immigration complications

- Suspicion of criminal activity

- Ongoing identity theft investigations

- Calls claiming to come from the victim’s home-country embassy

2. Bank Staff Impersonation

The caller claims to be from the bank’s fraud or security department, citing:

- Suspicious or large transactions

- Unapproved loan or credit card applications

- Attempts to link a new device or phone number

- Internal fraud investigations

- Threat of account suspension or freezing

3. Executive Impersonation (CEO Fraud)

In scenarios targeting the corporate sector, deepfake voices are used to impersonate CEOs, CFOs, or IT directors. Finance personnel may receive calls instructing them to:

- Process time-sensitive vendor payments

- Execute confidential wire transfers

- Settle urgent legal, tax, or regulatory issues

- Fulfill VIP client obligations or partner requirements

- Respond to a cybersecurity breach or recovery

4. Family, Friends, Colleagues

In scenarios involving close contacts, deepfake voices are typically used to mimic a trusted relative, friend, or coworker.

Common fabricated scenarios include:

- Medical emergency or hospitalization

- Travel emergency or stranded abroad

- Urgent loan request or temporary borrowing

- Legal trouble or arrest

On the call, scammers may incorporate realistic details such as names, account info, or recent projects gleaned from social media or data leaks via Dedicated Leak Sites (DLS), to make the story more plausible. Victims are often instructed not to inform anyone else, further isolating them and reducing the chances of verification. When a call sounds familiar, the story seems believable, and the caller applies urgent pressure, people stop thinking critically and often comply.
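The manipulation cues described in this step (authority, urgency, secrecy, and a payment demand) can be turned into a simple awareness aid. The sketch below is a deliberately naive keyword check over a call transcript; the phrase lists, category names, and threshold are illustrative assumptions rather than a validated detection model, and a real system would need far more robust language handling.

```python
# Hypothetical phrase lists, one per manipulation cue named in the text.
RED_FLAGS = {
    "urgency": ("immediately", "right now", "urgent", "within the hour"),
    "secrecy": ("don't tell", "do not tell", "keep this confidential", "between us"),
    "authority": ("police", "tax office", "fraud department", "this is your ceo"),
    "payment": ("wire transfer", "gift card", "crypto", "transfer the money"),
}


def red_flag_categories(transcript: str) -> set:
    """Return the cue categories whose phrases appear in the transcript."""
    # Normalize case and curly apostrophes so "Don’t tell" still matches.
    text = transcript.lower().replace("\u2019", "'")
    return {
        category
        for category, phrases in RED_FLAGS.items()
        if any(phrase in text for phrase in phrases)
    }


def needs_verification(transcript: str, threshold: int = 2) -> bool:
    """Recommend out-of-band verification when several cues co-occur."""
    return len(red_flag_categories(transcript)) >= threshold


sample_call = (
    "This is urgent. I need you to make a wire transfer today, "
    "and please don't tell anyone until the deal closes."
)
print(sorted(red_flag_categories(sample_call)))
print(needs_verification(sample_call))
```

Counting distinct categories rather than individual keywords reflects the pattern described above: it is the combination of pressure, secrecy, and a money request that should prompt a pause, not any single word.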

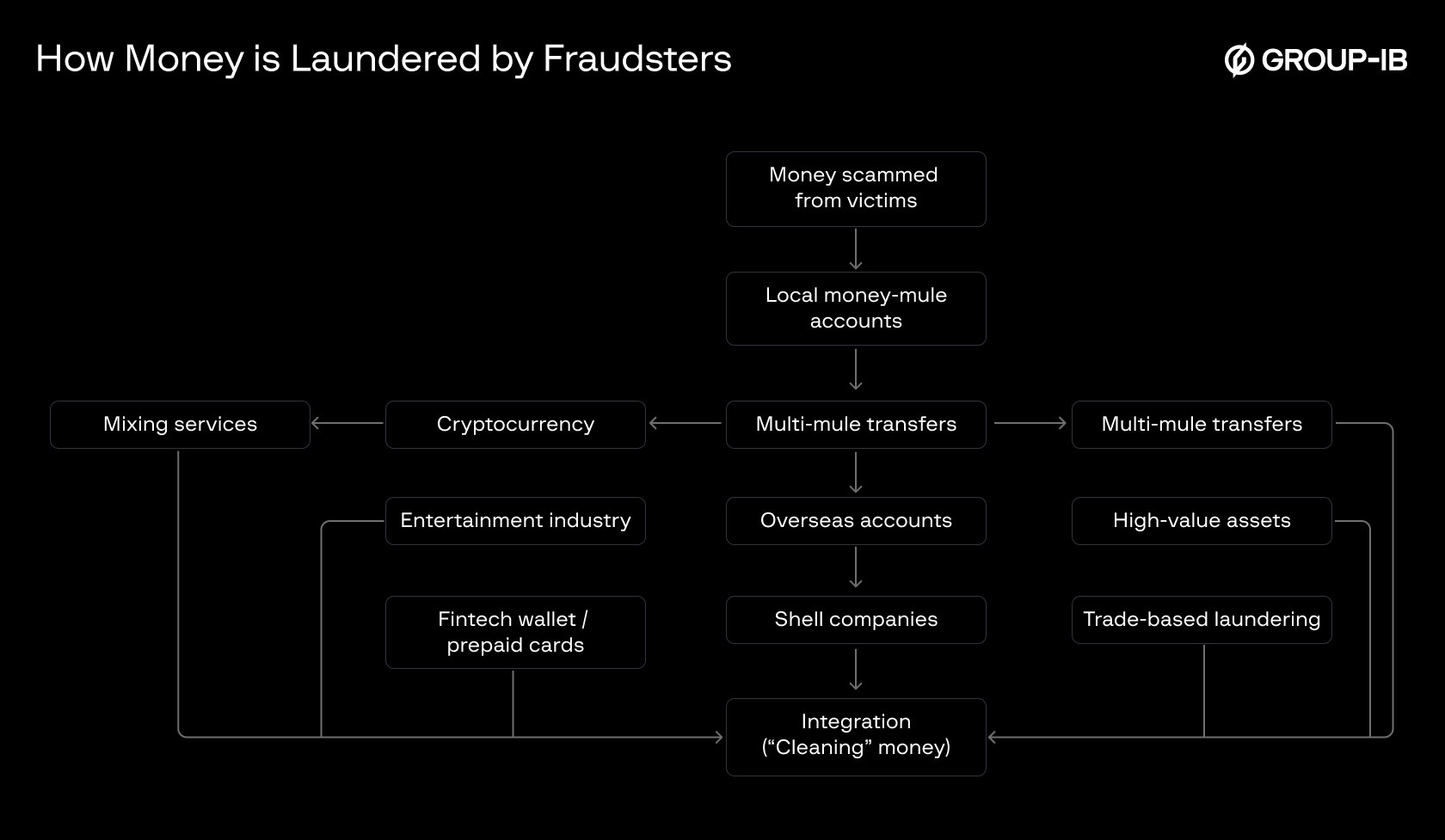

Step 4: Where the Money Goes – and Why It’s Hard to Recover

Once funds are transferred to fraudsters, the opportunity for recovery becomes extremely limited, as the stolen money is often fully laundered by the time victims realize what has happened. According to global cybercrime data, fewer than 5% of funds stolen through sophisticated vishing attacks are ever recovered.

Fraudsters launder stolen money by swiftly moving it through multiple layers to obscure its origin and avoid detection. The most common method involves transferring funds to local bank accounts held by money mules, who either forward the money through a chain of other accounts or send it overseas to jurisdictions with weak reporting and limited cooperation, making recovery extremely difficult. Increasingly, stolen funds are also converted into cryptocurrency, where the decentralized and pseudo-anonymous nature of blockchain hampers tracing—especially when mixing services are used to break transaction trails.

In addition, fraudsters may channel illicit funds through online casinos, shell companies, or trade-based laundering schemes (e.g., fake invoices or overpriced goods). Some invest in high-value assets like real estate or luxury items, or route money through unregulated fintech wallets and prepaid cards. These complex, cross-border laundering networks are designed to fragment and disguise the money trail, making legal recovery extremely slow, if not impossible.

Figure 3. How money is laundered by fraudsters.
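The layering pattern described above, in which mule accounts receive funds and forward almost all of them shortly afterwards, lends itself to a simple monitoring heuristic. The sketch below flags accounts that pass on at least 90% of an incoming transfer within one hour. The `Transfer` record, the window, the ratio, and the account names are all assumptions chosen for illustration, and the heuristic is not a description of any specific bank's controls.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Transfer:
    timestamp: datetime
    source: str
    destination: str
    amount: float  # assumed positive


def pass_through_accounts(transfers, window=timedelta(hours=1), ratio=0.9):
    """Flag accounts that forward most of an incoming transfer within `window`."""
    flagged = set()
    for credit in transfers:
        account = credit.destination
        deadline = credit.timestamp + window
        # Sum everything the receiving account sent out shortly after the credit.
        forwarded = sum(
            t.amount
            for t in transfers
            if t.source == account and credit.timestamp < t.timestamp <= deadline
        )
        if forwarded >= ratio * credit.amount:
            flagged.add(account)
    return flagged


day = datetime(2025, 1, 15)
ledger = [
    Transfer(day.replace(hour=9, minute=0), "payroll", "alice", 3000.0),
    Transfer(day.replace(hour=9, minute=20), "alice", "grocer", 50.0),
    Transfer(day.replace(hour=10, minute=0), "victim", "mule_a", 10000.0),
    Transfer(day.replace(hour=10, minute=12), "mule_a", "mule_b", 9800.0),
    Transfer(day.replace(hour=10, minute=30), "mule_b", "offshore", 9700.0),
]
print(sorted(pass_through_accounts(ledger)))
```

The nested scan is quadratic in the number of transfers, which is acceptable for a sketch; the narrow time window is the point, since it mirrors why funds are often gone before the victim realizes what has happened.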

How We Can Detect and Defend Against Deepfake Vishing

As attackers exploit the speed, scale, and believability of AI-generated voice impersonation, countermeasures must be implemented across individual, organizational, and national levels.

Policy and regulation play a critical role in forming a unified front against the misuse of generative AI in fraud. Governments should establish clear legal frameworks that criminalize the unauthorized use of synthetic media, including voice cloning. Penalties must be well-defined and consistently enforceable to serve as effective deterrents.

Institutions and organizations must adopt a layered internal defense strategy. Mandatory training should be provided to executives and staff on how deepfake technologies function and how impersonation scams typically unfold. All requests involving fund transfers, sensitive data, or account access should be validated through at least two separate communication channels—for example, confirming a phone call via email or secure internal messaging.
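The two-channel rule can be expressed as a small policy check. In the sketch below, a sensitive request is approved only once it has been seen on at least two distinct channels, so a phone instruction that is "confirmed" by another phone call does not qualify. The class, field, and channel names are hypothetical, and the sketch assumes the confirmation is initiated by the recipient using contact details already on file rather than details supplied by the caller.

```python
from dataclasses import dataclass, field


@dataclass
class SensitiveRequest:
    description: str
    origin_channel: str  # channel on which the request first arrived
    confirmations: set = field(default_factory=set)

    def confirm(self, channel: str) -> None:
        """Record a confirmation received over the given channel."""
        self.confirmations.add(channel.lower())

    def is_approved(self, required_channels: int = 2) -> bool:
        """Approve only if the request was seen on enough distinct channels."""
        channels = {self.origin_channel.lower()} | self.confirmations
        return len(channels) >= required_channels


request = SensitiveRequest("Urgent wire transfer to new vendor", "phone")
print(request.is_approved())  # only the original phone call so far

request.confirm("phone")      # a second call on the same channel adds nothing
print(request.is_approved())

request.confirm("email")      # confirmation over a separate channel
print(request.is_approved())
```

Modelling channels as a set makes the "separate" requirement explicit: repeated contact over the channel the attacker already controls never increases the count.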

For the general public, awareness remains the first line of defense. Any unexpected call that involves urgent demands for money, login credentials, or personal information – particularly from someone claiming to be a known authority – should be approached with skepticism. Always pause and verify before taking action.

Conclusion

The rise of deepfake vishing marks a critical turning point in the landscape of cyber fraud. What was once limited to deceptive emails and fake links has evolved into hyper-realistic voice scams powered by generative AI. These attacks are not only financially damaging but emotionally manipulative – exploiting trust, authority, and familiarity to bypass human defenses. As evidenced throughout this blog, the threat is no longer hypothetical. It is active, growing, and targeting both individuals and institutions with increasing precision.

Addressing this challenge requires more than advanced detection tools – it demands a united response. Governments must establish clear regulatory frameworks to criminalize the malicious use of synthetic media. Organizations must adopt layered verification protocols and train employees to recognize voice-based deception. Individuals must remain cautious and question urgency, even when the voice sounds familiar. As we move deeper into the era of AI-enhanced fraud, vigilance, education, and collaboration across sectors will be essential to preserve security and trust in our digital interactions.

Frequently Asked Questions

What is deepfake vishing?

Deepfake vishing is a type of voice phishing scam where cybercriminals use AI to clone the voice of someone familiar—like a boss, colleague, or family member—to deceive victims into transferring money or revealing sensitive information.

How is deepfake vishing different from traditional vishing?

Traditional vishing uses a scammer’s real voice, while deepfake vishing uses AI-generated voice clones to impersonate trusted individuals. This makes deepfake vishing more convincing and harder to detect.

Who is most at risk of deepfake vishing attacks?

High-risk targets include corporate executives (e.g., CEOs, CFOs), finance personnel, elderly individuals, and emotionally vulnerable people—especially those unfamiliar with AI-generated voice technology.

How do scammers create deepfake voices?

Scammers collect audio samples from online sources such as social media, webinars, or phone calls. They then use AI voice synthesis tools like Tacotron 2, Vall-E, or ElevenLabs to generate realistic cloned voices.

What tactics do scammers use in deepfake vishing calls?

Scammers typically impersonate authority figures (e.g., police, bank staff, executives), create urgency, and use emotional pressure to manipulate victims into acting without verifying the request.

What happens after money is sent to scammers?

Once funds are transferred, they are quickly laundered through bank accounts, crypto wallets, or shell companies. Recovery is extremely difficult—less than 5% of such stolen funds are ever recovered.

Why is caller ID not a reliable defense against deepfake vishing?

Caller ID can be spoofed using VoIP tools, allowing scammers to display a trusted number (e.g., from a bank or a known contact), which tricks victims into answering the call.

Can real-time voice deepfakes be used during live calls?

Yes. Although still emerging, real-time voice transformation tools can alter a scammer’s voice on live calls, and this technology is expected to become more widespread and dangerous.

What can organizations do to prevent deepfake vishing?

They should implement multi-channel verification for financial requests, train staff to recognize voice scams, and adopt internal protocols that require secondary approvals for sensitive actions.

How can individuals protect themselves from deepfake voice scams?

Stay skeptical of unexpected urgent calls—even if the voice sounds familiar. Always verify through a second method (e.g., text, email, or a direct callback), and never share sensitive info over the phone without confirmation.