Introduction

In the ever-expanding digital world, automation has revolutionized industries, enhancing efficiency and redefining human-computer interaction. Artificial intelligence (AI)-powered agents like OpenAI’s Operator, DeepSeek, and Alibaba’s Qwen are the latest advancements in this space, promising to optimize workflows with minimal human oversight.

Yet, history has repeatedly shown that technology, no matter how well-intended, can be twisted for malicious purposes. Just as browser automation tools designed for quality assurance can be repurposed by cybercriminals to test stolen credit card details at scale, fraudsters are now eyeing AI-driven automation to refine and accelerate their schemes. With thousands of victims falling prey to scams daily, stolen financial data is flooding underground networks, from encrypted messaging platforms to dark web marketplaces. The rise of autonomous AI systems introduces a pressing question: what happens when the same tools that drive business innovation are weaponized for cybercrime? As fraudsters embrace AI-powered automation, the challenge is no longer just fighting known threats; it’s staying ahead of a rapidly evolving digital battlefield.

To illustrate this point, let’s take a deep dive into a previous case in which a similar innovation, browser automation built for quality assurance (QA) testing, was adopted by cybercriminals to test compromised cards at mass scale.

Fraud Scheme Summary

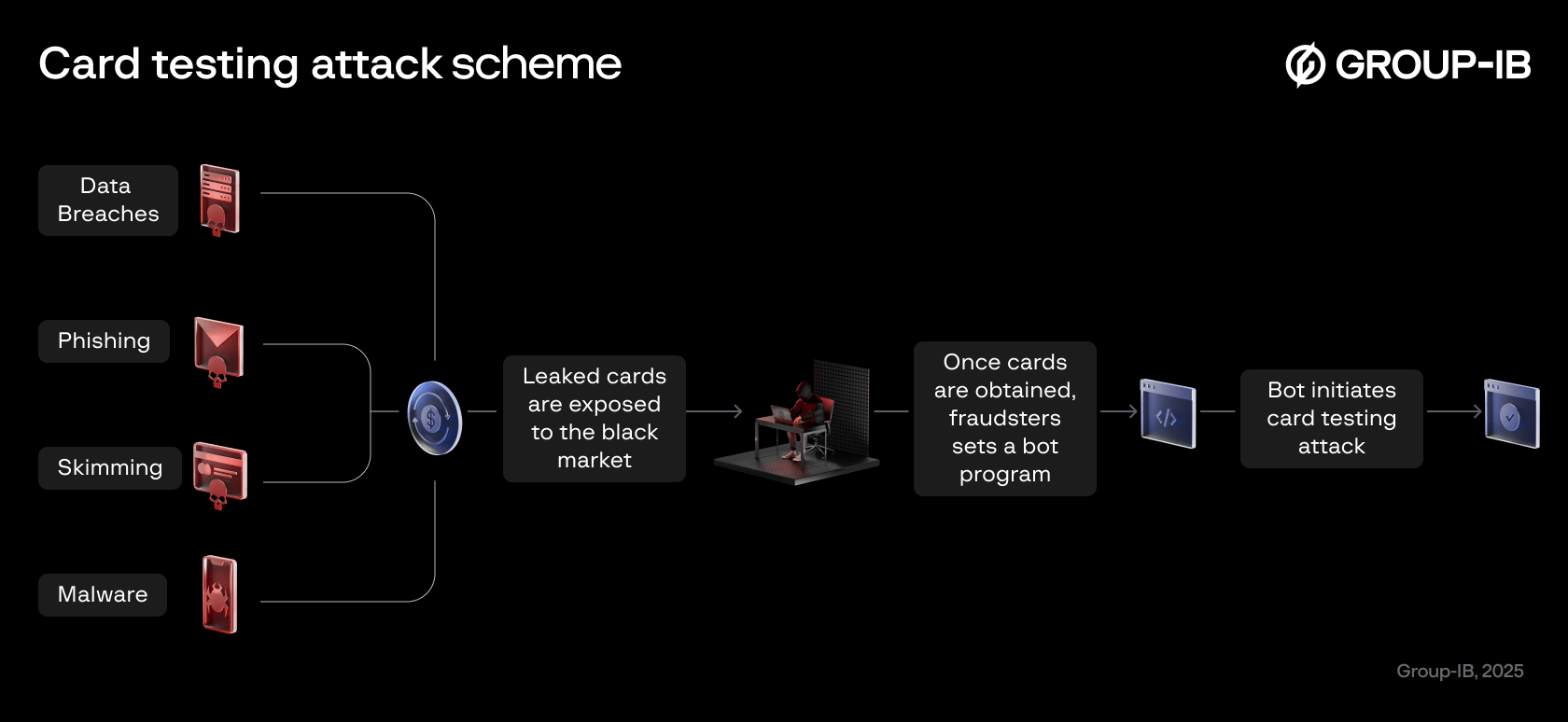

A card testing attack is a type of card-not-present (CNP) fraud in which the fraudster verifies stolen credit card details by making small, easily overlooked purchases across various websites. These purchases let fraudsters confirm that a card is active, not blocked, and has sufficient funds for later, larger purchases. They often evade detection by the card owner and by fraud prevention systems, which usually focus on larger spending patterns.

Figure 1. Card Testing Attack Scheme

Once fraudsters obtain the stolen card details, they need a way to verify whether these cards are still active and usable. This is where bot-driven card testing attacks come into play. These operations follow a structured process, leveraging underground markets, automation, and sophisticated evasion techniques to avoid detection.

The cycle typically begins with stolen card credentials, which are obtained through various illicit means, such as phishing emails, social media scams, malware-infected websites and applications, or compromised ATMs and POS terminals (card skimming). These stolen credentials are then sold on online black markets, where cybercriminals, often referred to as “bot herders,” purchase them in bulk.

Once in possession of the stolen card data, fraudsters use automated bot programs to test these cards on multiple e-commerce websites. To evade detection, the bot traffic is routed through residential proxy networks, making it appear as though the transactions are coming from legitimate customers. The bot systematically attempts small transactions using the stolen credentials, allowing fraudsters to identify active cards that can later be used for high-value fraudulent purchases.

This entire operation is highly automated, making it challenging for fraud detection systems to catch these fraudulent transactions in real time. By the time the actual cardholder notices unusual activity, fraudsters may have already validated multiple cards and used them for larger unauthorized transactions.

Case study of automation misuse: Card Testing Attack

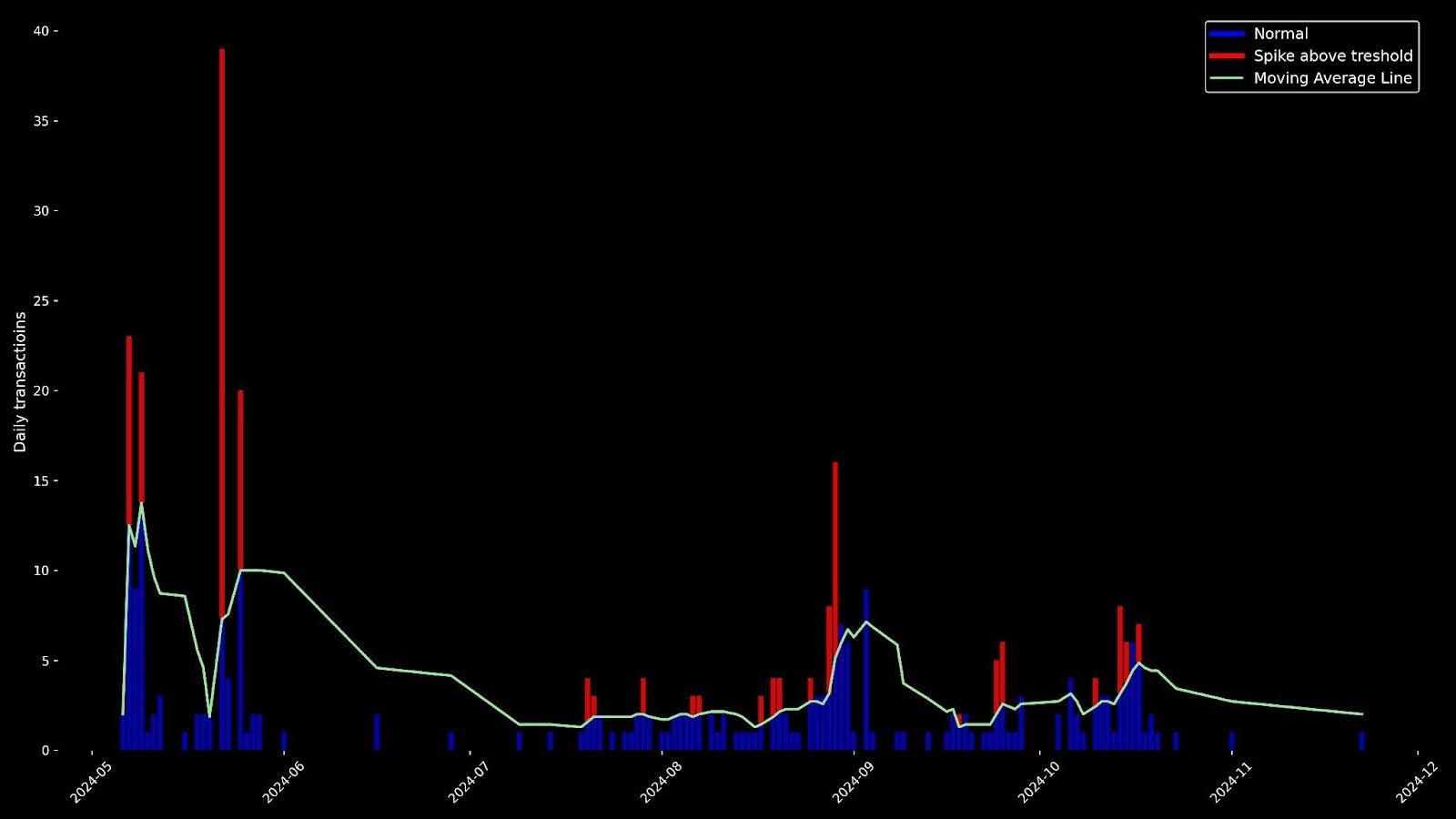

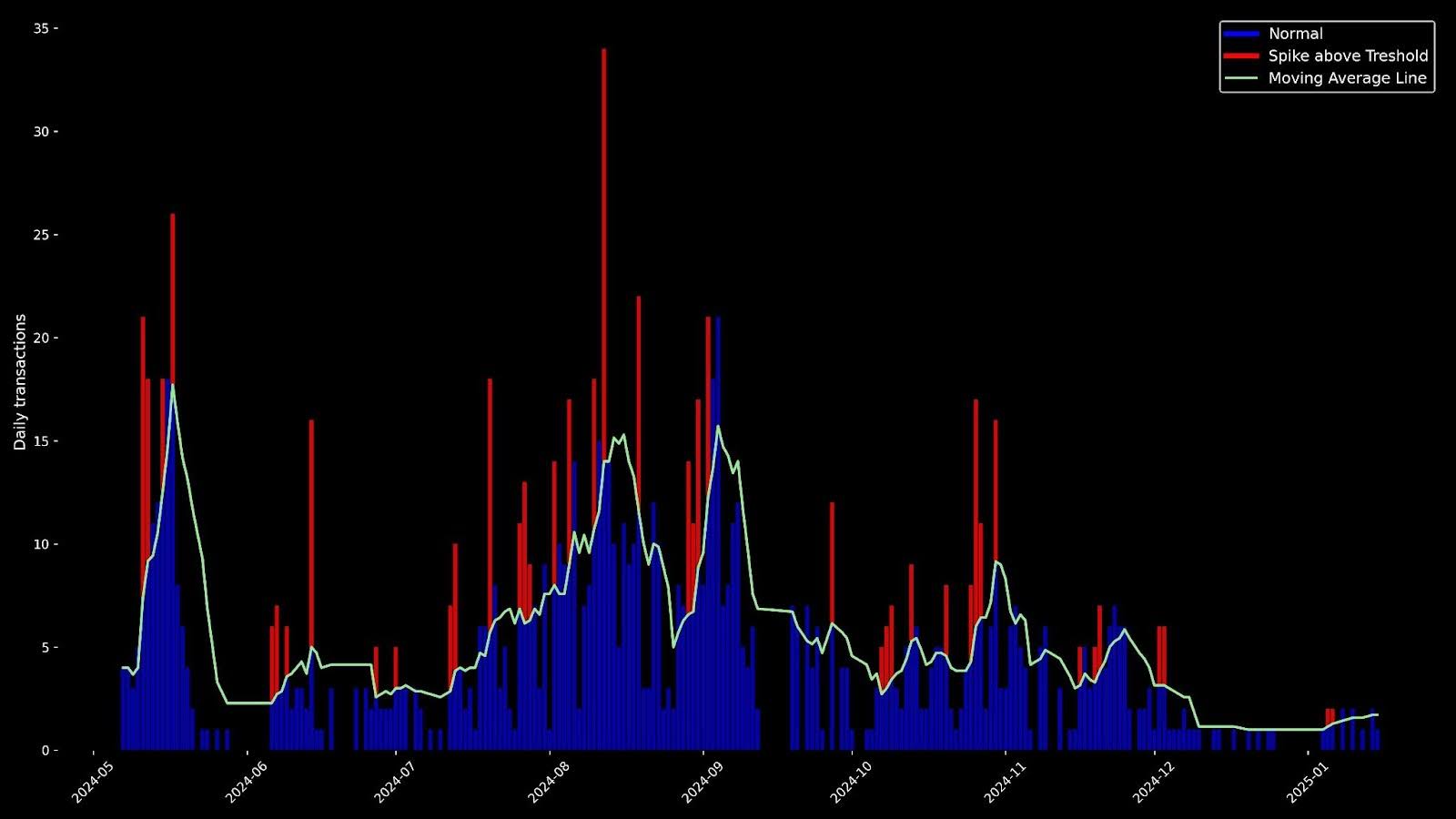

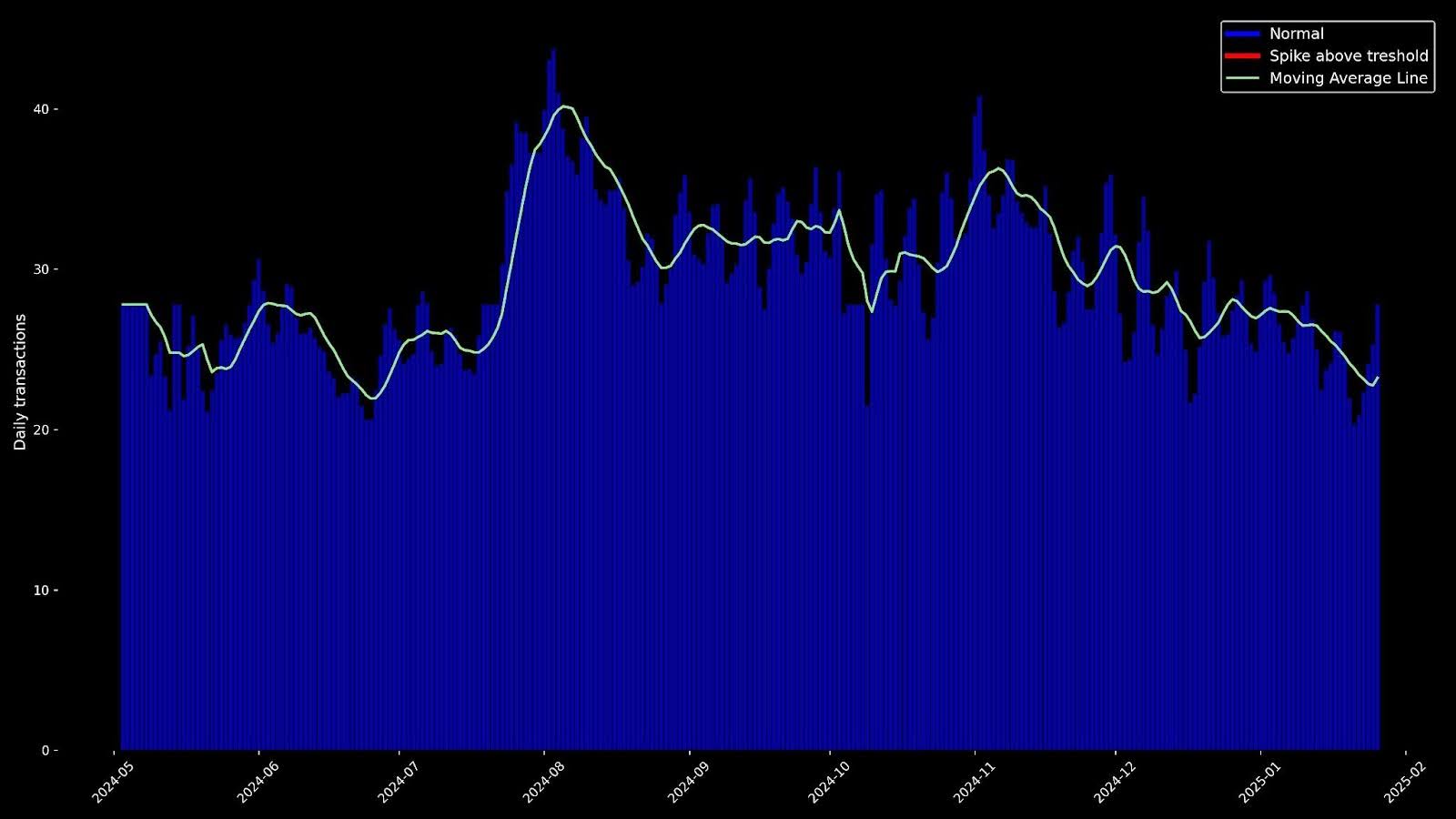

Starting in May 2024 and continuing through the year, Group-IB analysts observed unusual spikes in Three-Domain Secure (3DS) channel transactions across several banking clients (Figures 2 and 3). These spikes were concentrated around two specific e-ticket merchants, Taiwan High Speed Rail Corporation and CHAT.VERSAILLES, raising concerns about leaked card details, potential card testing, and bot-driven fraud attempts.

Figure 2. Observed 3DS transactions over time for CHAT.VERSAILLES.

Figure 3. Observed 3DS transactions over time for the Taiwan High Speed Rail Corporation.

Figure 4. A comparison trend of 3DS transactions over time for a merchant not targeted by bots.

These graphs illustrate significant and sudden surges in traffic originating from these specific merchant domains, often indicative of bot activity, as genuine human interactions typically do not exhibit such steep or abrupt increases.
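The kind of steep, abrupt surge described above can be illustrated with a simple outlier check. The following is a minimal sketch, not the method used in the analysis: it assumes daily transaction counts per merchant are available, and the sample numbers, the function name `find_spikes`, and the threshold are all hypothetical.

```python
from statistics import median

def find_spikes(daily_counts, threshold=5.0):
    """Return indices of days whose count is far above the typical level.

    Uses a robust z-score built on the median and the median absolute
    deviation (MAD), so the spikes themselves do not distort the baseline.
    """
    base = median(daily_counts)
    mad = median([abs(c - base) for c in daily_counts])
    if mad == 0:
        mad = 1.0  # avoid division by zero on a perfectly flat series
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score
    return [i for i, c in enumerate(daily_counts)
            if 0.6745 * (c - base) / mad > threshold]

# Hypothetical daily 3DS transaction counts for one merchant
counts = [12, 15, 11, 14, 13, 240, 310, 12, 16, 13]
spike_days = find_spikes(counts)
print(spike_days)  # indices of the two surge days
```

A median-based baseline is used here because a plain mean and standard deviation would be inflated by the very spikes the check is trying to find.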

Compromised Cards Analysis

From the very first analysis, it was evident that these transactions weren’t made by legitimate cardholders. Further analysis with Group-IB’s Threat Intelligence (TI) confirmed that some of the cards had been leaked, reinforcing suspicions of fraudulent activity.

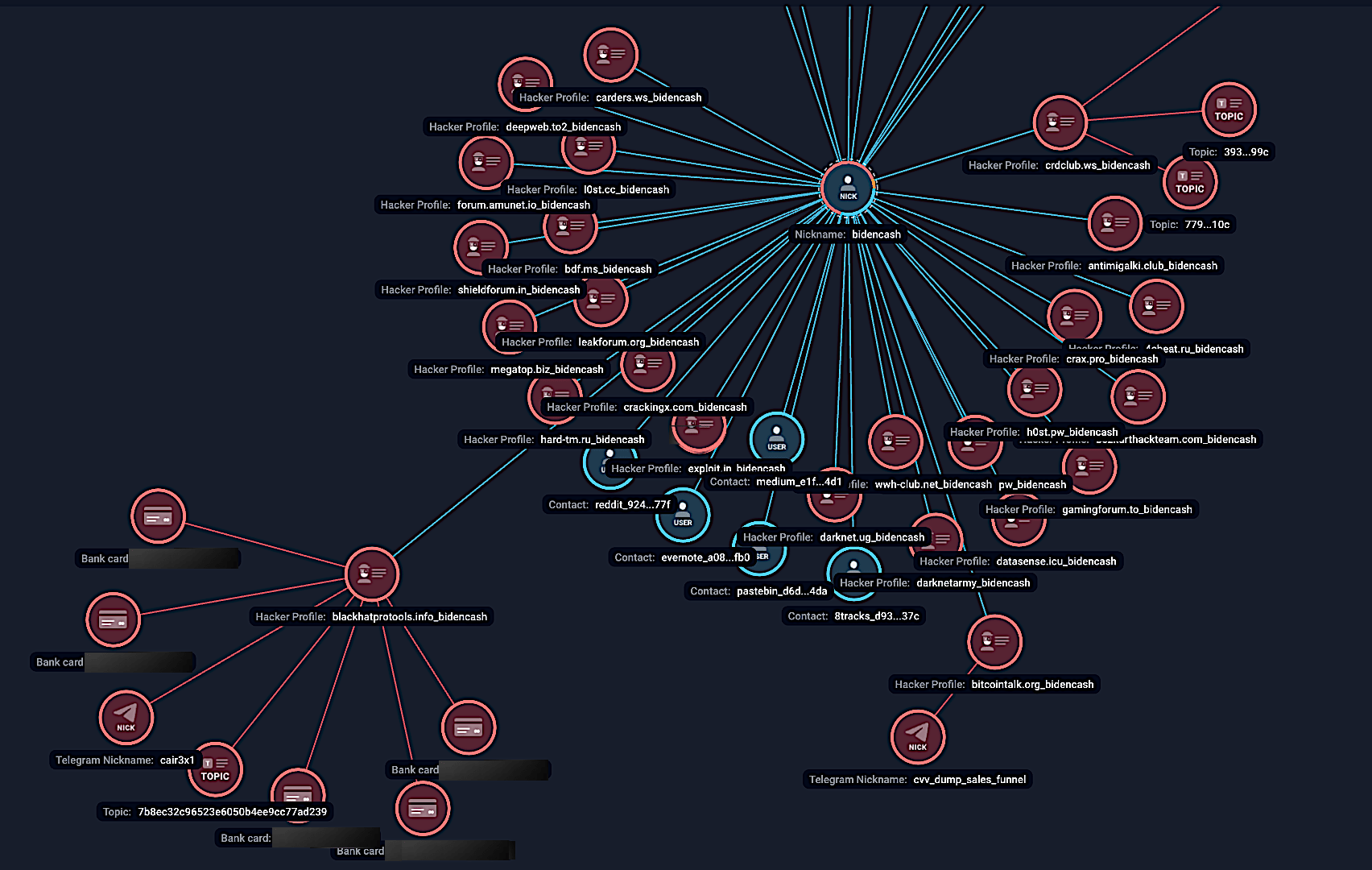

According to Threat Intelligence, the majority of cards exploited by card testing bots were initially identified as compromised in 2022, with additional exposures occurring throughout 2023 and beyond. The primary threat actor behind these breaches is believed to be “BidenCash,” which has been linked to domains tagged with “bidencash” and to hacker profiles that reference terms such as “carding.pw_bidencash.” This assessment aligns with previous reports from other organisations, including Visa, which reported that BidenCash has dumped massive collections of stolen card details since 2022. These compromised datasets were still circulating across the darknet and underground forums as of January 2025, posing an ongoing threat to financial institutions and cardholders alike.

Figure 5. Group-IB’s Graph Network Analysis mapping the cards used by card testing bots, and connections to BidenCash.

Compromised Cards Sources:

- Data Breaches: Attacker gains access to card information from a compromised database.

- Phishing: Attacker deceives users into providing card details via fake websites or emails.

- Skimming: Attacker uses devices to capture card information during legitimate transactions.

- Malware: Cybercriminals infect mobile and desktop devices with hidden malware that surreptitiously captures and transmits card details, enabling unauthorized transactions or resale on underground markets.

- Malware-infected ATMs/POS devices: Hacked and infected ATMs and point-of-sale (POS) machines can copy card payment details and save them to hacker databases.

Utilized Merchants

Targeted merchants typically include low-security websites with minimal fraud detection and e-commerce platforms with high transaction volumes, allowing fraudulent transactions to blend in.

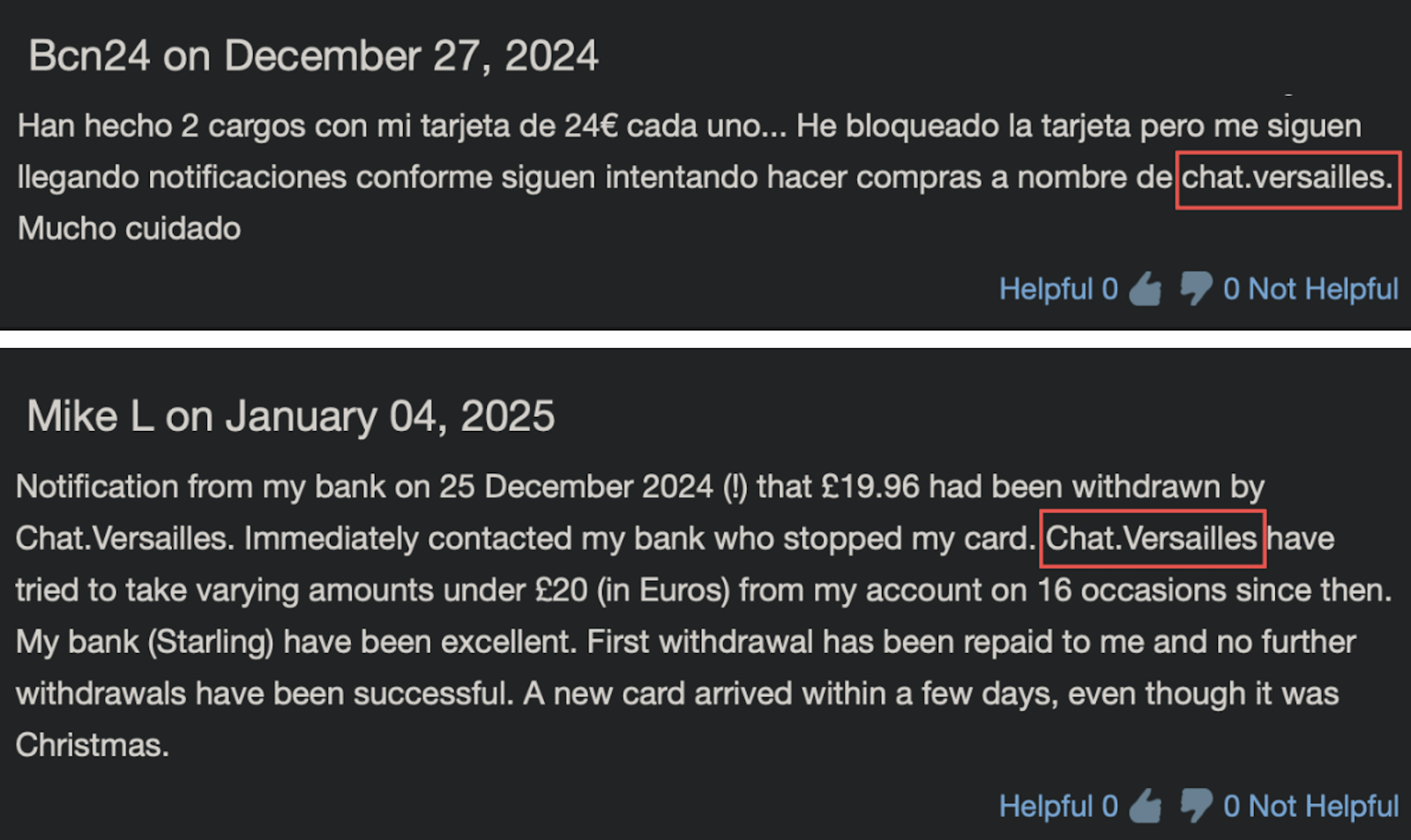

As of the publishing of this blog, illicit transactions from CHAT.VERSAILLES continue to be reported worldwide.

Figure 6. Screenshot of users reporting fraudulent charges relating to CHAT.VERSAILLES on their credit cards. (Source: scamcharge.com)

Additionally, multiple merchant reports for certain banks were published in May, aligning with Group-IB’s detection of unusual transaction spikes in May 2024 (see Figures 2 and 3) and providing further evidence of the global scale of fraudulent bot operations.

Threat Actors Infrastructure Analysis

Fraudsters commonly leverage multiple proxies or hosting servers to circumvent fraud detection and blocking measures. Traffic originating from standard hosting environments is relatively straightforward to identify, whereas bots routed through residential proxies pose a significantly greater challenge for fraud prevention systems.

The bot targeting Chat.Versailles exhibited greater sophistication by utilizing U.S. residential IP proxies from providers like Comcast, Spectrum, T-Mobile and others, allowing it to blend in with legitimate user traffic more effectively. In contrast, the bot targeting Taiwan High Speed Rail Corporation relied on hosting IPs (primarily from the ISP G-Core Labs SA) that security systems can more easily flag as suspicious, given their typical association with data centers rather than residential networks.

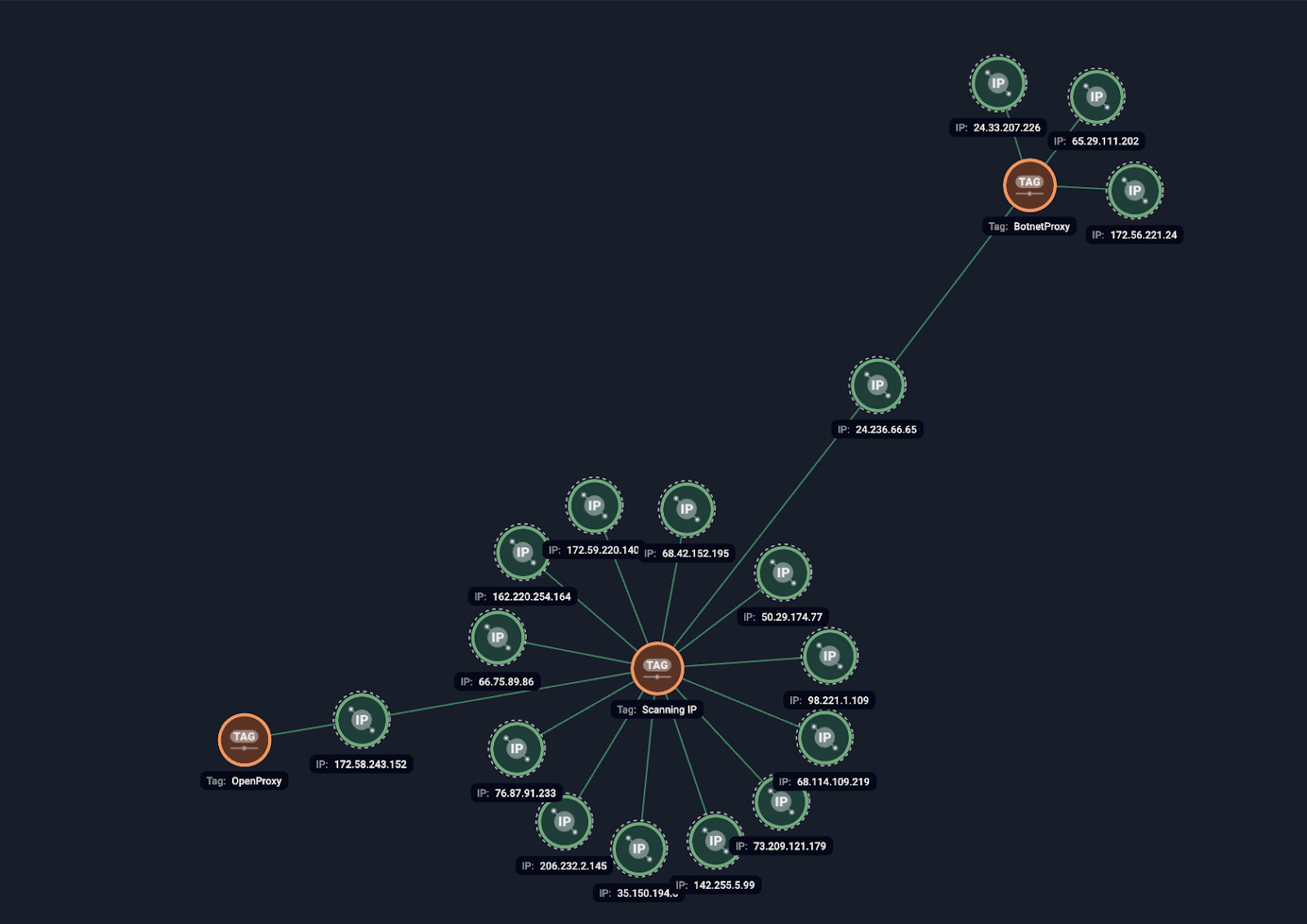

Figure 7. Group-IB’s Graph Network Analysis detecting recurring fraudulent and abusive behavior from the same IP addresses.

Group-IB’s Threat Intelligence system uncovered recurring fraudulent and abusive behavior from the same IP addresses, flagged as “Scanning IPs,” “BotnetProxy,” and “Open Proxy” (Figure 7), all linked to card testing attacks in 2024. These IPs also engaged in brute force attacks and malicious web scraping, highlighting their involvement in a range of fraudulent activities.

The Technology and Tactics Behind the Automation

In order to systematically test large volumes of stolen card data, cybercriminals frequently rely on automation frameworks like Selenium, WebDriver, or similar headless browser tools that can closely mimic legitimate user interactions.

Selenium is an open-source test automation framework that controls web browsers to verify the behavior of websites, while WebDriver (part of the Selenium suite) is a standardized API enabling direct communication with various browser engines.

These automation frameworks and tools were originally developed for legitimate software testing and continuous integration practices, allowing quality assurance (QA) engineers to simulate real user interactions (such as clicks, form submissions, and navigation) without manual intervention.

Unfortunately, cybercriminals now exploit the same capabilities to automate large-scale validation of stolen card data and other fraud schemes. By scripting automated browsers, attackers can generate realistic, human-like traffic that bypasses simple bot-detection techniques. In ad fraud operations like the infamous “Methbot”, fraudsters programmed automated browsers to mimic user engagement on websites and ads, deceiving advertising networks and raking in millions in fraudulent revenue. Today, similar automation frameworks underpin credential stuffing attacks, card testing schemes, and other forms of fraud, scaling malicious activities to industrial levels while evading less sophisticated detection systems.

And as AI agents are developed for legitimate automation, productivity and efficiency, there is a growing concern that they could be exploited by cybercriminals for malicious activities as well.

Group-IB Detection & Mitigation:

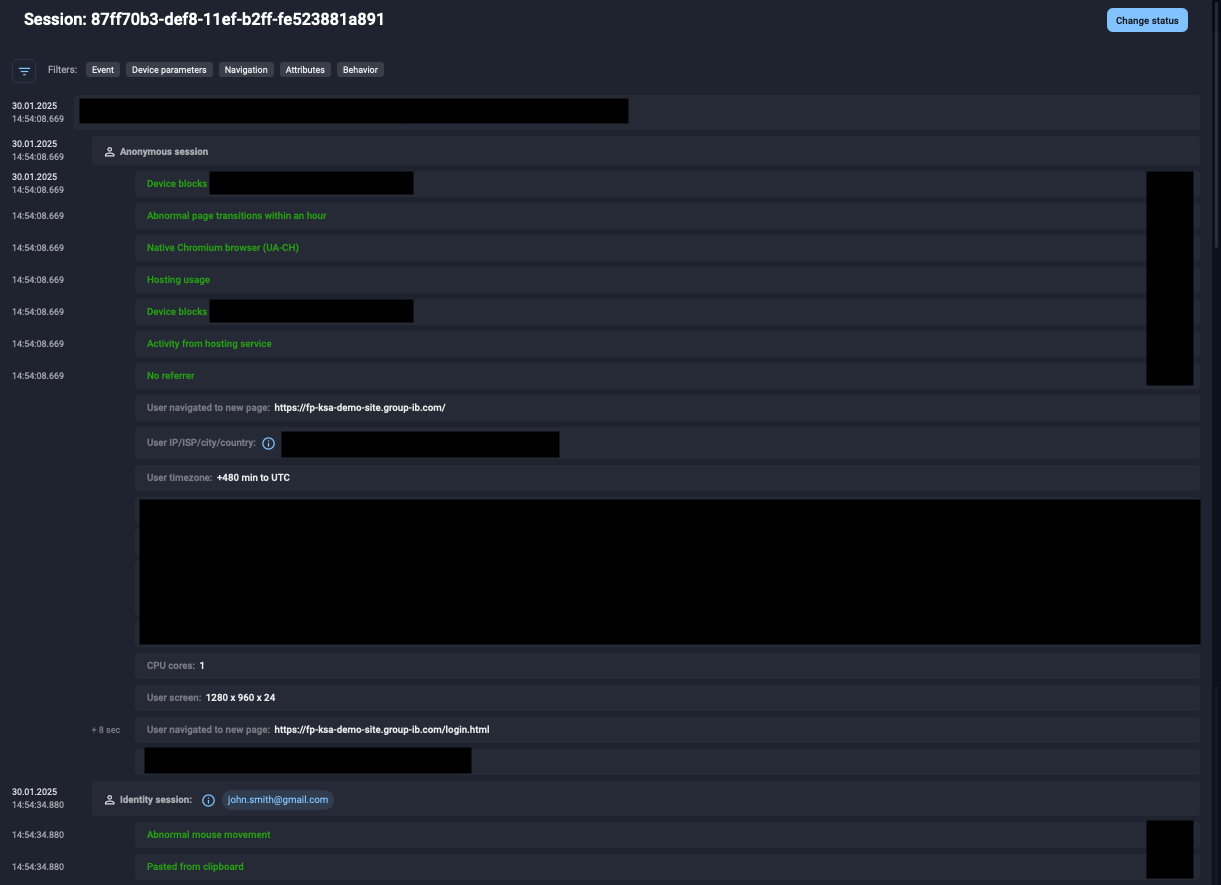

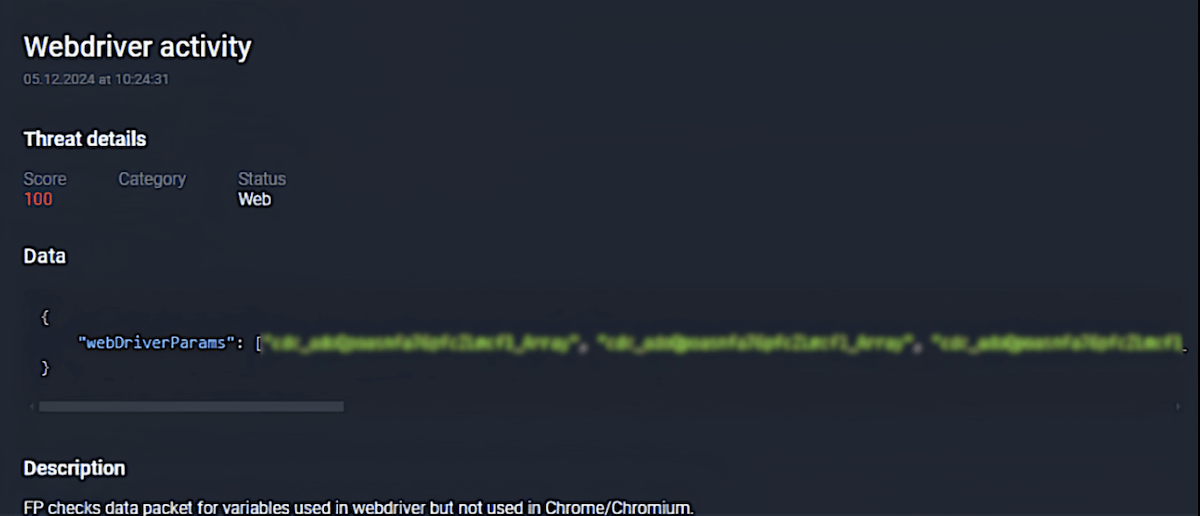

Throughout 2024 to date, the Group-IB Fraud Protection system has raised real-time alerts showing that 98% of transactions from the Taiwan High Speed Rail Corporation originated from devices using automation frameworks (WebDriver) together with hosting/datacenter IP addresses, leading the system to automatically classify these sessions as “Transaction via bot”.

Figure 8. An example of webdriver detection by Group-IB’s Fraud Protection system.

For Chat.Versailles, 95% of transactions triggered real-time alerts related to atypical device capabilities that indicate the use of suspicious environments.

The key indicator is that these transactions originate from a headless Chrome/Chromium setup in a container or virtual machine (VM), combined with IP addresses routed through a US-based proxy. As a result, the Group-IB Fraud Protection system flags these sessions as “Automation methods used to perform transactions”.
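The two signal combinations described above can be expressed as a small rule set. This is an illustrative sketch only and does not represent Group-IB’s actual detection logic: the `Session` fields, the IP type labels, and the fallback label are hypothetical, while the two alert names are taken from the text.

```python
from dataclasses import dataclass

@dataclass
class Session:
    webdriver_detected: bool  # automation framework flag seen in the browser
    headless_browser: bool    # headless Chrome/Chromium fingerprint
    virtualized: bool         # container or virtual machine environment
    ip_type: str              # hypothetical labels: "residential", "hosting", "proxy"

def classify(session: Session) -> str:
    """Map a session's signals to an alert label (simplified assumption)."""
    # Automation framework plus a hosting/datacenter IP
    if session.webdriver_detected and session.ip_type == "hosting":
        return "Transaction via bot"
    # Headless browser in a container/VM, routed through a proxy
    if session.headless_browser and session.virtualized and session.ip_type == "proxy":
        return "Automation methods used to perform transactions"
    return "No automation indicators"

print(classify(Session(True, False, False, "hosting")))
print(classify(Session(False, True, True, "proxy")))
```

A production system would weigh many more signals and score them rather than rely on two hard rules; the sketch only shows how individually weak indicators become meaningful in combination.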

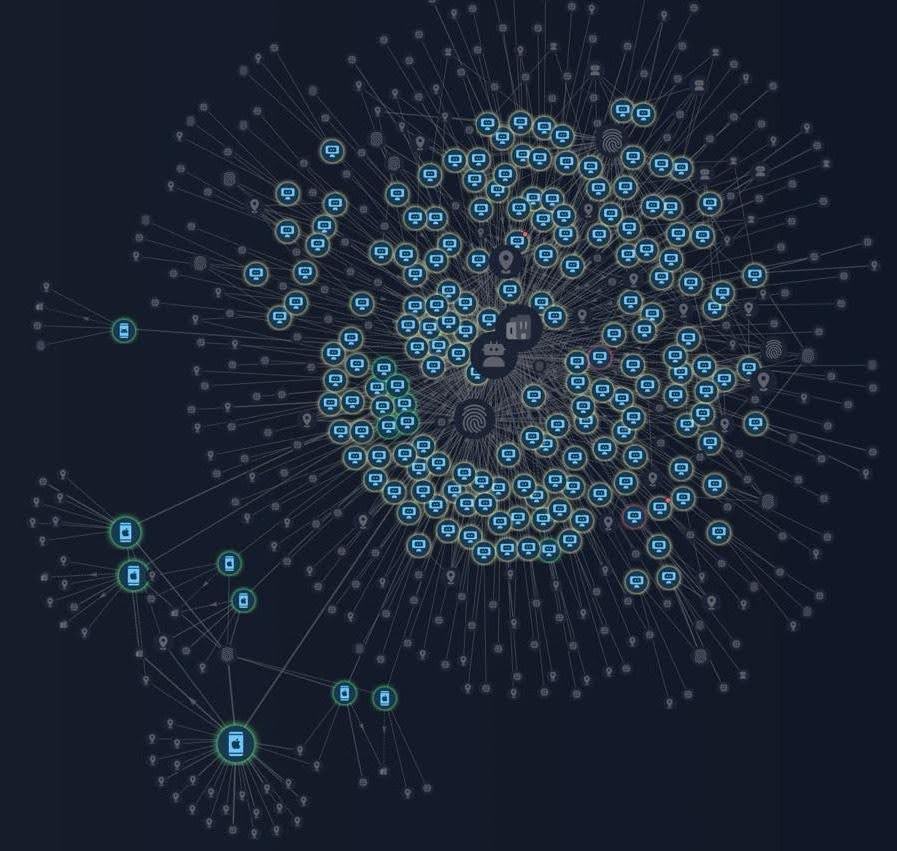

Figure 9. Group-IB’s Graph Network Analysis mapping a card testing attack, where fraudsters validate stolen credit card details through automated methods by connecting compromised devices and IP addresses

Group-IB’s Fraud Protection demonstrated a 96% detection rate in real time, flagging and intercepting thousands of fraudulent transactions at targeted merchants. Most attempts were thwarted by 3D-Secure protocols triggering OTP validations together with Group-IB’s automated alerts, leading issuing banks to decline the transactions. This seamless integration of 3D-Secure with Group-IB’s Fraud Protection technology provides a robust defense against automated fraud attempts in online banking environments.

Cyber Fraud Risks of a New Trend: AI Agents

Modern automation tools and AI agent models are now capable of using dedicated web browsers to book travel, make reservations, and shop online, much like a human would. While the majority of AI agent technology providers have implemented safeguards and supervision requirements, the agents’ ability to take real actions raises security concerns, particularly if malicious actors attempt to bypass these protections for fraudulent automated activities. As AI agents become more capable, cybersecurity measures must keep pace, ensuring they can detect illegitimate usage and block suspicious behaviors and abuse attempts.

These AI-powered capabilities present new risks in the banking industry:

Card Testing and Fraudulent Transactions

- Automated AI agents can quickly iterate through stolen card data, submitting rapid-fire micro-transactions to validate card details.

- AI agents have human-like capabilities that help them evade detection by mimicking genuine user interactions (e.g., random mouse movements, real-time form completion).

Mule Account Creation

- AI tools can streamline the creation of fraudulent accounts for money laundering.

- By automatically providing synthetic or stolen identities, complete with credentials and AI-generated faces (see “Deepfake Fraud: How AI is Bypassing Biometric Security in Financial Institution”), criminals can create large networks of “mule” accounts across multiple platforms, making it more difficult for financial institutions to distinguish legitimate customers from fraudsters.

Brute Force and Credential Stuffing Attacks

- Cybercriminals use brute force and credential stuffing techniques to systematically exploit stolen usernames and passwords, aiming to gain unauthorized access to user accounts and systems.

Global Fraud Operations at Scale

- Decentralized, containerized AI agents enable continuous 24/7 operations from multiple accounts with VPN or proxy usage, concealing fraudsters’ true locations. Because advanced AI tools are now publicly available, criminals no longer need to develop sophisticated human-like bots from scratch, which significantly lowers their time and technology barriers. This allows fraud rings to coordinate large-scale card testing and synchronized account takeovers or mule account openings, leveraging AI’s capacity for rapid data processing and strategic adaptation.

Automated Account Takeover

- AI agents can take over bank accounts by automating sophisticated cyberattacks at scale. A fraudster could deploy an AI-powered bot that accesses a victim’s bank account, bypasses security measures by mimicking human behavior, and then transfers funds to mule accounts.

AI Agent Demonstration by Group-IB

As a proof of concept, Group-IB analysts used the latest publicly available AI agent technology and tasked it with accessing an account and performing a payment. The credentials and payment details were provided to the agent. The agent was able to complete the request without any intervention or additional guidance from the end user.

Disclaimer: This article is intended solely for educational and informational purposes. All demonstrations and examples described herein were conducted in controlled, authorized environments using test data only; no real user data or unauthorized actions are involved.

References to specific companies, services, AI tools, automation frameworks, ISPs, or merchants are provided to illustrate industry-wide trends and risks. Such mentions do not imply endorsement of illicit activities, security vulnerabilities in those entities, or deficiencies in their security measures. Neither the inclusion of an organization nor any part of this article is intended to defame, misrepresent, or harm the reputation of any entity.

We do not endorse or encourage the misuse of technology. Readers are responsible for complying with all applicable laws, regulations, and relevant third-party Terms of Service. The detection rates and technical indicators cited herein are derived from our internal performance metrics over a specified timeframe and may vary based on traffic patterns, system configurations, and other factors. All threat observations are based on Group-IB’s products and publicly available sources.

Any descriptions of methods or techniques are exclusively for research and awareness purposes; they do not constitute approval or encouragement of unlawful conduct. Our analysis aims to inform the cybersecurity community about how fraud may occur, thereby supporting the development of more robust protective measures and responsible innovation in AI security and fraud prevention.

Group-IB’s Fraud Protection solution managed to identify suspicious behaviour of the latest AI agent technology via the following indicators:

- Device blocks collection of certain hardware details

- Usage of native Chromium browser

- Abnormal number of CPU Cores (1)

- Unusual mouse movement

The above indicators are very unusual for the typical web user and may indicate automated behaviour of the tested AI agent technology.
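As a rough illustration of how the four indicators listed above might be combined, the sketch below counts how many of them a session exhibits. It is a simplified assumption, not Group-IB’s implementation: the session field names are hypothetical, and “unusual mouse movement” is approximated here as a near-perfectly straight cursor path, which is only one of many possible definitions.

```python
import math

def path_straightness(points):
    """Ratio of straight-line distance to travelled distance (1.0 = perfectly straight)."""
    travelled = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    if travelled == 0:
        return 1.0
    return math.dist(points[0], points[-1]) / travelled

def count_indicators(session):
    """Count how many of the four indicators are present in a session."""
    checks = [
        session["hardware_details_blocked"],              # device blocks hardware detail collection
        session["browser"] == "Chromium",                 # native Chromium browser
        session["cpu_cores"] <= 1,                        # abnormal CPU core count
        path_straightness(session["mouse_path"]) > 0.99,  # assumed threshold for unusual movement
    ]
    return sum(checks)

# Hypothetical sessions for illustration
agent_session = {
    "hardware_details_blocked": True,
    "browser": "Chromium",
    "cpu_cores": 1,
    "mouse_path": [(100, 100), (200, 150), (300, 200)],
}
human_session = {
    "hardware_details_blocked": False,
    "browser": "Chrome",
    "cpu_cores": 8,
    "mouse_path": [(100, 100), (160, 240), (210, 180), (300, 200)],
}
print(count_indicators(agent_session), count_indicators(human_session))
```

Any single indicator can occur on a legitimate device, so a count like this is better suited to raising a session’s risk score than to blocking it outright.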

Conclusion

With the continuous evolution of digital technology, AI-driven automation continues to revolutionize industries, unlocking new levels of efficiency and innovation. However, these same advancements present growing cybersecurity challenges. Cybercriminals are increasingly combining AI and traditional automation with deepfake technology to execute more deceptive and large-scale fraud schemes.

Such misuse of automation and AI tools enables fraudsters to automate card testing attacks, orchestrate advanced phishing campaigns, scale mule account opening operations, and run account takeover schemes with unprecedented realism. Group-IB’s Fraud Protection solution demonstrated a 96% real-time detection rate against the automation frameworks fraudsters used to perform card testing attacks on certain merchants’ web domains, successfully flagging and blocking a huge number of fraudulent bot transactions from over 10,000 compromised cards. As automated methods, AI agents, and other new AI solutions evolve, the potential for misuse grows, making it critical to develop robust safeguards against their exploitation.

Recommendations

AI-Driven Bot and Automation Detection: Deploy advanced analytics and behavioral profiling to identify patterns indicative of headless or automated browser usage (e.g., WebDriver, Selenium, PhantomJS). Closely monitor traffic from devices with atypical capabilities that indicate the use of suspicious environments, and apply advanced statistical methods to identify abnormal and outlier devices. Implement real-time detection systems, like Group-IB Fraud Protection, that flag suspicious transactions when automation frameworks are combined with hosting/datacenter IPs or proxies, ultimately detecting suspicious activities performed by AI agents or bots.

Proxy and Hosting Detection: Enhance detection mechanisms for proxy and hosting IPs to identify and assess the risks associated with web traffic from such addresses. Supplement them with behavioral analytics to spot potentially malicious residential proxies used for fraudulent activities.
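A hosting-range lookup can be sketched with Python’s standard `ipaddress` module. The example is a minimal illustration under stated assumptions: the CIDR blocks are reserved documentation ranges used as placeholders, and a real deployment would load hosting and proxy ranges from a maintained IP intelligence source.

```python
import ipaddress

# Placeholder ranges (reserved documentation networks), standing in for a
# real list of hosting/datacenter CIDR blocks. This list is hypothetical.
HOSTING_RANGES = [
    ipaddress.ip_network(cidr)
    for cidr in ("192.0.2.0/24", "198.51.100.0/24")
]

def ip_category(ip: str) -> str:
    """Return "hosting" if the address falls in a known hosting range."""
    addr = ipaddress.ip_address(ip)
    if any(addr in net for net in HOSTING_RANGES):
        return "hosting"
    return "unknown"

print(ip_category("192.0.2.15"))   # inside a listed range
print(ip_category("203.0.113.7"))  # not in any listed range
```

A range lookup of this kind only covers hosting IPs. Residential proxies use addresses that belong to ordinary consumer ISPs, which is why the recommendation pairs IP checks with behavioral analytics.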

Behavioral Analytics: Use machine learning to detect transaction anomalies, such as repetitive small charges, abnormal time-of-day spending, or mismatched card origin and merchant location. This helps spot unusual behavior like spikes in transactions in foreign currencies.

3D Secure: Implement 3D Secure on payment cards to add an extra authentication layer through OTPs. This allows real cardholders to spot unauthorized charges and block fraudulent attempts in real time.

Phishing Awareness Training: Provide targeted, role-based education on recognizing suspicious emails, links, and social engineering tactics. Conduct regular simulated phishing exercises to measure and enhance organizational resilience.

Secure ATMs/POS Devices: Keep ATM and POS software regularly updated with patches, and proactively inspect devices for physical tampering. Deploy remote monitoring and threat detection tools to quickly identify and respond to anomalies.

Monitor Data Breaches: Use specialized services to monitor public and dark web sources for compromised payment data. Investigate and remediate any breaches promptly, maintaining a clear incident response plan.

Merchant Security Standards: Apply strict security protocols (e.g., frequent patching, secure coding, bot detection) on merchant sites to block automated fraud. Proactively address known vulnerabilities and exploits to deter criminal activity.

Transaction Monitoring: Continuously track and analyze transactions in real time, flagging anomalies such as unusual spikes from certain merchants or spending patterns. Implement automated alerts to quickly detect and stop suspicious activities.

Rate Limiting: Restrict the number of transactions per IP or IP range (or device) within a given timeframe. This prevents mass automated attacks and card testing from a single source.
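One common way to implement such a restriction is a sliding-window counter keyed by IP address or device identifier. The sketch below is a minimal in-memory illustration; the class name, the limits, and the sample address are hypothetical, and a production system would typically keep the counters in a shared store.

```python
from collections import defaultdict, deque

class SlidingWindowLimiter:
    """Allow at most max_events per key within window_seconds."""

    def __init__(self, max_events, window_seconds):
        self.max_events = max_events
        self.window = window_seconds
        self.events = defaultdict(deque)  # key -> timestamps of allowed events

    def allow(self, key, now):
        q = self.events[key]
        # Drop timestamps that have fallen out of the window
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.max_events:
            return False  # limit reached: reject this transaction attempt
        q.append(now)
        return True

# At most 3 transaction attempts per IP per 60 seconds (hypothetical limits)
limiter = SlidingWindowLimiter(max_events=3, window_seconds=60)
results = [limiter.allow("203.0.113.7", t) for t in (0, 10, 20, 30, 70)]
print(results)  # [True, True, True, False, True]
```

Timestamps are passed in explicitly so the behaviour is easy to test; in practice `time.monotonic()` would supply them. Keying by device identifier as well as IP helps when a bot rotates through many proxy addresses.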

Small Transaction Monitoring: Pay attention to high volumes of small, uniform payments often used for card testing. Investigate repetitive micro-transactions to expose potential fraud campaigns.
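A simple starting point for this kind of monitoring is to count identical small charges per merchant. The following sketch assumes transactions are available as records with `merchant` and `amount` fields; the field names, thresholds, and sample data are all hypothetical.

```python
from collections import Counter

def flag_small_uniform(transactions, max_amount=2.00, min_count=5):
    """Return (merchant, amount) pairs with many identical small charges."""
    counts = Counter(
        (t["merchant"], t["amount"])
        for t in transactions
        if t["amount"] <= max_amount
    )
    return sorted(pair for pair, n in counts.items() if n >= min_count)

# Hypothetical data: six identical 1.00 charges at one merchant,
# mixed with ordinary activity at another
transactions = (
    [{"merchant": "MERCHANT_A", "card": f"card-{i}", "amount": 1.00} for i in range(6)]
    + [
        {"merchant": "MERCHANT_B", "card": "card-7", "amount": 1.50},
        {"merchant": "MERCHANT_B", "card": "card-8", "amount": 1.50},
        {"merchant": "MERCHANT_B", "card": "card-9", "amount": 80.00},
    ]
)
flagged = flag_small_uniform(transactions)
print(flagged)  # [('MERCHANT_A', 1.0)]
```

In practice the count would be taken over a time window and compared with each merchant’s normal volume, since some legitimate merchants routinely process many small identical payments.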

Dark Web Monitoring: Leverage specialized services to scan dark web forums and marketplaces for stolen card information. Swiftly respond to identified threats in collaboration with financial institutions.

Social Engineering Awareness: Train staff and customers to identify and resist tactics aimed at extracting OTP codes or personal details. Enforce robust verification for sensitive actions, such as adding cards to digital wallets or payment apps.