Group-IB Threat Intelligence Portal:

Group-IB customers can access our Threat Intelligence portal using the links below for more information about online investment scams, and the tactics and techniques of these syndicates, including:

- Exposing the AI Trading Scam: Tactics, Techniques, and Infrastructure

- Investment Scam Campaign Impersonating Forex Trading Platforms

Introduction

In recent years, fake investment platforms—fraudulent websites impersonating cryptocurrency or forex exchanges—have become the most common tactic for financially motivated threat actors to steal funds from victims. These scams often rely on social engineering to trick victims into transferring funds to attacker-controlled systems disguised as legitimate trading platforms.

Today, this type of fraud is no longer confined to any single country or region. In fact, it has become a cross-border threat throughout Asia, with organised groups frequently operating on an international scale.

For example, in August 2025, Vietnamese authorities arrested 20 individuals linked to the billion-dollar Paynet Coin crypto scam. They were charged with violating multi-level marketing regulations and misappropriating assets, according to reports by the Vietnamese media. While not directly tied to the campaign analysed in this blog, it highlights the vast scale and transnational reach of such scams.

Key discoveries in the blog

- Investment scams conducted through online platforms are rapidly increasing in Vietnam. Threat actor groups often use fake companies, mule accounts, and even stolen identity documents purchased from underground markets to receive and move victim funds. These tactics allow them to bypass weak Know Your Customer (KYC) or Know Your Business (KYB) controls before stricter regulations are enforced.

- This research distills its findings into a Victim Manipulation Flow and an Operational Hierarchy and Multi-Actor graph, based on published cases. These models serve as a foundation for understanding and analyzing such campaigns on a broader scale.

- Shared backend artifacts (such as API calls, SSL certificates) are common across scam sites. When these artifacts appear across multiple threat actors, they can serve as valuable indicators for linking campaigns and uncovering centralized infrastructure.

- There is a growing trend in investment scams to use chatbots to screen targets and guide deposits or withdrawals. For analysts, examining chatbot responses can reveal payment details, configuration data, registered service accounts, and linguistic patterns that support attribution.

- Scam platforms often include chat simulators to stage fake conversations and admin panels for backend control, providing insight into how operators manage victims and infrastructure. Analysts should treat these as high-value indicators, as recurring interfaces, code, and IP addresses can reveal shared centralised backends that connect multiple scam domains.

Who may find this blog interesting:

- Cybersecurity analysts and corporate security teams

- Threat intelligence specialists

- Cyber investigators

- Computer Emergency Response Teams (CERT)

- Law enforcement investigators

- Fraud Analysts

- Cyber police forces

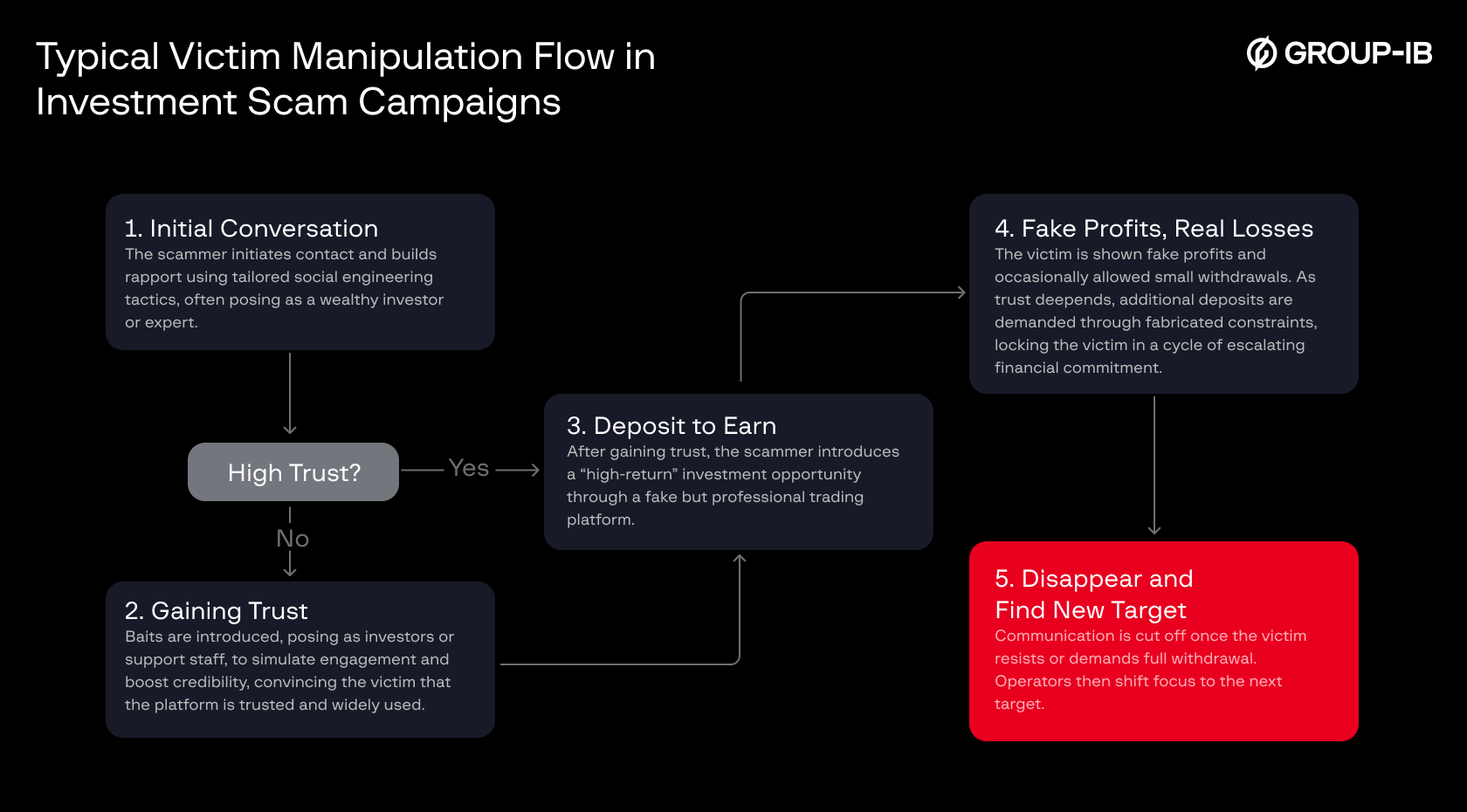

Victim Manipulation Flow

Following recent takedowns of investment-fraud rings in Vietnam that were documented by police investigations and official government reports, we outline the case descriptions in the form of a staged victim-manipulation flow, reflecting tactics observed in the wild, rather than theory.

Figure 1. Victim manipulation flow from initial contact to fund extraction.

- Initial Conversation: The scammer makes first contact through social media platforms (such as Zalo, Facebook, or TikTok), or messaging apps (including Facebook Messenger, Telegram, and WhatsApp), presenting themselves as a successful investor or financial expert. Using tailored social engineering tactics and forged personal details, they work to build trust and spark the victim’s interest in investing.

- Gaining Trust: If the victim hesitates or shows low initial trust, the scammer introduces additional “baits”, such as fake fellow investors, friends, or support staff. These personas engage directly with the victim to simulate real activity and reinforce the illusion of a legitimate, widely used platform.

- Deposit to Earn: Once sufficient trust is established, the scammer presents a high-return investment opportunity. The platform, often a clone or a convincingly fabricated interface, promises guaranteed profits with minimal risk, persuading the victim to make an initial deposit.

- Fake Profits, Real Losses: The platform displays fabricated profits to convince the victim that their investment is growing. In some cases, the victim is even allowed to make small initial withdrawals to build confidence. Once the trust deepens, scammers pressure the victim to make larger deposits, often citing fake excuses.

- Vanishing and Moving On: When the victim attempts to withdraw their full balance or expresses any hints of doubt, the scammer abruptly cuts off all communication. The scammer then abandons the victim and moves on to a new target, restarting the cycle once more.

This model represents a typical victim manipulation flow consistently observed across investment scam campaigns. While the core structure is not new, it remains highly effective, continually evolving over the years through more sophisticated social engineering tactics and increasingly polished scam platforms.

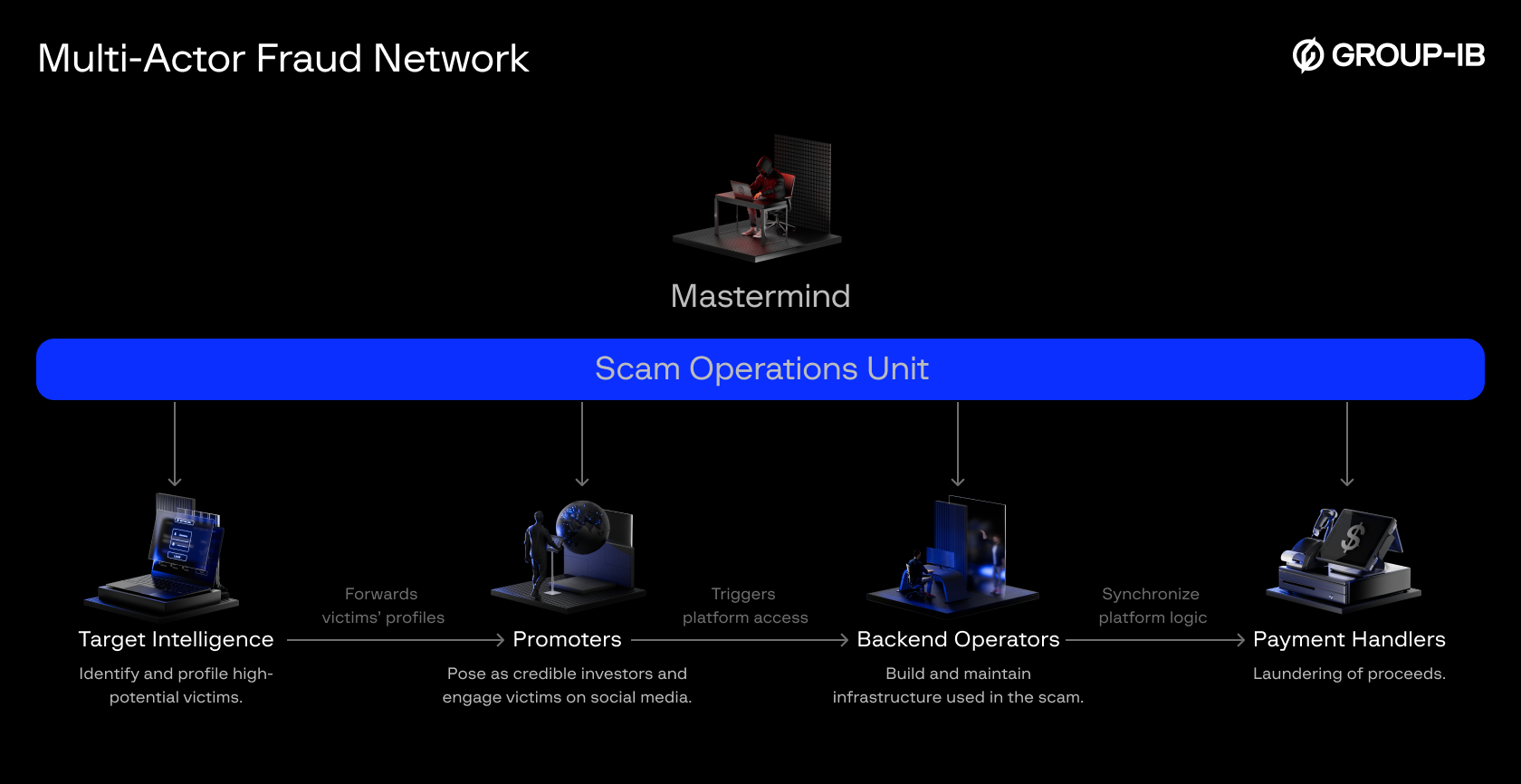

Unmasking the Machinery Behind Fake Trading Platforms

Behind the polished interface of a fake trading platform is a highly organized operation with defined roles, structured workflows, and cross-functional teams. Contrary to the common assumption of a lone scammer acting independently, our findings suggest a distributed model involving multiple actors working together. From profiling targets and building technical infrastructure to managing payment channels and posing as fake investors, each participant within the scam machinery plays a distinct part in maintaining the illusion and extracting funds from victims.

Based on infrastructure analysis, behavioral observations, and recurring patterns across multiple campaigns, we propose the following role segmentation for the purposes of this research:

Figure 2. An organization chart depicting a Multi-Actor Fraud Network.

Mastermind

At the top of this structure is a mastermind, or central operator, who oversees the entire fraud ecosystem. The mastermind maintains operational control, manages backend infrastructure, monitors financial flows, and ultimately profits from the scheme.

Beneath the mastermind are subordinate teams, each with clearly defined roles and responsibilities:

Target Intelligence

This role focuses on acquiring leaked or stolen personal information from the internet or the Dark Web, such as datasets that include names, contact information, financial indicators, or digital behavior profiles. Their task is to identify high-potential targets, often individuals with signs of wealth, vulnerability, or investment interest, and pass them along to the next layer.

Promoters

Promoters serve as the visible face of the scam. Their role is to create convincing personas on social media, posing as successful entrepreneurs, crypto investors, or well-connected insiders. They engage victims through private messages, sustaining long-term conversations to build trust.

At times, they introduce additional personas into the chat by pivoting to the role of successful participants, advisors, or observers, to simulate group validation and reinforce the scam narrative. These roles are fluid and adapt based on the victim’s personality and level of skepticism. Common personas include:

- Successful “participants” who share fabricated stories of profits and success to entice the victim.

- Developers or admins of the platform who provide technical instructions.

- Gatekeepers that control access, making the victim feel privileged to participate.

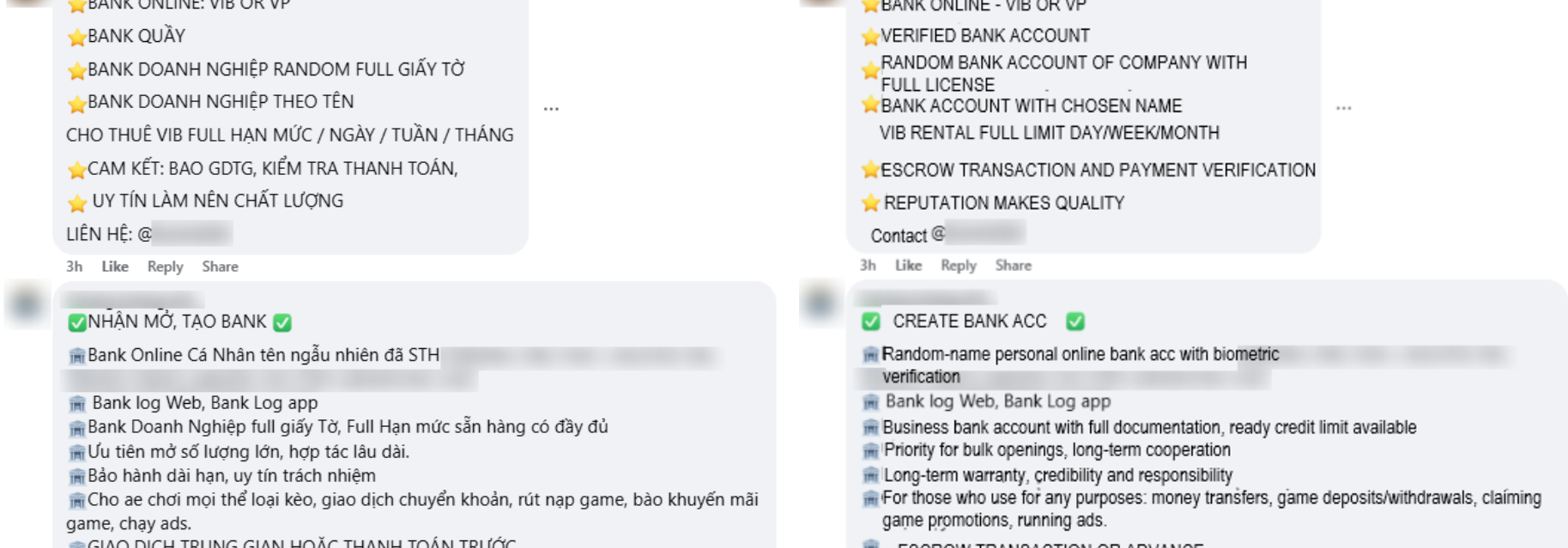

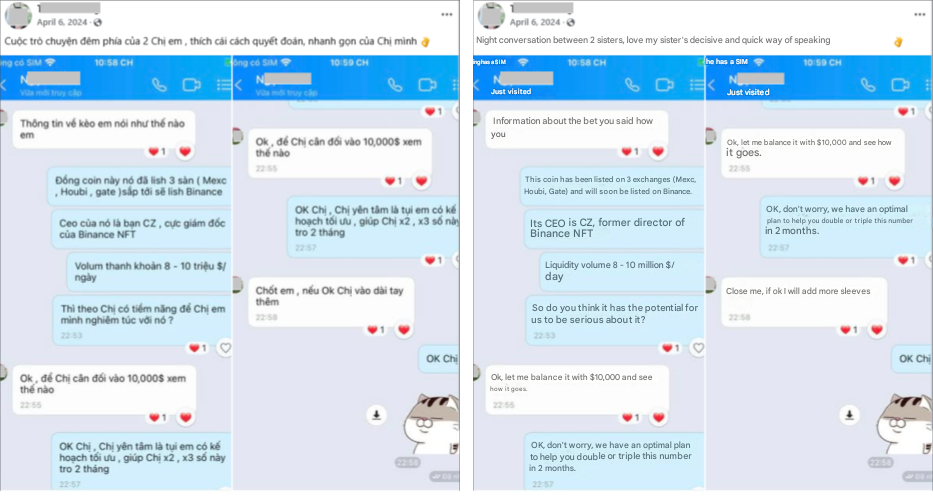

Figure 3. A screenshot of a manipulative messaging strategy in Vietnamese (left) and English translation (right) observed during the onboarding of a scam campaign, captured from a threat actor’s Facebook post.

Based on behavioural and language analysis, we believe that some promoters may be using chat generator tools to create fabricated conversations. These simulated dialogues often depict fake successful investments, customer support interactions, or glowing testimonials. They are often shared publicly on social media without context or verification, serving as deceptive social proof to entice and mislead potential victims into falling for the scheme.

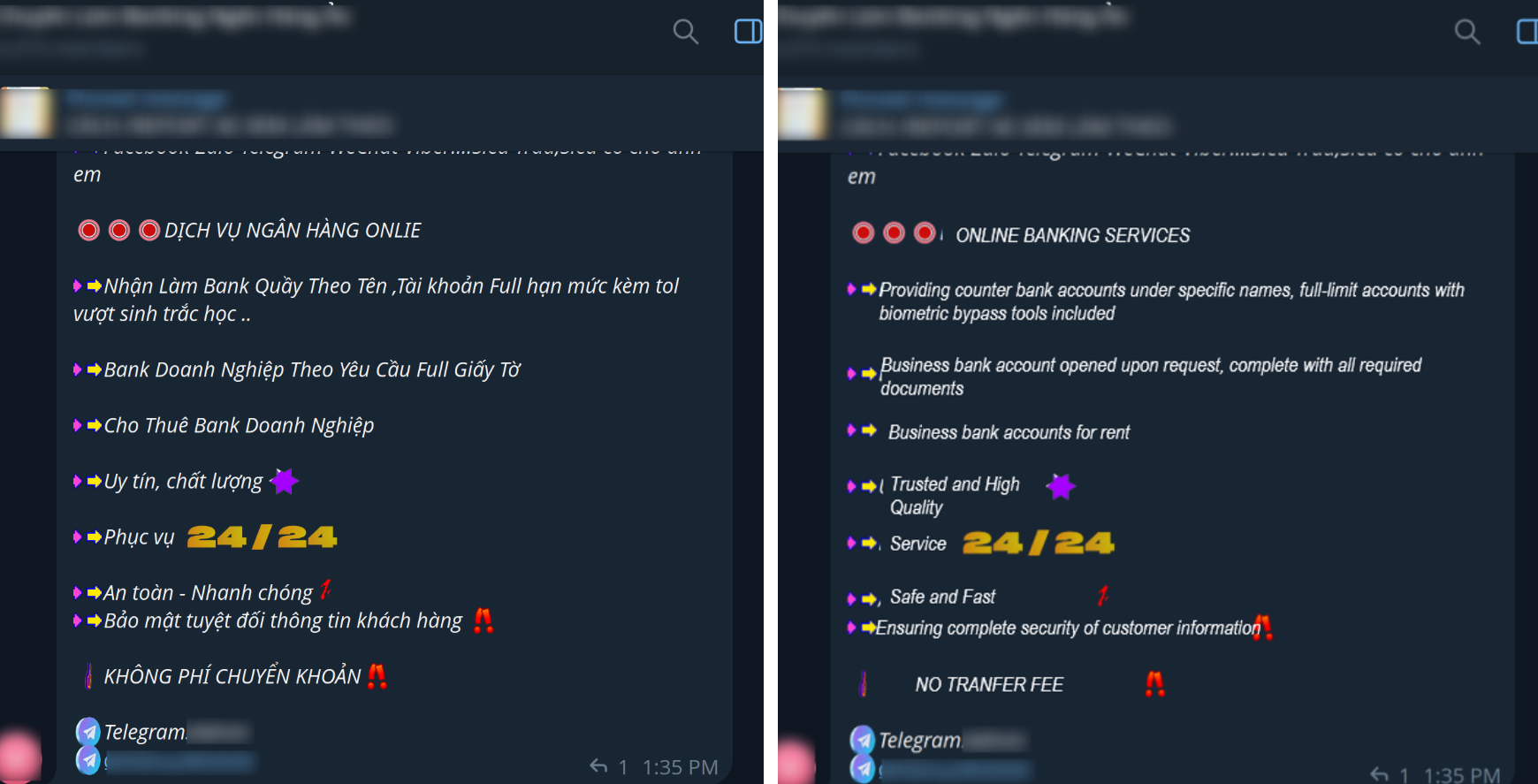

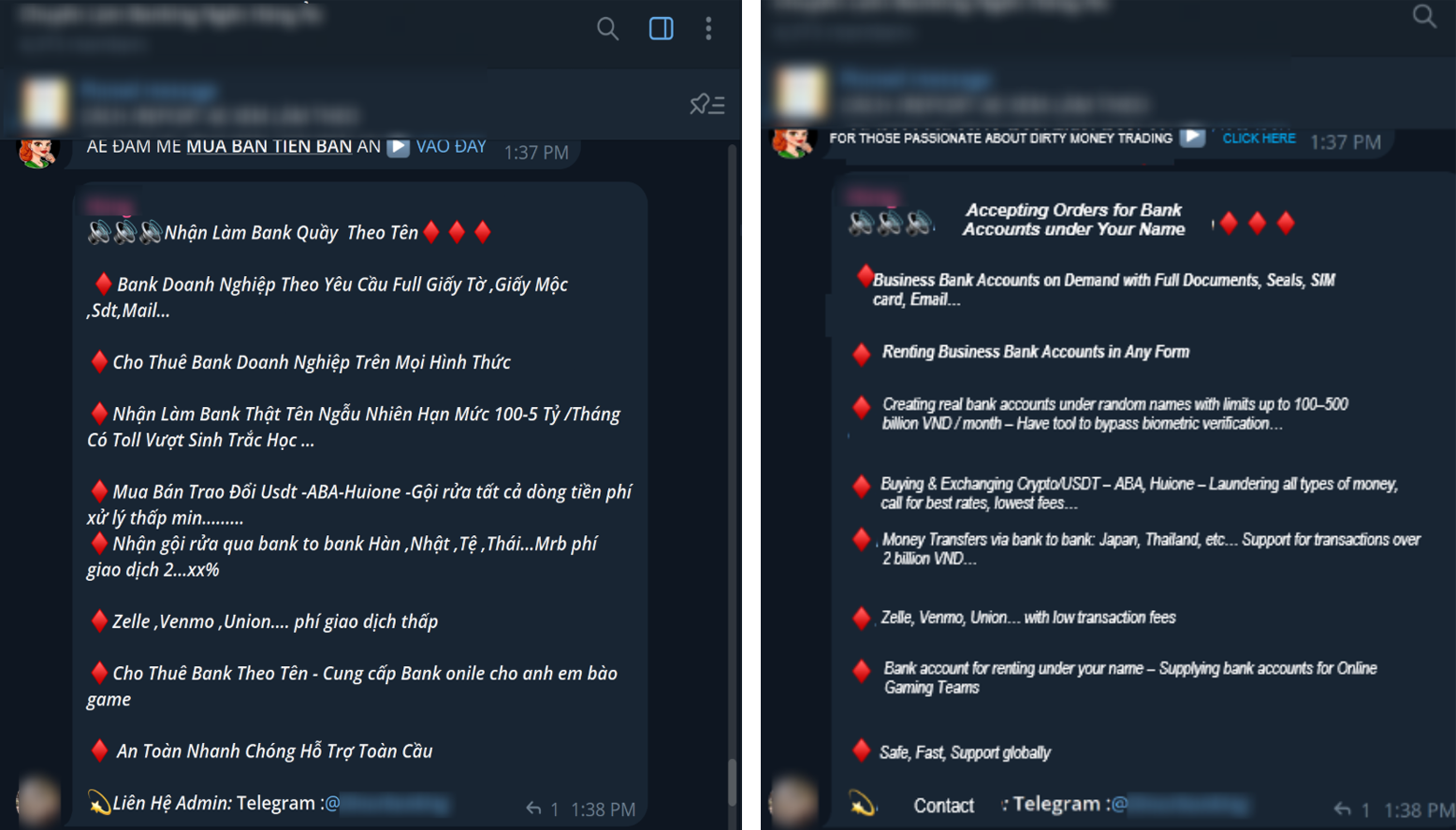

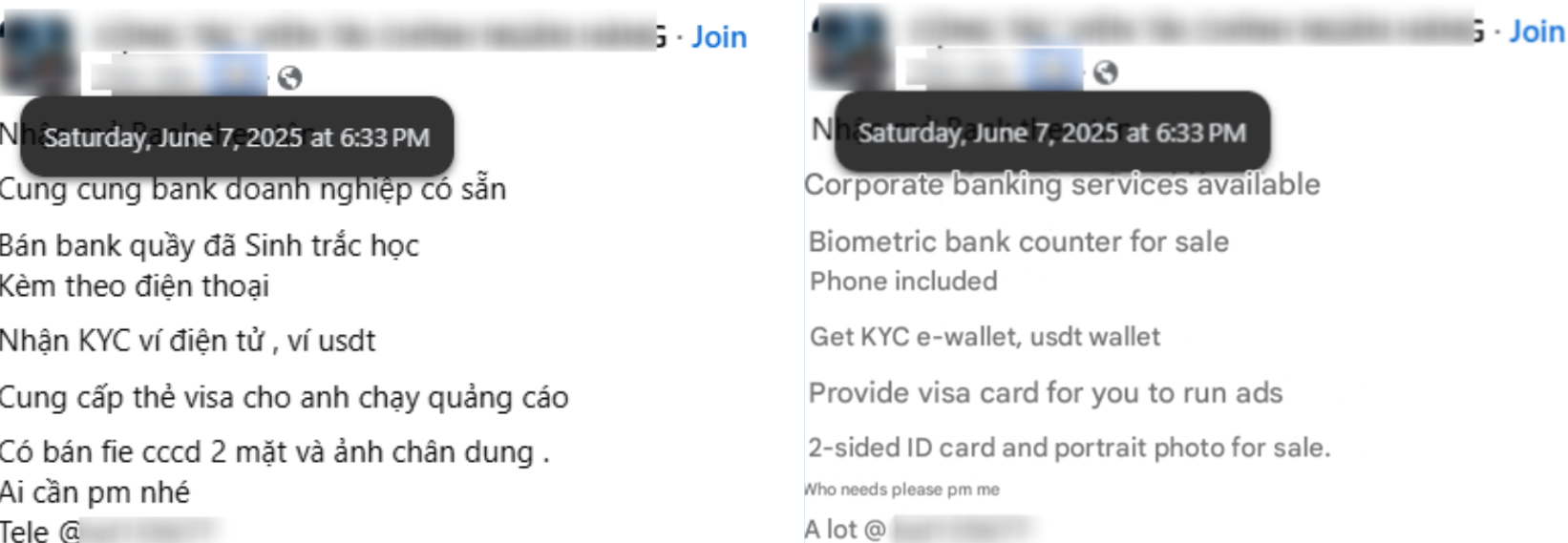

Payment Handlers

The role of payment handlers is to establish and manage the bank accounts or digital wallets used to collect funds from victims. To achieve this, they may register fake or dormant companies and exploit weaknesses in Know Your Business (KYB) and Know Your Customer (KYC) verification processes.

Under Circular 17/2024/TT-NHNN issued by the State Bank of Vietnam, which took effect on 1 July 2025, corporate payment and checking accounts face stricter requirements. Specifically, these accounts are only permitted to process withdrawals or electronic transactions once the legal representative’s identification documents and biometric information have been verified.

In anticipation of these tighter controls, scam operators began actively seeking workarounds. Online forums, Telegram groups, and social media platforms now host a thriving black market for both corporate and personal bank accounts, as well as stolen identity data. These illicit offerings often include forged business licenses, falsified legal documents, ID photos, stolen citizen ID cards, verified e-wallets, biometric KYC bypass services, and even face-swap technology. Such tools enable fraudsters to open fake or rented accounts at scale, giving their operations the appearance of legitimacy while continuing to deceive and exploit victims.

Backend Operators

Backend operators build and maintain the infrastructure used in the scam. This includes designing the fake investment platforms, managing the backend logic, and integrating tools such as chatbot widgets, invitation code systems, and fake dashboards that display fabricated profits. They also manage the onboarding process, ensuring that only selected victims can access the platform, often through a valid referral code provided by the promoter.

Together, these roles form a resilient and scalable fraud architecture in which functions can be replaced, updated, or relocated independently. This structure enables mass victimization across multiple countries and languages, and complicates investigation and takedown efforts by isolating exposure points from the central operator.

Technical Infrastructure & Toolkit Reuse

While social engineering initiates the scam funnel, the underlying infrastructure is what sustains it. Our analysis reveals that these campaigns do not depend on isolated landing pages or throwaway sites; instead, they are thin fronts on a shared backend. For analysts, the goal is not only to document a single instance but to recognize recurring components and to know how to capture and pivot from them. The following sections provide structured guidance on how these artifacts can be identified and leveraged for infrastructure correlation. The actors responsible for creating, operating, and managing this infrastructure are those defined under the Backend Operators role (see Figure 2, Multi-Actor Fraud Network).

Scam entry points

The term “entry point” refers to the very first stage of a scam campaign, where potential victims are funneled into a scam scheme. This step is rarely random: victims are often pre-selected by the scam’s Target Intelligence role (see Figure 2, Multi-Actor Fraud Network), which filters individuals based on leaked or purchased data and tailors outreach accordingly. This ensures that only promising targets are directed toward the platform.

To further protect the infrastructure and reduce exposure to investigators, scam platforms rarely reveal their full content at the outset. Instead, the initial page typically presents a login or registration screen gated by an invitation or referral code. These gates serve a dual purpose:

- They restrict open reconnaissance by preventing casual browsing.

- They bind each new account to a Promoter role (see Multi-Actor Fraud Network) within the fraud network, ensuring that victim onboarding is traceable.

Typically, without a valid referral code, analysts would not be able to progress beyond the landing page, which significantly limits direct observation during investigations.

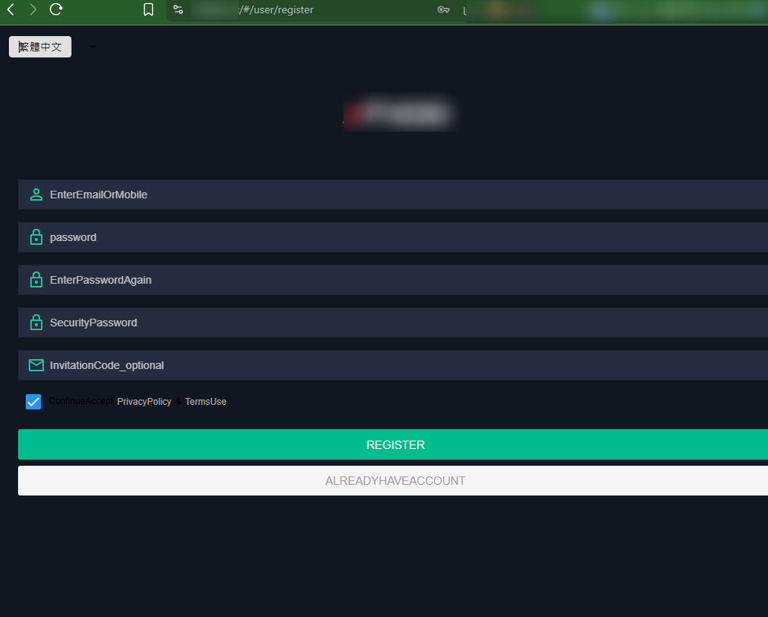

Figure 5. A screenshot of a registration form with an “Invitation Code” field.

To further the analysis, the analyst should attempt to obtain valid referral codes, which can often be found in:

- Promotional posts on social networks.

- Victim reports and complaint forums.

- Archived screenshots or shared scam warnings.

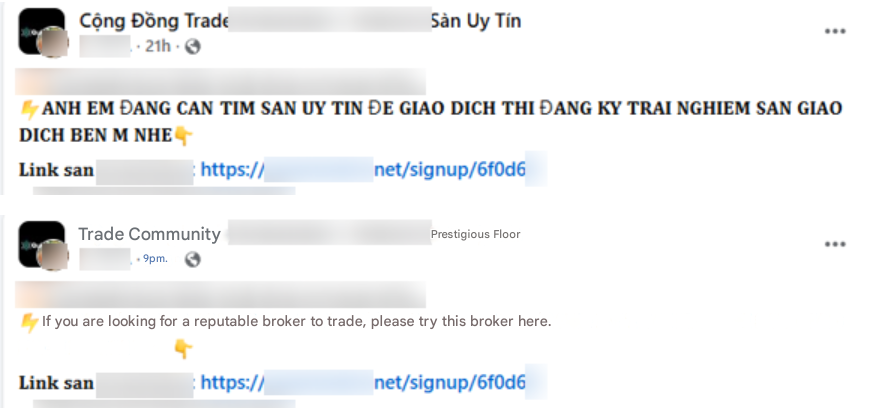

Figure 6. An example of a Facebook post promoting the platform with a referral code included, shown in the original Vietnamese with an English translation below.

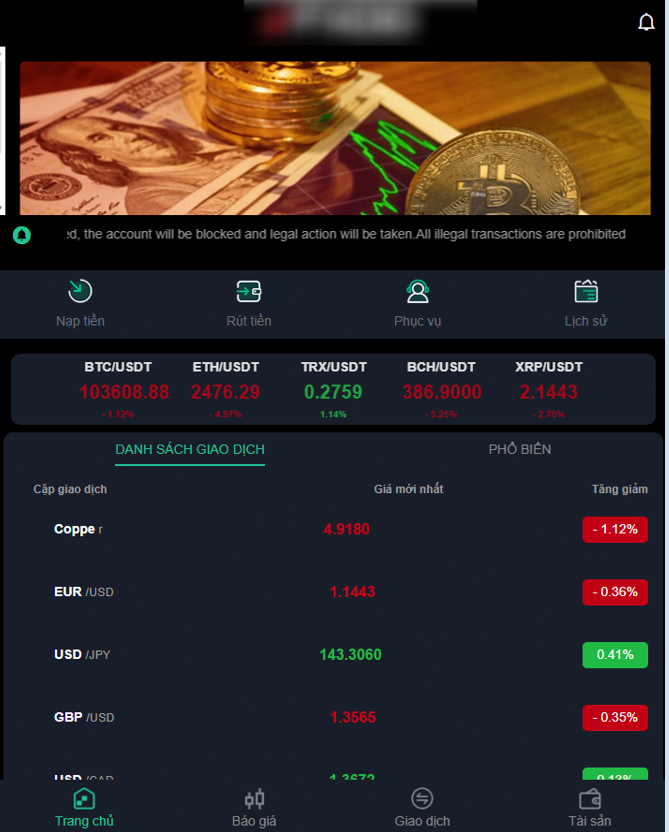

Figure 7. Example of a trading platform interface after successful login

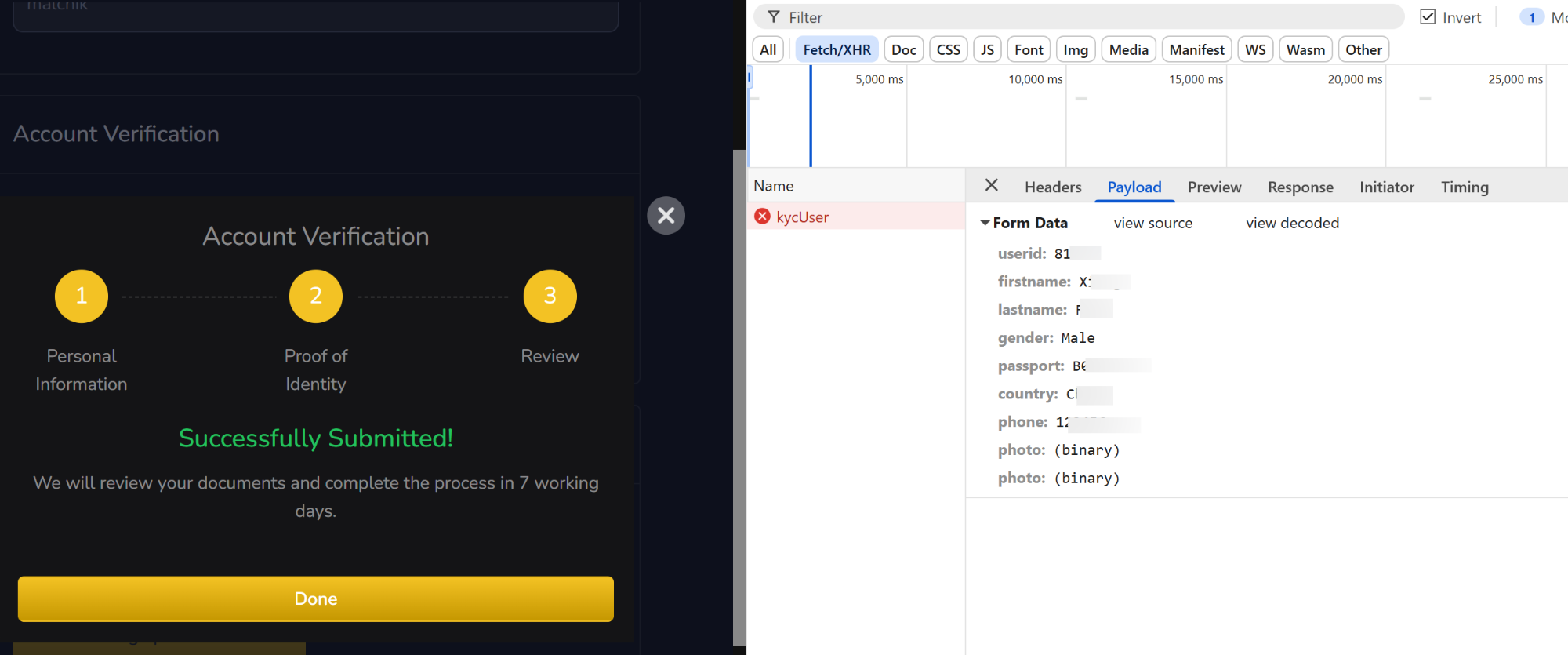

In the analysis of scam platforms, available features should be reviewed to identify what data is requested from victims. One common step is KYC verification, where victims are asked to submit personal details such as full name and ID number, and to upload identification documents.

Documenting these data-collection flows is critical for assessing what categories of personal information the operators are harvesting, and how this data may be reused across multiple campaigns.

For analysts, this is where careful observation is required:

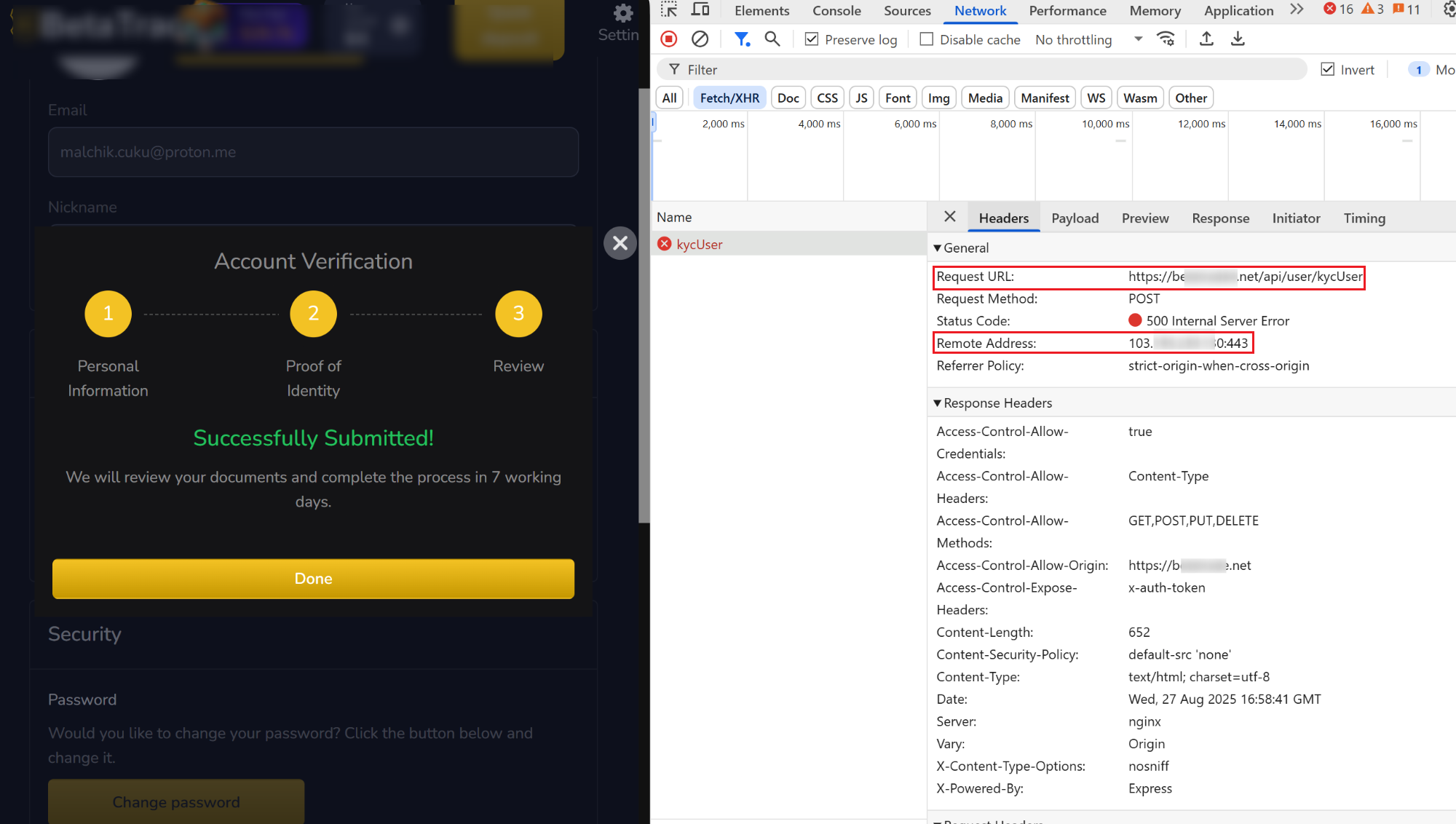

- Monitor the API endpoints involved in KYC form submissions and document uploads. Examples of such API requests are presented in the screenshot below:

Figure 8. A screenshot of the KYC submission process on the fraudulent platform, and analysis exposing backend IP and server headers.

- Record the field structures sent:

Figure 9. Example of the fraudulent platform’s payload structure from /api/user/kycUser, illustrating collected victim data.

- Inspect the wallet/bank-binding flow to determine whether the platform actually attempts integration or simply collects data for harvesting.

These observations help analysts to map the platform’s functionalities, identify the categories of personal data collected from victims, and trace the associated API endpoints and request URLs involved in the process.
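One way to record endpoints and field structures is to export the browsing session as a HAR file from the browser's developer tools and summarise it offline. The following is a minimal sketch under stated assumptions: it expects the standard HAR 1.2 layout (`log.entries[].request.postData.text`), the `/api/` path filter is a guess based on the `/api/user/kycUser` endpoint shown in Figure 9, and the function name `summarize_api_posts` is illustrative rather than part of any Group-IB tooling.

```python
import json
from urllib.parse import urlparse


def summarize_api_posts(har: dict, path_marker: str = "/api/") -> list[dict]:
    """Return URL, response status and JSON field names for each matching POST."""
    findings = []
    for entry in har.get("log", {}).get("entries", []):
        request = entry.get("request", {})
        url = request.get("url", "")
        # Only POST requests whose path contains the (assumed) API marker are kept.
        if request.get("method") != "POST" or path_marker not in urlparse(url).path:
            continue
        body_text = request.get("postData", {}).get("text", "")
        try:
            body = json.loads(body_text)
        except json.JSONDecodeError:
            body = None  # multipart uploads and form posts are not JSON
        # Record only the field names, not the submitted values.
        fields = sorted(body) if isinstance(body, dict) else []
        findings.append({
            "url": url,
            "status": entry.get("response", {}).get("status"),
            "fields": fields,
        })
    return findings
```

A saved session could then be summarised with `summarize_api_posts(json.load(open("session.har", encoding="utf-8")))`. Keeping only field names avoids copying test data or victim data into analyst notes.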

Potential Backend Sharing Between Domains

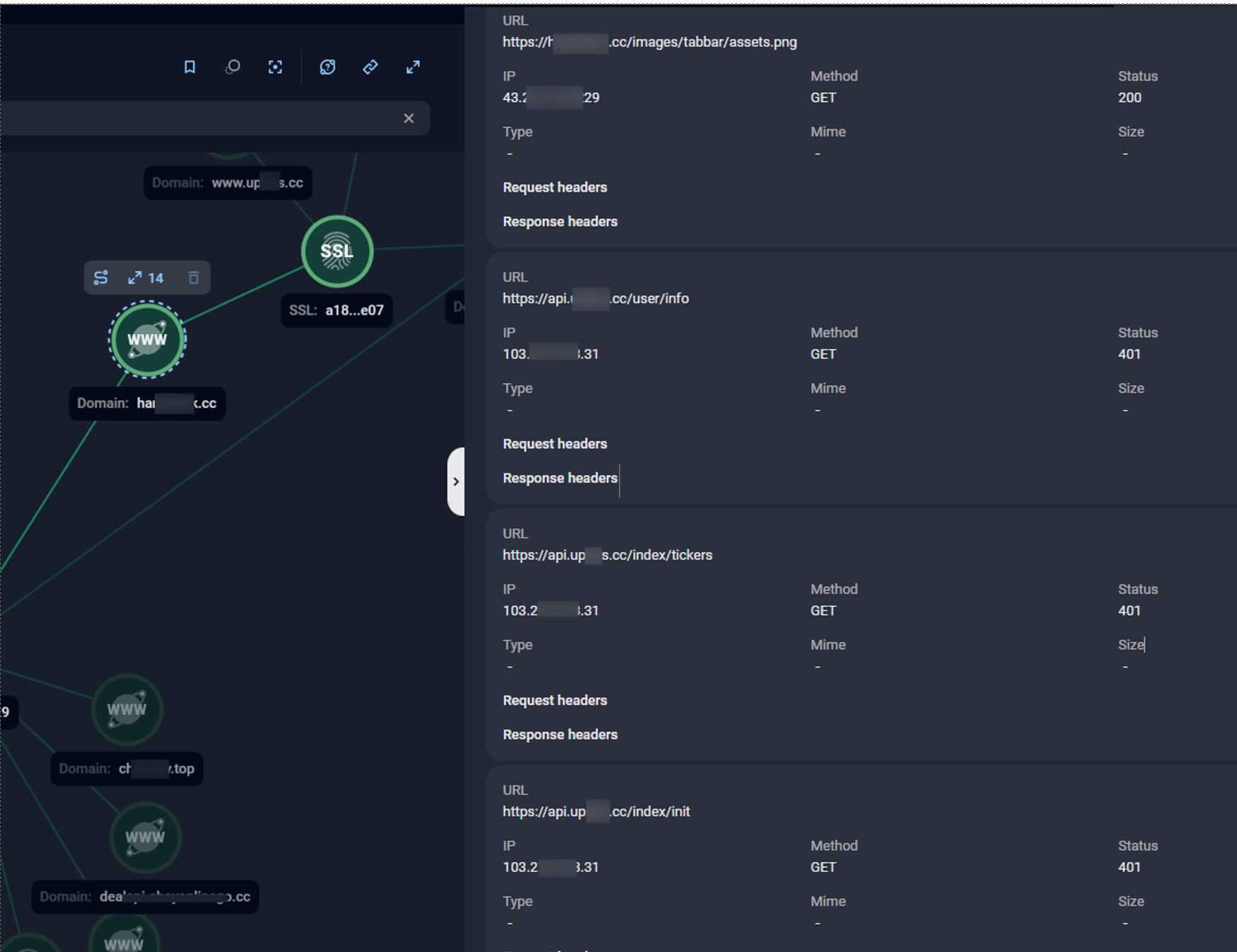

When investigating scam infrastructure, network traffic between related domains should be reviewed to identify potential backend connections. This can be achieved by capturing HTTP requests during controlled browsing sessions and examining whether one domain is invoking functions or resources hosted on another web resource.

In one of the investment scam campaigns investigated by Group-IB’s High-Tech Crime Investigation team, captured traffic revealed requests to an additional domain api.<main domain>, with paths such as /user/info, /index/tickers, and /index/init. These requests returned 401 Unauthorized, as shown in the following screenshot (Figure 10), indicating that the endpoints exist but require authentication. By contrast, a non-existent endpoint would typically return 404 Not Found. This suggested that both the primary scam domain and API subdomain could be under the control of the same threat actors.

For analysts, capturing such cross-domain interactions is valuable because it provides a pivot for linking infrastructure: identifying repeated endpoints, shared response behaviors, or correlated error codes across domains can help confirm when multiple scam sites are tied to a single operational backend. An example of correlation between domains is presented below on the Group-IB Graph:

Figure 10. Group-IB’s Graph solution reveals HTTP requests to protected API endpoints (e.g., /user/info, /index/tickers, /index/init) returning 401 Unauthorized responses, indicating the endpoints exist, but access is blocked due to the requirement of authentication.
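The 401-versus-404 reasoning can be applied to status codes already captured from several domains. This is a minimal sketch, not Group-IB's method: the host names are hypothetical, the function names are illustrative, and treating 403 the same way as 401 is an added assumption that the source does not state.

```python
def classify_endpoint(status_code: int) -> str:
    """Interpret a captured HTTP status as a hint about endpoint existence."""
    if status_code in (401, 403):  # 403 is an assumption; the article discusses 401 only
        return "exists-protected"
    if status_code == 404:
        return "not-found"
    if 200 <= status_code < 300:
        return "exists-open"
    return "inconclusive"


def shared_protected_paths(observations: dict[str, dict[str, int]]) -> dict[str, list[str]]:
    """Map each path to the hosts where it required authentication.

    `observations` has the shape {host: {path: status_code}} and only paths
    seen as protected on more than one host are returned.
    """
    by_path: dict[str, list[str]] = {}
    for host, paths in observations.items():
        for path, status in paths.items():
            if classify_endpoint(status) == "exists-protected":
                by_path.setdefault(path, []).append(host)
    return {path: sorted(hosts) for path, hosts in by_path.items() if len(hosts) > 1}
```

A path that is protected on several API subdomains is a correlation hint rather than proof, so it is best recorded alongside other overlaps such as certificates or hosting.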

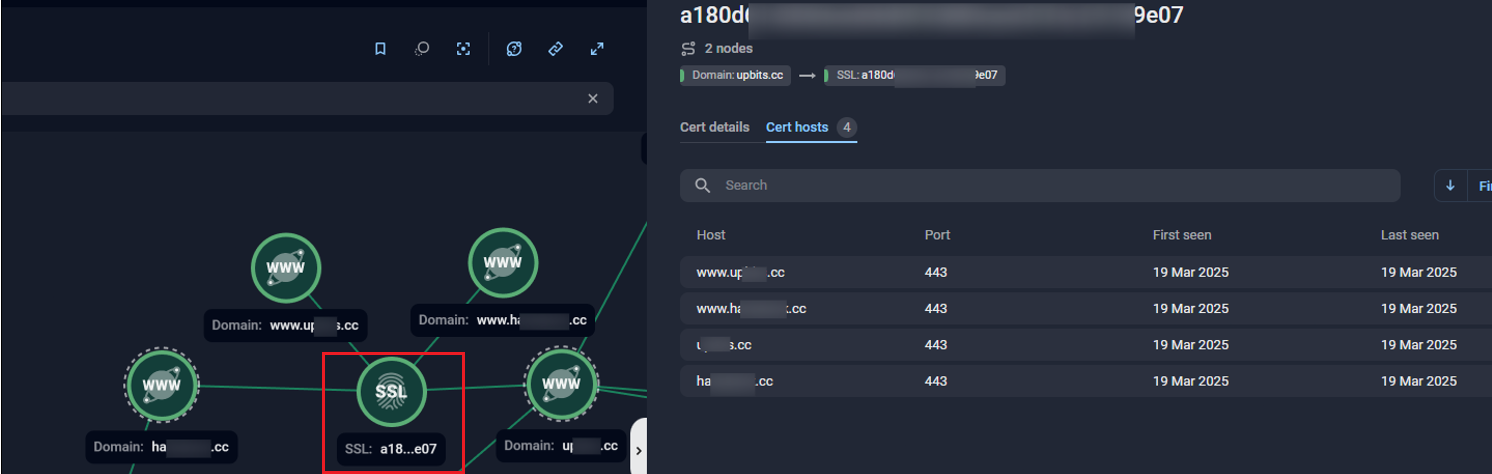

One way to strengthen the assessment that related domains are centrally managed is to check for SSL certificate reuse across infrastructure components. When multiple scam domains or API endpoints share the same certificate, particularly one observed exclusively within the scope of a single campaign, this serves as a strong indicator of shared infrastructure management. Certificate fingerprints provide reliable pivot points that allow analysts to cluster assets, track reuse across campaigns, and supply evidence to support attribution or takedown requests.

Figure 11. Group-IB’s Graph solution reveals SSL certificate fingerprint reuse across scam subdomains, illustrating centralized infrastructure management.

In summary, analysing cross-domain calls and SSL certificate reuse helps analysts move beyond individual domains to uncover links at the backend level. These technical artifacts provide reliable pivot points for clustering related sites and building a clearer picture of the underlying infrastructure.
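Certificate reuse can be checked by hashing each leaf certificate and grouping hosts by fingerprint. The sketch below assumes that a SHA-256 digest of the DER-encoded certificate is an acceptable fingerprint; the function names are illustrative. `fetch_der_certificate` opens a live connection to the target, so analysts may prefer to feed `cluster_by_certificate` with certificates taken from passive sources instead.

```python
import hashlib
import ssl
from collections import defaultdict


def fingerprint_sha256(der_cert: bytes) -> str:
    """SHA-256 fingerprint of a DER-encoded certificate, as lowercase hex."""
    return hashlib.sha256(der_cert).hexdigest()


def fetch_der_certificate(host: str, port: int = 443) -> bytes:
    """Retrieve the leaf certificate a server presents (makes a network connection)."""
    pem = ssl.get_server_certificate((host, port))
    return ssl.PEM_cert_to_DER_cert(pem)


def cluster_by_certificate(certs_by_host: dict[str, bytes]) -> dict[str, list[str]]:
    """Group hosts by certificate fingerprint, keeping fingerprints seen on 2+ hosts."""
    clusters = defaultdict(list)
    for host, der_cert in certs_by_host.items():
        clusters[fingerprint_sha256(der_cert)].append(host)
    return {fp: sorted(hosts) for fp, hosts in clusters.items() if len(hosts) > 1}
```

Wildcard certificates and certificates issued by shared hosting providers can produce large clusters that are not campaign-specific, which is why the exclusivity condition mentioned above matters.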

Exposed Admin Panels as Infrastructure Artifacts

Following the identification of chat simulation subdomains, Group-IB’s investigations have shown that pivoting across subdomains may reveal web-based admin panels exposed within scam infrastructure. These panels serve as backend control points for operators, providing functionality such as user management, credential handling, or monitoring of victim activity. Most importantly, the identification of the admin panel should rely on indirect indicators observable without authentication to avoid interacting directly with live criminal systems.

Based on Group-IB’s experience, analysts can apply the following methods:

- Directory & File Discovery: Scan web servers for hidden directories or files that may expose login pages or admin panels, often placed in sub-folders.

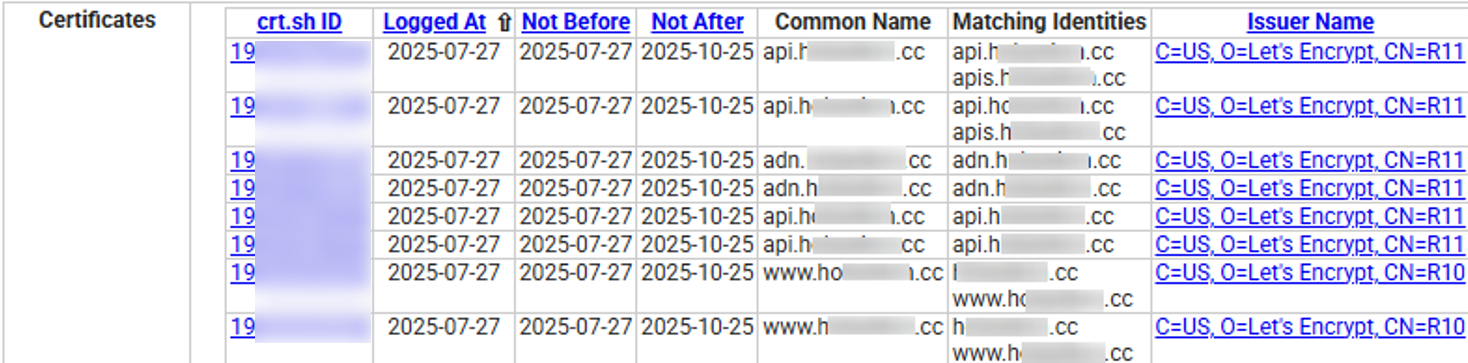

- Leverage Public Sources: Use Certificate Transparency logs (e.g., crt.sh) and search engine queries to discover subdomains. Focus on common administrative prefixes (admin, panel, dashboard, manage) or any other variations.

- Fingerprint Technologies: Use passive tools to identify the technologies used on the main website, which can give clues about the backend.

- Port Scanning: Use port-scanning tools to check for open ports beyond 80/443, as admin panels are sometimes exposed on alternative ports (e.g., 8080, 8081, 8443). This helps expand infrastructure mapping and may reveal hidden management interfaces.

- Source Code Review: Examine HTML, CSS, and JavaScript for indicators of administrative functionality. Useful clues may include <title> tags with terms like Admin or Management, or references to widely used UI frameworks.

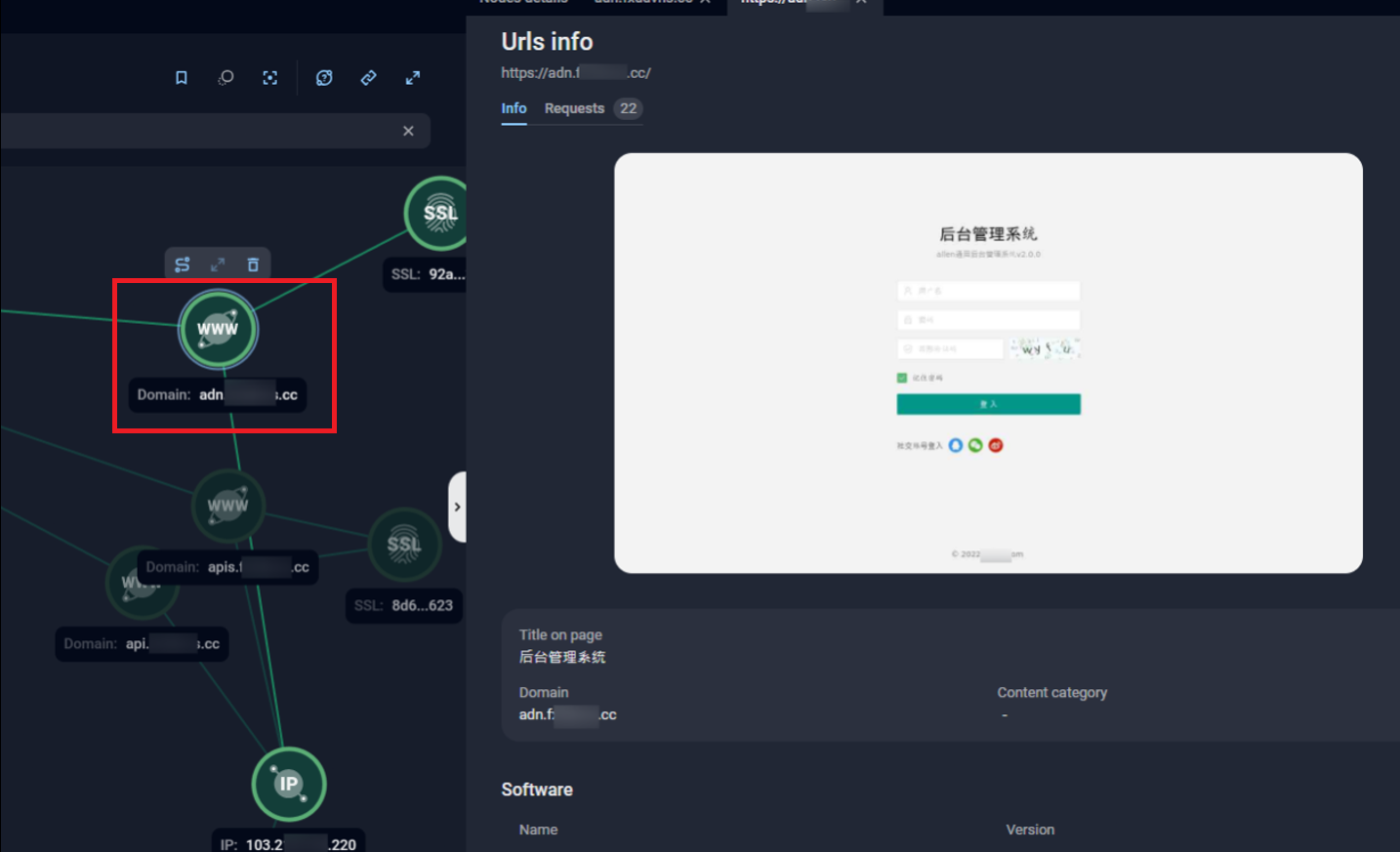

As a practical example of a particular campaign, querying crt.sh for domains linked to the campaign revealed multiple subdomains with consistent naming patterns (e.g., adn.<domain>, api.<domain>), each issued with its own certificate.

Figure 12. Example crt.sh output showing repeated subdomain patterns (adn., api.) across issued certificates, useful for identifying potential admin panels.
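Certificate Transparency results can be filtered for administrative-looking subdomains once they are downloaded. This minimal sketch assumes the records come from crt.sh's JSON output and that each record carries a `name_value` field holding newline-separated names; the prefix list combines the prefixes named above with the `adn` pattern from this campaign, and `example.test` in the usage note is a placeholder domain.

```python
ADMIN_PREFIXES = ("admin", "adn", "panel", "dashboard", "manage")


def candidate_admin_hosts(crtsh_records: list[dict],
                          prefixes: tuple = ADMIN_PREFIXES) -> list[str]:
    """Return unique hostnames whose first label starts with an admin-style prefix."""
    hosts = set()
    for record in crtsh_records:
        # One certificate may list several names, separated by newlines.
        for name in record.get("name_value", "").splitlines():
            name = name.strip().lower()
            if name.startswith("*."):
                name = name[2:]  # drop the wildcard label
            first_label = name.split(".", 1)[0]
            if first_label.startswith(prefixes):
                hosts.add(name)
    return sorted(hosts)
```

For example, records containing `adn.example.test` and `api.example.test` would yield only `adn.example.test`. Prefix matching is deliberately loose, so the output is a list of candidates to validate, not a list of confirmed panels.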

Analysts can then validate suspected admin-related subdomains either by directly reviewing accessible interfaces or by leveraging infrastructure analysis platforms, such as Group-IB’s Graph solution.

In the following example, subdomains following the adn.<domain> pattern were found to consistently resolve to a login interface presented entirely in Simplified Chinese. The interface featured standard login fields including username, password, and CAPTCHA fields, along with options for logging in via popular Chinese platforms such as Tencent’s QQ (腾讯QQ), WeChat (微信), and Weibo (微博). The page title, “后台管理系统” (Backend Management System), is often one of the clearest indicators suggesting administrative functionality.

Figure 13. The interface of a Chinese-language admin panel with options to log in using QQ or WeChat credentials, accessed via Group-IB’s Graph solution.

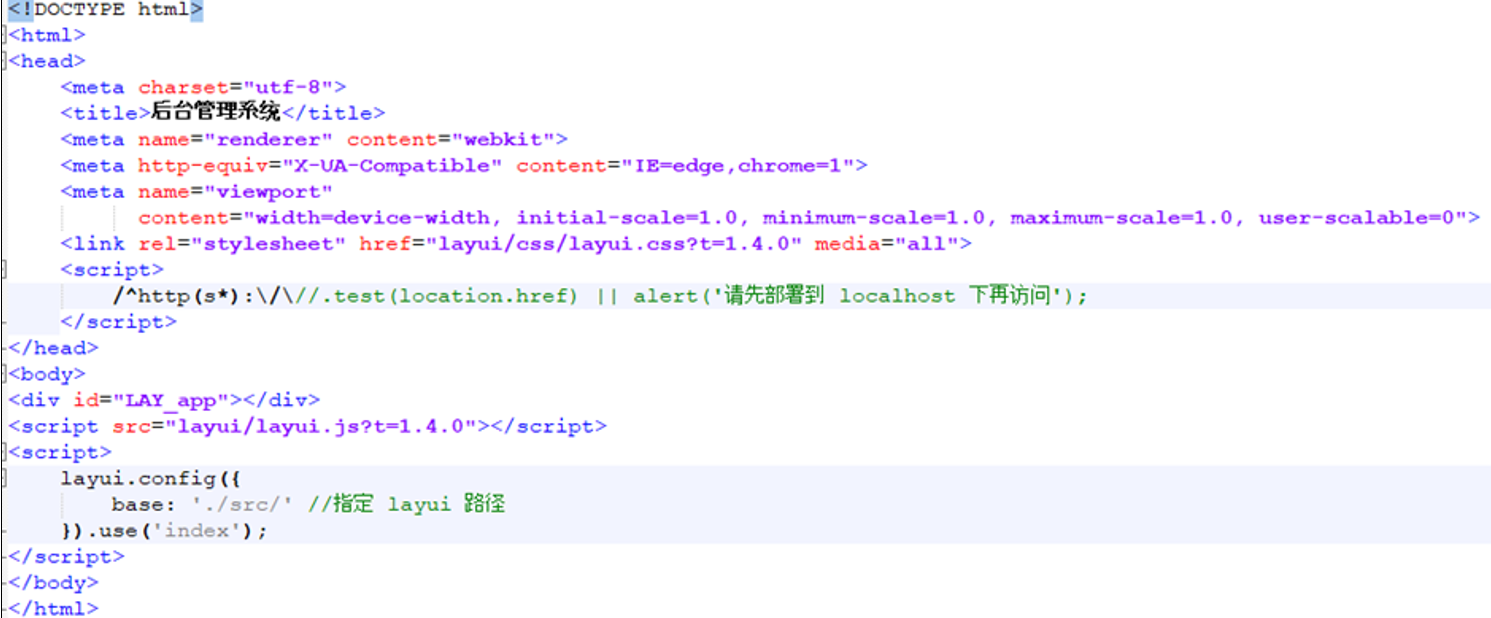

To determine the underlying technologies, the HTML and JavaScript source code of suspected admin panel pages can be reviewed. Source code analysis often provides confirmatory indicators that validate administrative functionality without requiring authentication.

In this example, useful markers include:

- <title> tags explicitly referencing administrative functions (e.g., “后台管理系统”, meaning “Backend Management System”).

- References to widely used UI frameworks such as Layui (e.g., layui.css, layui.js)

- Initialization scripts like layui.config().use('index') that activate management dashboards.

Layui is a lightweight Chinese front-end UI framework commonly used in dashboards and admin panels. Its presence not only confirms an administrative interface but also provides a reusable fingerprint for correlating similar panels across multiple domains within the same campaign.

Figure 14. A screenshot of the source code indicators confirming admin panel functionality and Layui framework usage.
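The markers listed above can be checked automatically against saved page source. The following is a minimal sketch using only the Python standard library; the title terms and the `layui` asset pattern are assumptions drawn from this example, and the function name `panel_markers` is illustrative.

```python
import re
from html.parser import HTMLParser

ADMIN_TITLE_TERMS = ("后台管理", "admin", "management")
LAYUI_ASSET = re.compile(r"layui(\.min)?\.(css|js)", re.IGNORECASE)


class _PanelMarkerParser(HTMLParser):
    """Collects the page title and any src/href values that reference Layui files."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.assets = []

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self.in_title = True
        for name, value in attrs:
            if name in ("src", "href") and value and LAYUI_ASSET.search(value):
                self.assets.append(value)

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def panel_markers(html: str) -> dict:
    """Summarise admin-panel indicators found in already-downloaded HTML."""
    parser = _PanelMarkerParser()
    parser.feed(html)
    title = parser.title.strip()
    return {
        "title": title,
        "admin_title": any(term in title.lower() for term in ADMIN_TITLE_TERMS),
        "layui_assets": parser.assets,
        "layui_init": "layui.config" in html,  # plain substring check
    }
```

Because the function works on saved source, it can be run against archived copies as well as live pages, and the resulting dictionaries can be compared across domains to spot identical panels.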

In addition to the indicators above, Group-IB recommends conducting cross-domain comparisons within the same campaign. When multiple domains expose admin panels with identical interfaces, source code, and underlying technologies, this strongly suggests the use of shared toolkits. If these panels also resolve to the same IP address or infrastructure component, this further supports and reinforces the assessment that operators are either reusing centralised backends or deploying from a common environment. Such patterns are a recurring feature of scam campaigns and provide reliable pivot points for clustering related infrastructure.

Onboarding via Chat-Based Interaction

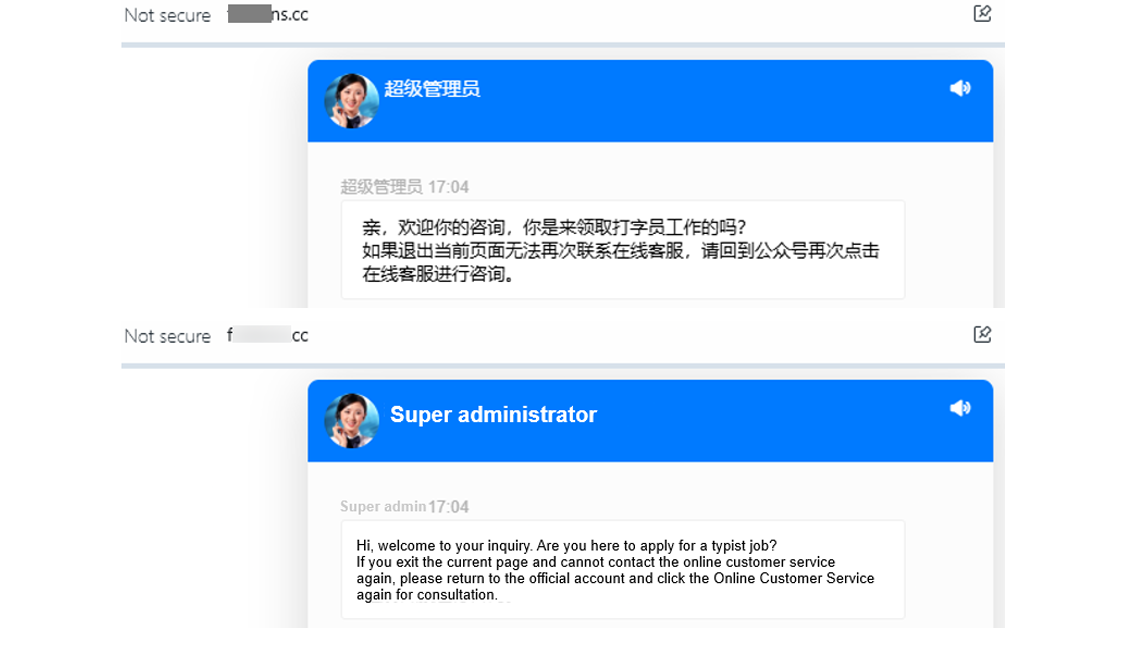

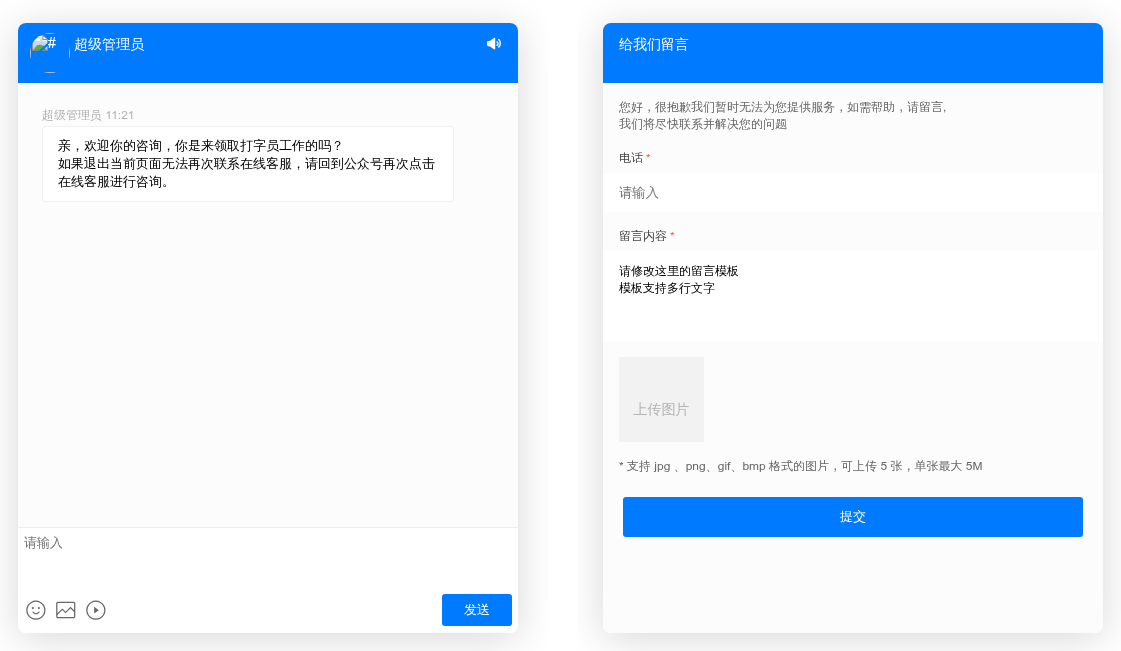

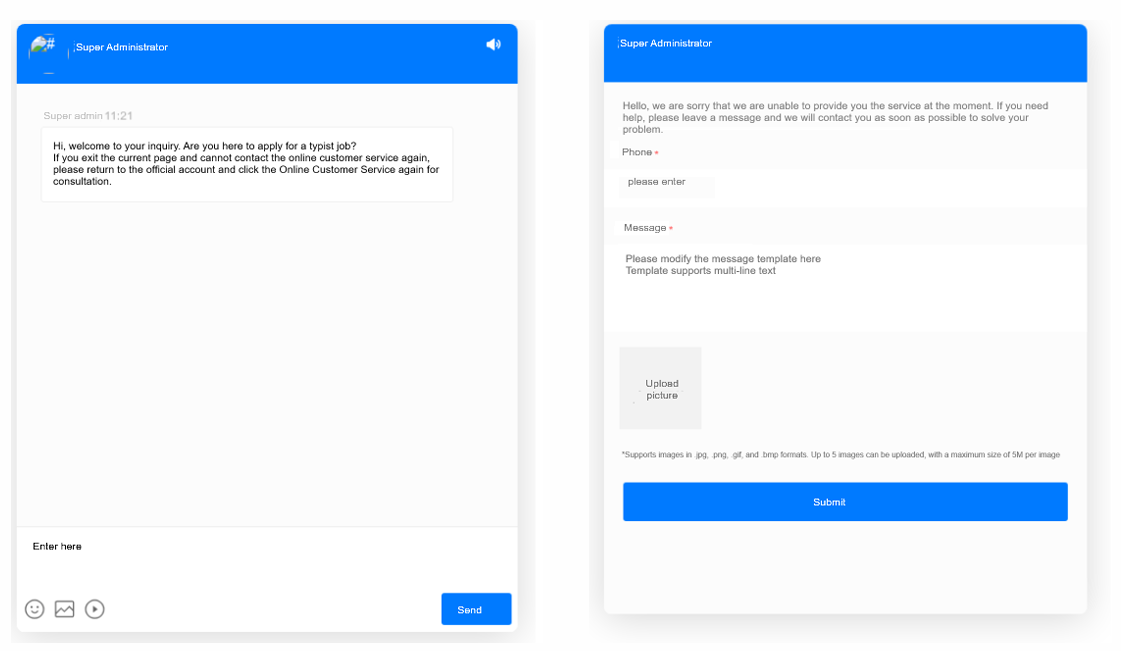

In several campaigns Group-IB observed recently, the initial interface did not present a direct registration form. Instead, it loaded a chat window resembling a live support system.

This interface was dominated by a chatbox powered by third-party services, with the first message appearing dynamically in different languages depending on the campaign.

Before being granted access to the platform’s main investment dashboard, users were required to interact with the chatbot, typically by providing basic identity information to confirm they were the intended victim. Only after completing this initial interaction were users redirected to the actual registration or trading interface.

Based on our analysis, this interaction flow appears to serve multiple purposes:

- Access Control: Acting as a gate to ensure that only pre-screened victims are allowed to proceed to the main platform.

- Trust Reinforcement: Creating the perception of professional customer support, and enhancing the platform’s credibility.

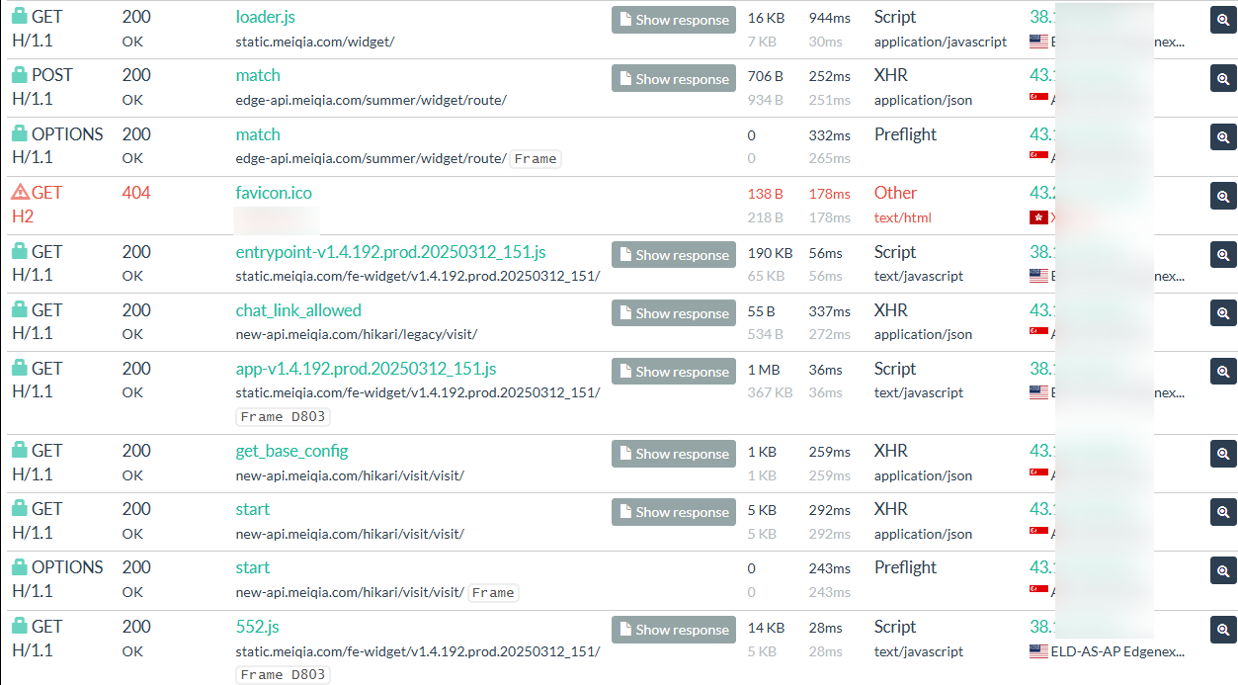

From an analyst’s perspective, chat-based onboarding flows provide an opportunity to identify which third-party chatbot service is embedded within the scam platform. This can be verified by capturing HTTP requests during the initial session and reviewing any API calls to external domains.

In several campaigns investigated by Group-IB’s High-Tech Crime Investigation team, traffic analysis revealed repeated requests to external chatbot infrastructure, although the specific provider may vary across campaigns. Documenting these observations is important, as they offer insight into the platform’s technical setup and can serve as additional indicators when correlating infrastructure across related operations.

Figure 16. A screenshot of an HTTP request trace showing API calls to the Meiqia chatbot service. Source: urlscan.io.
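Spotting the embedded chatbot provider usually comes down to listing which third-party hosts a page contacts. The sketch below counts requests to hosts outside the scam site's own domain, given a list of request URLs exported from a capture. The function name is illustrative and the host names in the usage note are placeholders, not real provider domains.

```python
from collections import Counter
from urllib.parse import urlparse


def external_hosts(page_host: str, request_urls: list[str]) -> Counter:
    """Count requests per hostname that does not belong to the page's own domain."""
    page_host = page_host.lower()
    counts = Counter()
    for url in request_urls:
        host = (urlparse(url).hostname or "").lower()
        # Skip unparsable URLs, the page itself, and its own subdomains.
        if not host or host == page_host or host.endswith("." + page_host):
            continue
        counts[host] += 1
    return counts
```

For a page on `scam.example` that loads `https://static.chat-vendor.example/widget.js`, the result would contain `static.chat-vendor.example` with a count of 1. Hosts that recur across several scam sites are the ones worth documenting as correlation indicators.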

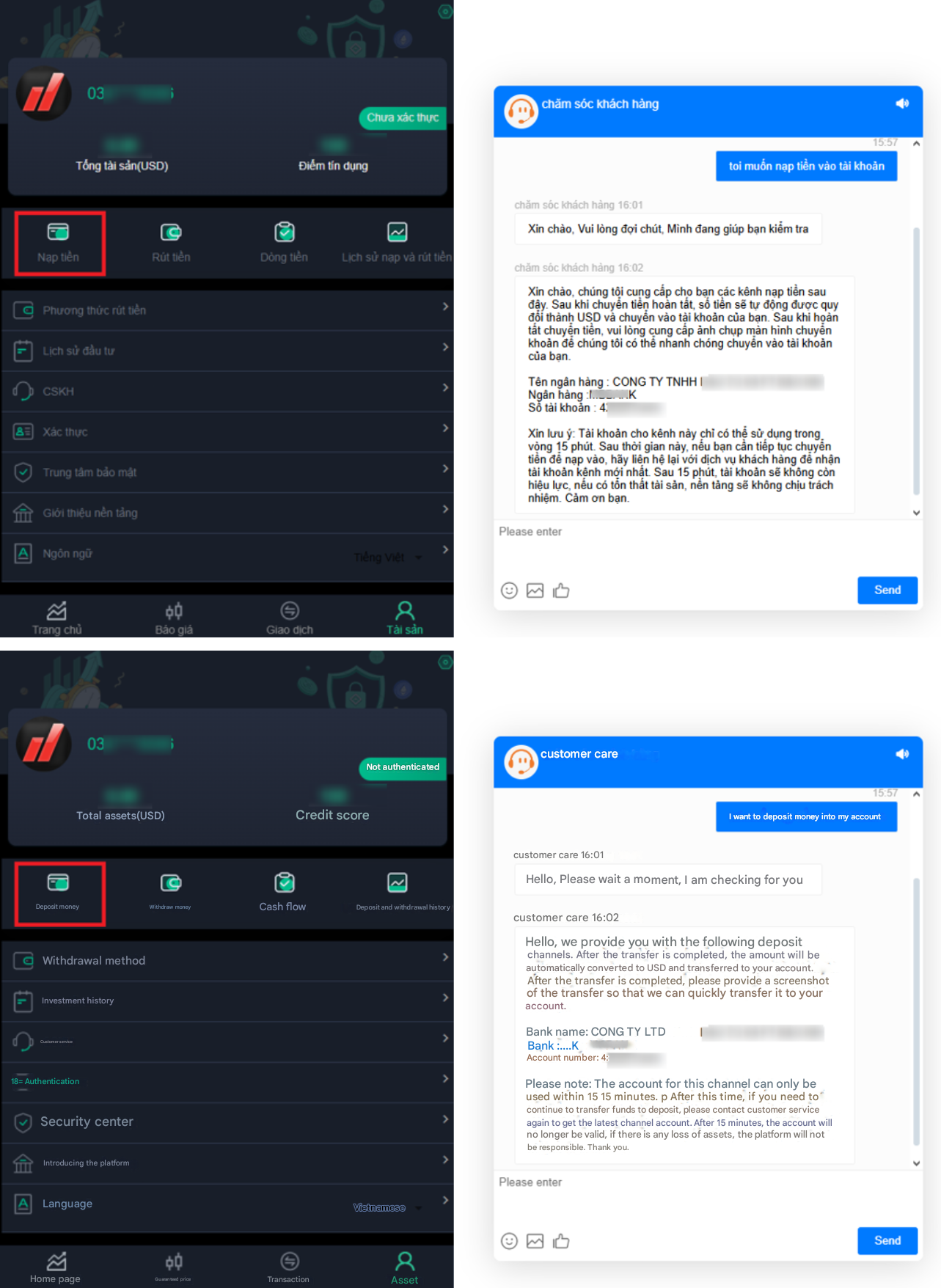

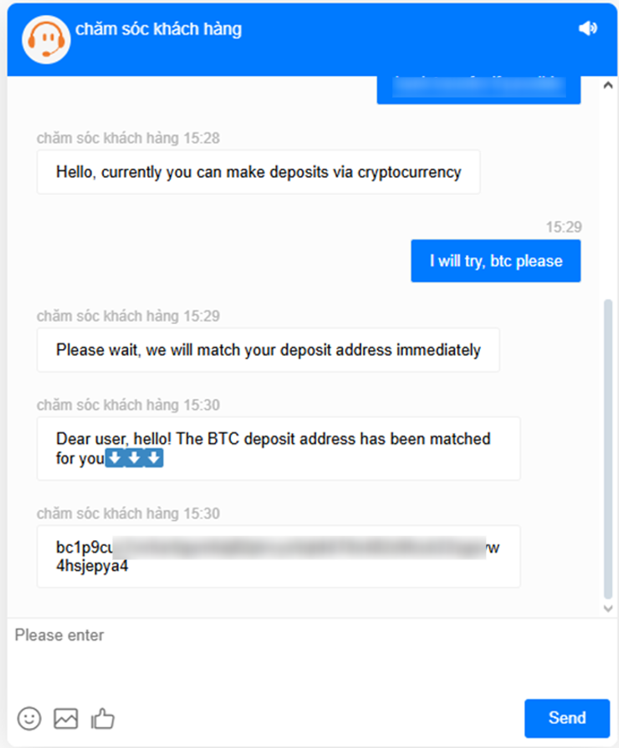

When victims select the “Top-up” function, the platform typically redirects them to a chatbot window rather than displaying payment instructions.

For analysts, capturing these chatbot-delivered payment instructions is critical. The returned bank accounts and cryptocurrency wallet addresses serve as high-value indicators for tracing transactions and understanding how the Payment Handler role operates within the scam ecosystem.

For law enforcement, such records provide actionable leads:

- Bank accounts can be traced back to shell companies, nominee owners, or complicit intermediaries.

- Cryptocurrency wallets can be tracked across blockchain transactions to identify laundering patterns.

- Repeated reuse of the same accounts across multiple campaigns can help strengthen attribution assessments and support formal requests for financial intelligence from banks and exchanges.

Figure 17. A screenshot of the deposit flow where the chatbot delivers corporate bank account details to the user.

Figure 18. A screenshot of the interaction with the chatbot providing a cryptocurrency wallet address during top-up.
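Payment details delivered in chatbot messages can be pulled out of saved transcripts with pattern matching. This is a rough sketch under loud assumptions: the regular expressions are format heuristics for Ethereum-style, TRON-style, and Bitcoin bech32 addresses and do not verify checksums, and the bank account pattern (any standalone run of 8 to 16 digits) will also match phone numbers and order IDs, so every hit needs manual review.

```python
import re

# Heuristic patterns only; none of them validates a checksum.
PATTERNS = {
    "ethereum": re.compile(r"\b0x[a-fA-F0-9]{40}\b"),
    "tron": re.compile(r"\bT[1-9A-HJ-NP-Za-km-z]{33}\b"),
    "bitcoin_bech32": re.compile(r"\bbc1[ac-hj-np-z02-9]{11,71}\b"),
    "bank_account": re.compile(r"\b\d{8,16}\b"),  # assumption: digits-only account numbers
}


def extract_payment_indicators(message: str) -> dict[str, list[str]]:
    """Return candidate wallet addresses and account numbers found in a chat message."""
    found = {}
    for label, pattern in PATTERNS.items():
        matches = sorted(set(pattern.findall(message)))
        if matches:
            found[label] = matches
    return found
```

Storing the extracted values together with the timestamp and the originating domain makes it easier to notice when the same account or wallet reappears in another campaign.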

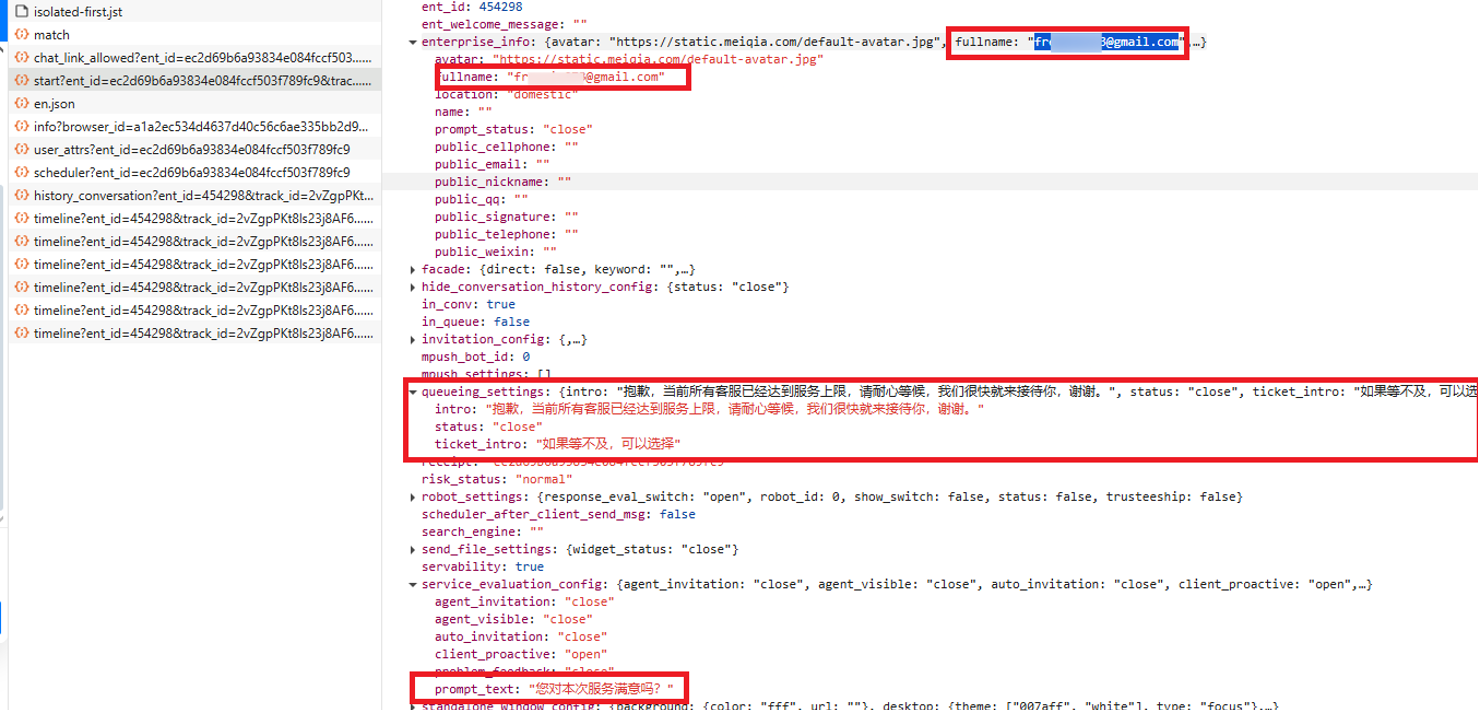

To extend analysis beyond the user-facing interaction, analysts can examine the backend responses of embedded chatbots. When inspecting chatbot payloads, analysts should check not only for technical parameters such as configuration data or registered accounts, but also for linguistic artifacts like system prompts or fallback messages. Both types of indicators can provide valuable context, although the findings may vary depending on the chatbot provider and campaign.

In one investigation, detailed below, analysis of a Meiqia chatbot response payload revealed backend configuration data containing system-level parameters, queue messages, and a registered email address. The same payload also contained Chinese-language system messages, such as queue notifications and ticket instructions, which were not visible in the frontend. Together, these details provided both a potential lead to the service account and insight into the operator’s technical setup.

Figure 19. A screenshot of chatbot payload inspection exposing configuration data, a registered email, and language-specific system strings observed by Group-IB’s High-Tech Crime Investigations analysts in one case.

Analyst Guidance: Payload artifacts such as registered emails and backend language strings should be collected and preserved. Emails associated with chatbot service accounts can serve as potential attribution leads, for example, being cross-checked against breach datasets or WHOIS records. Language artifacts, such as system prompts or queue messages, can provide supporting evidence about the operator’s origin, preferred language, or reliance on outsourced service use.

Group-IB advises treating these as supplementary indicators, best used in combination with other evidence such as infrastructure overlaps, payment account details, or social engineering materials observed in the same campaign.
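Collecting these payload artifacts can be partly automated by walking a saved JSON response and flagging email addresses and strings that contain Chinese characters. The sketch below makes no assumption about the provider's schema, since it visits every string value; the email pattern is a simple heuristic, the CJK check covers only the basic CJK Unified Ideographs range, and the function names are illustrative.

```python
import json
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CJK = re.compile(r"[\u4e00-\u9fff]")  # basic CJK Unified Ideographs block only


def walk_strings(node, path="$"):
    """Yield (json_path, string) for every string value in a parsed JSON document."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from walk_strings(value, f"{path}.{key}")
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from walk_strings(value, f"{path}[{index}]")
    elif isinstance(node, str):
        yield path, node


def payload_artifacts(payload_text: str) -> dict:
    """Extract email addresses and CJK-bearing strings from a JSON payload."""
    emails, cjk_strings = set(), []
    for path, text in walk_strings(json.loads(payload_text)):
        emails.update(EMAIL.findall(text))
        if CJK.search(text):
            cjk_strings.append((path, text))
    return {"emails": sorted(emails), "cjk_strings": cjk_strings}
```

Recording the JSON path next to each string preserves where in the payload the artifact appeared, which helps when the same provider is encountered again in a different campaign.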

Auxiliary Infrastructure Components in Scam Campaigns

When analyzing scam infrastructure, Group-IB advises analysts to expand their focus beyond the visible scam front-ends and account for auxiliary components that may be supporting these fraudulent workflows. These components often provide operational functionality to threat actors and can be systematically uncovered using established investigative techniques:

- Subdomain Enumeration: Subdomains may reveal backend functions that expose admin panels, chat simulators, or APIs.

- Infrastructure Pivoting: Domains within the same campaign often share the same IP or hosting range. Pivoting to these infrastructure elements can reveal additional services or reused interfaces.

- Archived Artifacts: Even after takedowns, archived scans (e.g., URLScan, Wayback Machine) preserve HTML, JavaScript, or developer comments, allowing analysts to reconstruct lost functionality.

- Code & Framework Fingerprinting: Identifying shared UI frameworks or recurring source code snippets across domains provides a consistent way to confirm toolkit reuse.

Drawing from Group-IB’s investigations, the following sections demonstrate how these techniques apply in practice through two representative examples frequently observed in scam infrastructure: chat simulation features and exposed administrative panels.

Presence of Chat Simulation Features in Infrastructure

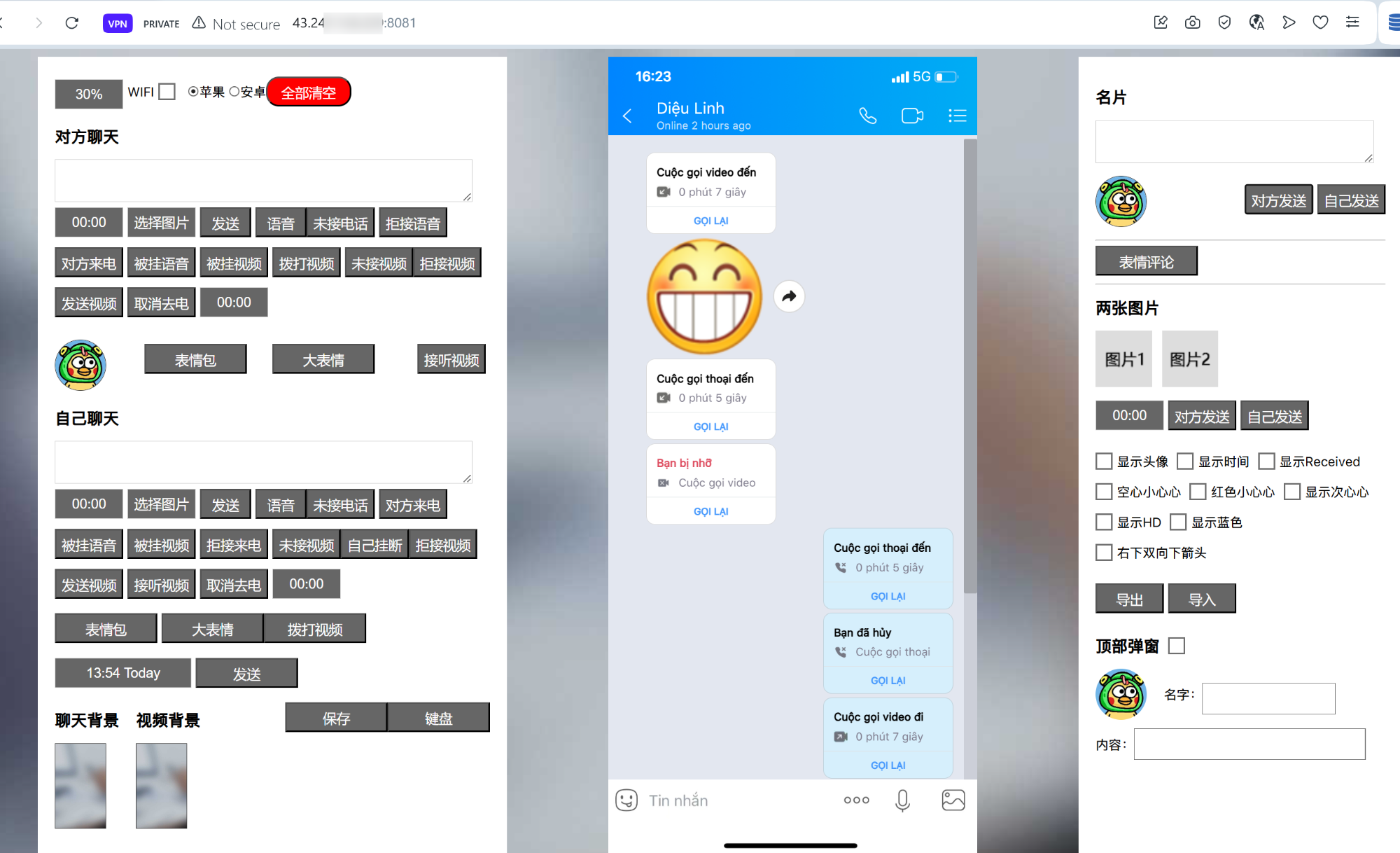

The hypothesis mentioned in the Promoters section that scammers may rely on chat generator tools to fabricate conversations was reinforced by Group-IB’s analysis, which identified a concrete example of such a tool within scam infrastructure: a chat simulation panel observed in a recent campaign.

The panel mimics a mobile messaging interface, complete with fields for usernames, timestamps, delivery status, and message content. While not present in every campaign, the discovery of such panels highlights how scammers may integrate auxiliary tools into their infrastructure.

These artifacts are significant for two reasons:

- Staging the Gaining Trust phase: In this particular case, fabricated chats were used to simulate prior success stories or impersonate support personas.

- Infrastructure Pivots: Identifying subdomains that host auxiliary tools enables analysts to expand mapping efforts beyond the trading front-end, uncovering the broader ecosystem controlled by the operators.

Figure 20. Group-IB’s Graph solution reveals the interface of a web-based messaging simulator discovered on an infrastructure-linked subdomain. The design mimics a chat application and includes configurable message metadata, likely intended to generate fabricated conversation screenshots for victim persuasion.

As a next step, analysts can attempt to review the source code of the exposed chat-simulator subdomain. In several investigations conducted by Group-IB’s High-Tech Crime Investigation team, these pages had already been taken down by the time of analysis, making direct access impossible. While retrieving the source code of a decommissioned website is challenging, several approaches can be applied:

- Archived Snapshots: Services such as the Wayback Machine may preserve historical copies of HTML and JavaScript.

- Search Engine Caches: Cached versions stored by Google or Bing can sometimes be retrieved.

- Scanning Platforms: Tools like URLScan often archive full page content, including HTTP transactions and embedded scripts, which can be invaluable for reconstruction.
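For archived snapshots, the Internet Archive offers an availability endpoint that reports the closest stored capture of a URL. The sketch below builds the query and parses the response; it assumes the JSON layout `archived_snapshots.closest` with `available`, `url`, and `timestamp` keys, and the function names are illustrative. Only `lookup` touches the network.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

AVAILABILITY_API = "https://archive.org/wayback/available"


def availability_url(target: str, timestamp: str = "") -> str:
    """Build the availability query; timestamp is optional, formatted YYYYMMDD[hhmmss]."""
    params = {"url": target}
    if timestamp:
        params["timestamp"] = timestamp
    return f"{AVAILABILITY_API}?{urlencode(params)}"


def closest_snapshot(api_response: dict):
    """Return (snapshot_url, timestamp) from a parsed response, or None if absent."""
    closest = api_response.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url"), closest.get("timestamp")
    return None


def lookup(target: str, timestamp: str = ""):
    """Query the availability endpoint for a target (makes a network request)."""
    with urlopen(availability_url(target, timestamp), timeout=30) as response:
        return closest_snapshot(json.load(response))
```

A result of `None` means only that no capture was reported for that exact URL, so checking the other sources in the list above remains worthwhile.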

The screenshot below illustrates how analysts can pivot to URLScan results to recover HTTP transactions of a suspicious subdomain. Even if the domain has already been taken down, archived scans often preserve the complete HTML and JavaScript, enabling analysts to reconstruct and study the original site structure.

Figure 21. A screenshot of a URLScan record showing preserved HTTP transactions of a discovered domain.

The retrieved HTML contains developer comments and update logs in Simplified Chinese, referencing new feature additions such as WeChat integration and LINE UI updates. These artifacts provide valuable context, suggesting the developer’s likely linguistic background and offering possible clues regarding geographic origin.

Figure 22. A screenshot of the extracted HTML, with developer comments recovered via URLScan.
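Developer comments can be pulled from recovered HTML without rendering the page. This minimal sketch uses the standard library's `HTMLParser` to collect HTML comments and can optionally keep only those that contain Chinese characters. It does not capture JavaScript comments inside script blocks, which is a known limitation, and the function name is illustrative.

```python
import re
from html.parser import HTMLParser

CJK = re.compile(r"[\u4e00-\u9fff]")  # basic CJK Unified Ideographs block only


class CommentCollector(HTMLParser):
    """Collects the text of every non-empty HTML comment."""

    def __init__(self):
        super().__init__()
        self.comments = []

    def handle_comment(self, data):
        text = data.strip()
        if text:
            self.comments.append(text)


def developer_comments(html: str, cjk_only: bool = False) -> list[str]:
    """Return HTML comments in document order, optionally only those with CJK text."""
    collector = CommentCollector()
    collector.feed(html)
    collector.close()
    if cjk_only:
        return [c for c in collector.comments if CJK.search(c)]
    return collector.comments
```

Language found in comments supports, but does not establish, conclusions about a developer's background, so these strings are best preserved verbatim alongside the archive source they came from.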

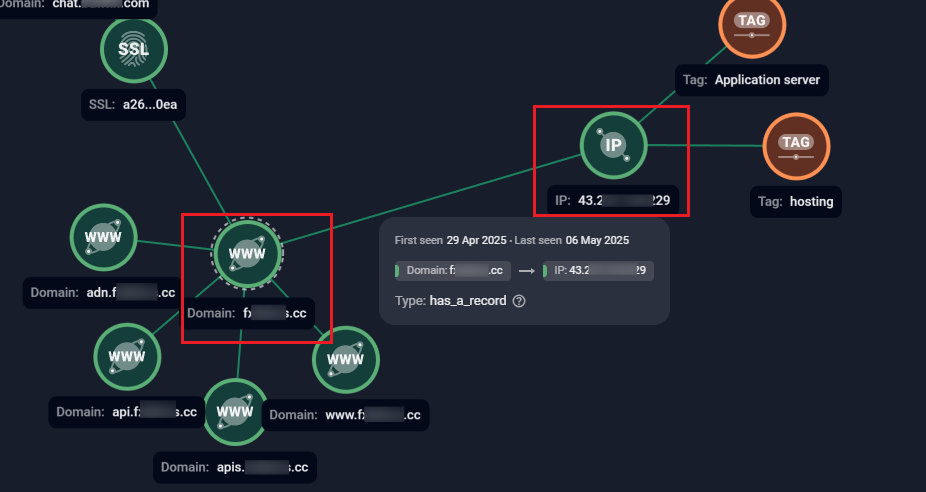

Pivoting to the hosting IP can reveal additional services running on ports that are not directly exposed through the main domain. In the earlier example, a subdomain exposed a web-based chat simulation. Pivoting to its hosting IP revealed the same interface on port 8081, confirming that both the domain and the IP-hosted service were part of the same backend. This demonstrates how examining IPs can uncover hidden services, duplicated interfaces, and broader infrastructure links that may not be immediately visible from the primary domain.

Figure 23. Group-IB’s Graph solution showing an example of domain-to-IP resolution, with multiple scam subdomains mapped to a hosting server.

Figure 24. A screenshot of a website hosted on the same server exposing the same chat simulation interface previously observed on the subdomain.
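One way to support the conclusion that a domain and an IP-hosted service share a backend is to compare fingerprints of the pages they return. The sketch below assumes the two response bodies have already been retrieved (for example, from archived scans). It builds candidate URLs for a hosting IP and compares SHA-256 hashes of whitespace-normalized, lowercased HTML. The function names, the default port list, and the `203.0.113.10` documentation address are assumptions for illustration only.

```python
import hashlib
import re
from typing import Iterable, List


def candidate_urls(ip: str, ports: Iterable[int] = (80, 443, 8080, 8081)) -> List[str]:
    """List URLs worth checking on a hosting IP, one per port."""
    return [f"{'https' if port == 443 else 'http'}://{ip}:{port}/" for port in ports]


def normalize(html: str) -> str:
    """Collapse whitespace and lowercase so trivial differences are ignored."""
    return re.sub(r"\s+", " ", html).strip().lower()


def fingerprint(html: str) -> str:
    """SHA-256 of the normalized page body."""
    return hashlib.sha256(normalize(html).encode("utf-8")).hexdigest()


def same_interface(body_a: str, body_b: str) -> bool:
    """True if two page bodies are identical after normalization."""
    return fingerprint(body_a) == fingerprint(body_b)


if __name__ == "__main__":
    print(candidate_urls("203.0.113.10"))
    from_domain = "<html>\n  <title>Chat</title></html>"
    from_ip = "<HTML> <TITLE>Chat</TITLE></HTML>"
    print(same_interface(from_domain, from_ip))
```

An exact hash match is a strict test. Pages that embed timestamps or session tokens will differ on every request, so a fuzzy comparison or a hash of static assets may be more suitable in practice.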

Analysts can also extend their investigation with Open-Source Intelligence (OSINT) to determine whether the same toolkits are promoted publicly. Advertisements for chat generators that create fake Zalo, LINE, or WhatsApp conversations demonstrate that these kits are openly available and frequently reused across campaigns. Such discoveries provide pivot points for correlation and offer insight into which victim groups or regional audiences may be specifically targeted.

Figure 25. Screenshots of public advertisements for chat generator toolkits that imitate commonly used and popular messaging applications.

The observed toolkit enables manual creation of fake chat windows for popular instant messaging platforms. While its current operation is manual, it could be extended with automation or AI modules. From the perspective of Group-IB’s High-Tech Crime Investigations team, such findings should be viewed as early indicators of tooling that could evolve toward automation.

This trajectory is not unique to chat simulators. Group-IB has already observed generative AI being integrated into spam and phishing infrastructures, where AI modules are used to personalize lures, bypass filters, and automate large-scale campaigns. These developments highlight a broader trend towards increasingly sophisticated, AI-enabled fraud.

Conclusion

Investment scam campaigns leveraging fake trading platforms are increasingly structured and transnational. Far from being the work of isolated actors, these schemes are operated by role-based fraud networks, with distinct units for profiling victims, deploying infrastructure, and laundering illicit proceeds.

The Victim Manipulation Flow, derived from recently dismantled cases in Vietnam, provides investigators and law enforcement agencies with a clear framework for understanding how victims are identified, approached, and manipulated. It is a valuable tool for public awareness, professional training, and early detection of similar scam operations.

The Multi-Actor Fraud Network model gives experts a structured view of how these groups operate, defining how responsibilities are divided between operators, from intelligence gathering to technical operations and money handling. This perspective helps investigators avoid blind spots during arrests and provides stronger evidence for criminal prosecution.

Group-IB’s technical analysis offers step-by-step insight for analysts. It explains how campaigns control access using invitation codes, how chatbots are used to screen targets and deliver payment instructions, and how infrastructure often includes auxiliary components like chat simulators.

These components are not merely supporting tools. They reveal how fraud is orchestrated and industrialized, and expose patterns of toolkit reuse across domains.

Most importantly, the presence of chat simulation features signals a potential trajectory towards automated or AI-driven conversations that are more convincing at scale. While no confirmed AI integration was observed in these cases, the artifacts identified represent early indicators of evolving tactics that should be closely monitored.

For cybersecurity experts, these findings underscore the importance of collecting and correlating technical evidence to connect related domains, attribute operations to specific actors, and ultimately dismantle their infrastructure. For law enforcement agencies, the models provide a practical framework for explaining scam operations, raising public awareness, and building stronger cases for investigations and criminal prosecutions.

Recommendations

For Researchers and Analysts

- Apply the Victim Manipulation Flow and Multi-Actor Fraud Network models as analytical frameworks to study ongoing campaigns.

- Pivot on recurring infrastructure patterns to cluster related domains and uncover centralised operations.

- Track structural and behavioral indicators across campaigns to detect overlaps and build broader correlations.

- Monitor the rise of auxiliary components, as they may signal a shift toward more advanced automation or AI-driven fraud operations, offering early indicators of how investment scam tactics could evolve in the near future.
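The pivoting and correlation recommendations above can be sketched as a simple clustering step: domains that share any infrastructure indicator are merged into one group. The example below is a minimal illustration using a union-find structure. The indicator labels (`cert:`, `ip:`, `fw:`), the function name, and the sample domains are hypothetical and are not data from this research.

```python
from collections import defaultdict
from typing import Dict, List, Set


def cluster_domains(observations: Dict[str, Set[str]]) -> List[Set[str]]:
    """Group domains that are linked by at least one shared indicator."""
    parent = {domain: domain for domain in observations}

    def find(node: str) -> str:
        # Follow parent links to the root, shortening the path as we go.
        while parent[node] != node:
            parent[node] = parent[parent[node]]
            node = parent[node]
        return node

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # Invert the mapping: indicator -> domains that exhibit it.
    by_indicator: Dict[str, List[str]] = defaultdict(list)
    for domain, indicators in observations.items():
        for indicator in indicators:
            by_indicator[indicator].append(domain)

    # Every domain sharing an indicator joins the same cluster.
    for domains in by_indicator.values():
        for other in domains[1:]:
            union(domains[0], other)

    groups: Dict[str, Set[str]] = defaultdict(set)
    for domain in observations:
        groups[find(domain)].add(domain)
    return sorted(groups.values(), key=lambda group: sorted(group))


if __name__ == "__main__":
    observed = {
        "a.example": {"cert:AA", "ip:203.0.113.10"},
        "b.example": {"cert:AA"},
        "c.example": {"ip:203.0.113.10", "fw:layui"},
        "d.example": {"cert:BB"},
    }
    for cluster in cluster_domains(observed):
        print(sorted(cluster))
```

Indicators differ in strength. A reused certificate is a far stronger link than a popular front-end framework, so in practice weak indicators should be weighted or excluded before merging clusters to avoid false correlations.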

For Law Enforcement Agencies

To strengthen fraud prevention and reduce abuse of fake investment platforms, we recommend that agencies:

- Adopt the Victim Manipulation Flow model to recognise the staged tactics scammers use, enabling earlier intervention and more effective awareness campaigns for the public.

- Leverage the Multi-Actor Fraud Network model to understand how fraud groups divide roles and responsibilities, helping investigators avoid blind spots during operations and build stronger cases for prosecution.

- Coordinate with Group-IB’s Investigation Team for intelligence sharing, infrastructure mapping, and support in cross-border digital forensics and attribution efforts.

For Financial Institutions

- Proactively monitor for unauthorized use of brand identity—including fake websites impersonating trading platforms or customer portals.

- Set up a centralized incident response framework to respond quickly to reports of phishing or impersonation.

- Educate customers regularly on common fraud tactics and reporting channels.

- For Banks Hosting Fraudulent Receiving Accounts: Cross-check new corporate accounts with public company registries to detect fake or inactive business entities. Integrate Group-IB Fraud Protection to detect anomalies in payment flows, flag mule accounts, and support real-time investigation and blocking of fraud-related funds.

- Deploy Group-IB Digital Risk Protection (DRP) to automatically detect, validate, and take down fake domains, rogue mobile apps, and social media impersonators abusing your brand in real time.

Frequently Asked Questions (FAQ)

What is the purpose of this research?

To explain how modern investment scam campaigns operate, from victim manipulation tactics to technical infrastructure, and provide guidance for analysts and law enforcement.

What is the Victim Manipulation Flow model?

It’s a step-by-step map showing how scammers approach, screen, and exploit victims, based on real cases investigated in Vietnam.

What is the Multi-Actor Fraud Network model?

A framework that shows how fraud groups divide roles (masterminds, promoters, backend operators, payment handlers) to scale and evade detection.

Why are chatbots and chat simulators important in these scams?

They are used to screen victims, deliver payment details, and even simulate fake conversations to build trust. Analysts can extract valuable data from them for attribution.

What technical clues can analysts use to track these scams?

Shared APIs, reused SSL certificates, admin panels, subdomains, and framework fingerprints (like Layui) often reveal connections between sites.

Does the research find evidence of AI use in these scams?

No direct AI use was confirmed, but chat simulation tools suggest that AI-driven automation could be the next step, making scams more scalable and convincing.

How can law enforcement benefit from these findings?

By using the proposed models to understand criminal workflows, improve investigations, educate the public, and strengthen evidence for prosecution.

What should security teams or analysts do when investigating?

Capture chatbot payloads, trace backend services, preserve artifacts like source code or certificates, and map related domains to uncover shared infrastructure.