Introduction

In late August 2024, a prominent Indonesian financial institution reported a deepfake fraud incident within its mobile application and sought a comprehensive investigation and expert guidance from Group-IB.

The institution had previously implemented robust, multi-layered security measures to protect its mobile application from various cyberattacks, including rooting, jailbreaking, and exploitation techniques. As the first line of defense, it utilized mobile application security features such as anti-emulation, anti-virtual environments, anti-hooking mechanisms, and Real-Time Application Self-Protection (RASP). To further enhance security during customer onboarding, the institution adopted digital identity verification as a second layer, enabling digital Know Your Customer (KYC) procedures with biometric tools like facial recognition and liveness detection.

However, fraudsters successfully circumvented these defenses by submitting AI-altered deepfake photos, effectively breaching the institution’s multi-layer security approach. The attackers obtained the victim’s ID through various illicit channels, such as malware, social media, and the dark web, manipulated the image on the ID—altering features like clothing and hairstyle—and used the falsified photo to bypass the institution’s biometric verification systems.

The deepfake incident raised significant concerns for the Group-IB Fraud Protection team. To address these issues and enhance the detection and prevention of deepfake fraud within the existing solution, the team launched a thorough investigation. This led to the identification of over 1,100 deepfake fraud attempts targeting one of our Indonesian clients, in which AI-generated deepfake photos were used to bypass the client’s digital KYC process for loan applications.

Our research highlights several key aspects of deepfake fraud. It explores the main deepfake techniques used by fraudsters to bypass Know Your Customer (KYC) and biometric verification systems. It also outlines observed behavior patterns of deepfake fraud, based on insights from the Group-IB Fraud Protection Team. The financial and societal impacts of deepfake fraud are evaluated, emphasizing its growing urgency. Recommendations are provided for financial institutions to enhance their security systems against deepfake-based fraud. Additionally, the document calls for greater awareness within the financial sector about the emerging threat of deepfake technology and the importance of proactive defense measures.

Key discoveries in the blog

- Financial and Societal Impact of Deepfake Fraud: The widespread use of deepfake technologies for fraud poses significant financial risks, with potential losses in Indonesia alone estimated at US$138.5 million over three months. The social implications include threats to personal security, financial institution integrity, and national security.

- Deepfake Technology Bypasses Biometric Security: Fraudsters used AI-generated deepfake images to bypass biometric verification systems, including facial recognition and liveness detection, to impersonate victims and fraudulently access financial services.

- Advanced Fraud Methods Involving App Cloning: The use of app cloning allowed fraudsters to simulate multiple devices and bypass security mechanisms, highlighting vulnerabilities in traditional fraud detection systems.

- Virtual Camera Applications in Fraud: Fraudsters exploited virtual camera software to manipulate biometric data, using pre-recorded videos to mimic real-time facial recognition during KYC processes, deceiving institutions into approving fraudulent transactions.

- AI-Powered Face-Swapping Technologies: AI-driven face-swapping tools enabled fraudsters to replace a victim’s facial features with those of another person, further complicating the detection of fraudulent attempts in biometric verification.

Who may find this blog interesting:

- Fraud & Financial Crimes teams

- Risk & Compliance Specialists

- Cybersecurity teams

- Law Enforcement Investigators

- Police Forces

Case Investigation by Group-IB Fraud Protection

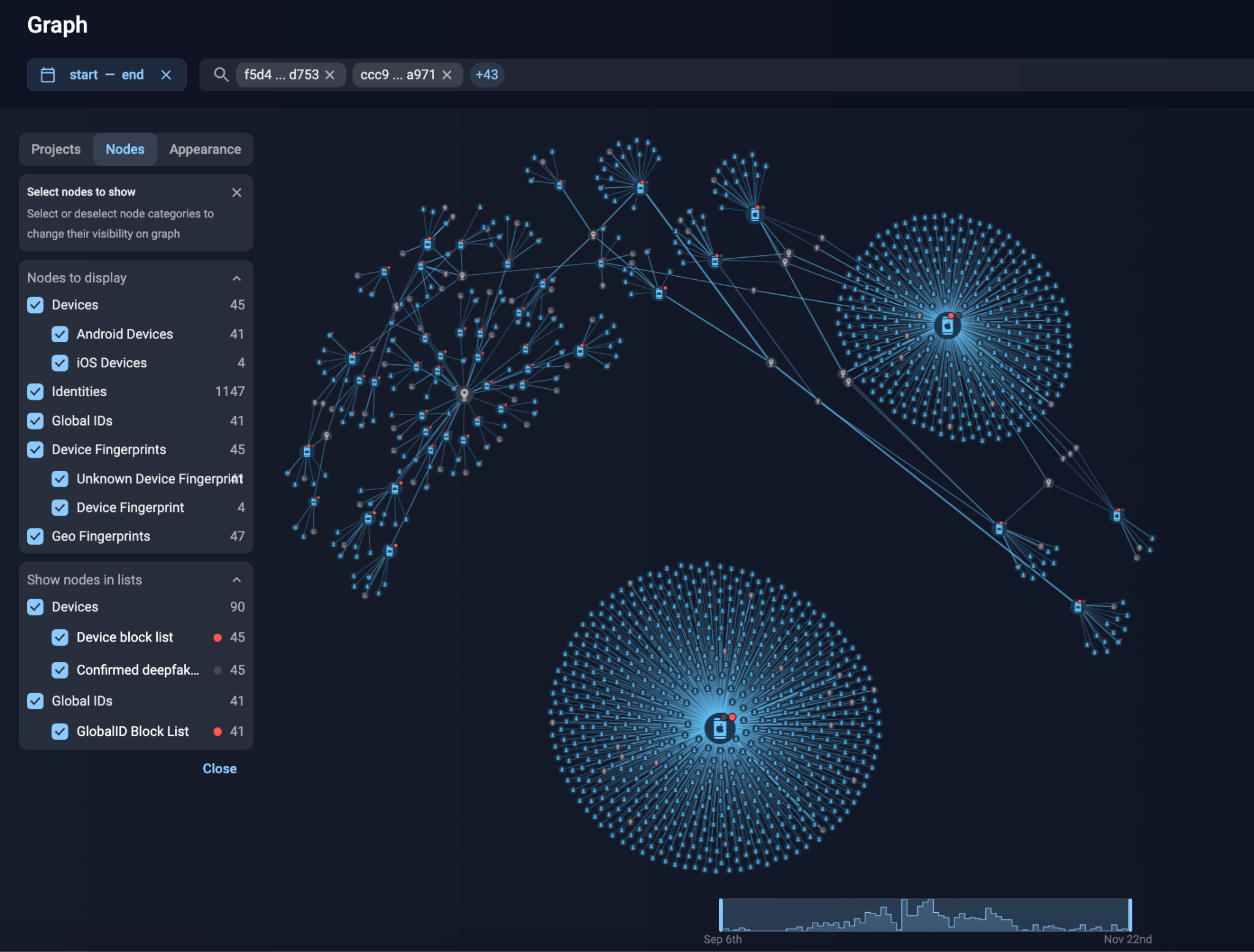

Group-IB’s Fraud Protection team assisted the Indonesian financial institution in identifying over 1,100 deepfake fraud attempts, in which AI-generated deepfake photos were used to bypass their digital KYC process for loan applications. This included the detection of more than 1,100 fraudulent accounts and the identification of 45 specific devices, of which 41 were Android-based, and 4 iOS-based.

Figure 1. Group-IB’s Graph Network Analysis solution identifying the compromised devices.

Usage of App Cloning

Our investigation revealed that many of the Android devices involved in the deepfake fraud were very likely cloned app instances rather than distinct physical devices. App cloning tools let users duplicate applications installed on their devices so that they can log into different accounts simultaneously. Each cloned application operates within a separate data container on the physical device, ensuring isolation and independent functionality from the original app and other applications.

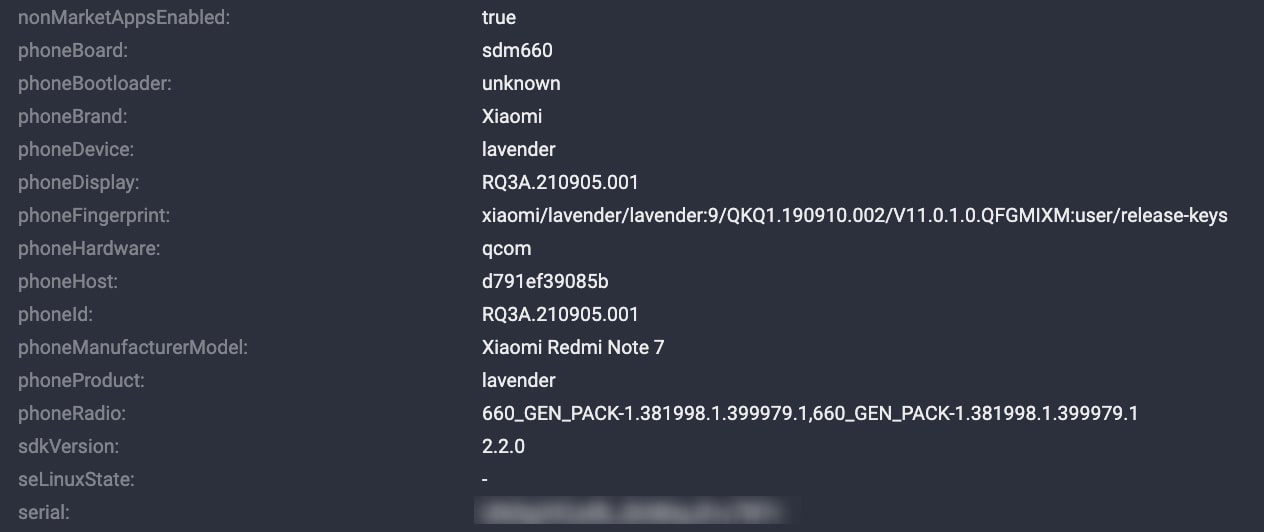

The inference of app cloning usage stems from clone-related events detected by our solution. Furthermore, a detailed analysis of device attributes uncovered identical attributes among multiple Android deepfake devices (as shown in the screenshot below), strongly indicating the use of a single physical device hosting multiple cloned instances in a virtual environment.

Figure 2. A screenshot of device attributes that were identical to multiple other Android devices used in the deepfake fraud.
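The attribute comparison described above can be illustrated with a short sketch. It groups device records by a tuple of attributes and keeps only the groups that contain more than one device ID. The attribute names (`model`, `os_build`, `screen`, `boot_time`) and the sample records are hypothetical; the investigation does not list which attributes were compared, and this is not a description of Group-IB's implementation.

```python
from collections import defaultdict

# Hypothetical attribute names, chosen for illustration only.
FINGERPRINT_KEYS = ("model", "os_build", "screen", "boot_time")


def group_by_attributes(devices):
    """Return attribute tuples that are shared by more than one device ID."""
    groups = defaultdict(list)
    for device in devices:
        key = tuple(device.get(name) for name in FINGERPRINT_KEYS)
        groups[key].append(device["device_id"])
    # A single device ID per attribute set is the normal case; keep the rest.
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


# Made-up records: A1 and A2 report identical attributes, B1 does not.
devices = [
    {"device_id": "A1", "model": "X", "os_build": "b1",
     "screen": "1080x2400", "boot_time": 1000},
    {"device_id": "A2", "model": "X", "os_build": "b1",
     "screen": "1080x2400", "boot_time": 1000},
    {"device_id": "B1", "model": "Y", "os_build": "b7",
     "screen": "720x1600", "boot_time": 2500},
]

suspected_clones = group_by_attributes(devices)
```

In practice, common attribute combinations will collide across genuinely different devices, so a match like this is a signal to correlate with other evidence (such as clone-related events) rather than proof on its own.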

Virtual Camera Technology and Its Role in Identity Spoofing

Virtual camera technology refers to software-based solutions that generate camera feeds using sources like pre-recorded videos, screen captures, or live streams instead of real-time footage from a physical camera. This technology enables attackers to spoof their identities by tricking facial recognition systems into accepting pre-prepared media as live, legitimate inputs. For instance, Group-IB’s Fraud Protection team identified some confirmed deepfake devices utilizing a virtual camera application known as Video Virtual Camera.

Figure 3. A screenshot of the data of the Video Virtual Camera application used by fraudsters.

Usage of Virtual Camera Application

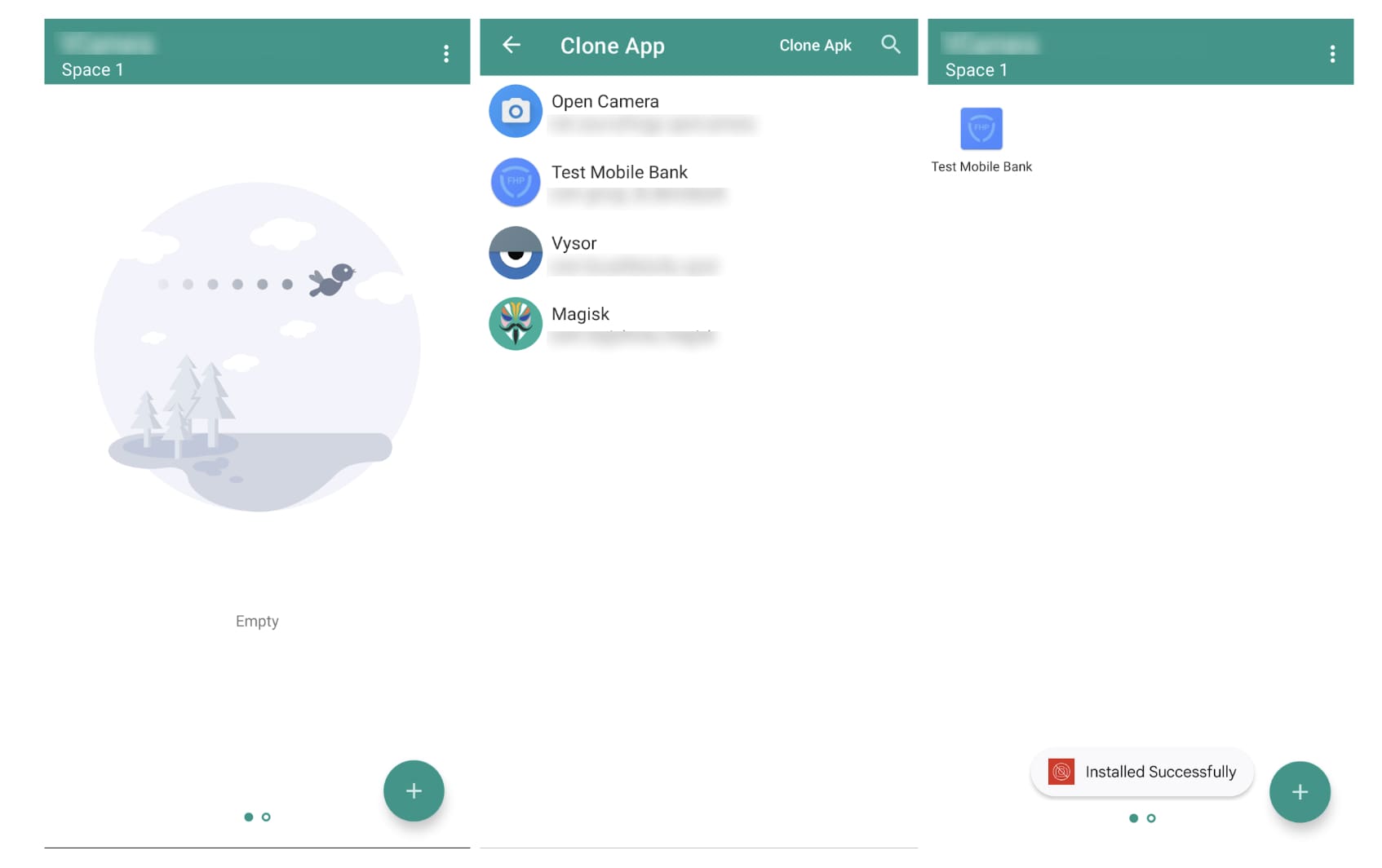

During the investigation into applications installed on detected deepfake devices, the Group-IB Fraud Protection team identified a significant presence of tools related to virtual cameras, app cloning, Android Trojans, automation tools, file and application hiding tools, GPS spoof tools, and AI-powered photo/video editors. This article highlights one such virtual camera application, to demonstrate how fraudsters leverage these tools to bypass the KYC processes of banking organizations.

This virtual camera application offers “Space,” an isolated environment that supports both virtual camera technology and app cloning functionality. Users can create separate “Spaces,” each mimicking an Android environment, and clone any installed application within these individual spaces. Applications inside a Space operate as if they are running on a real device, but in reality, they exist within a simulated, self-contained system.

Figure 4. Screenshots of a virtual camera application used by fraudsters to bypass KYC processes.

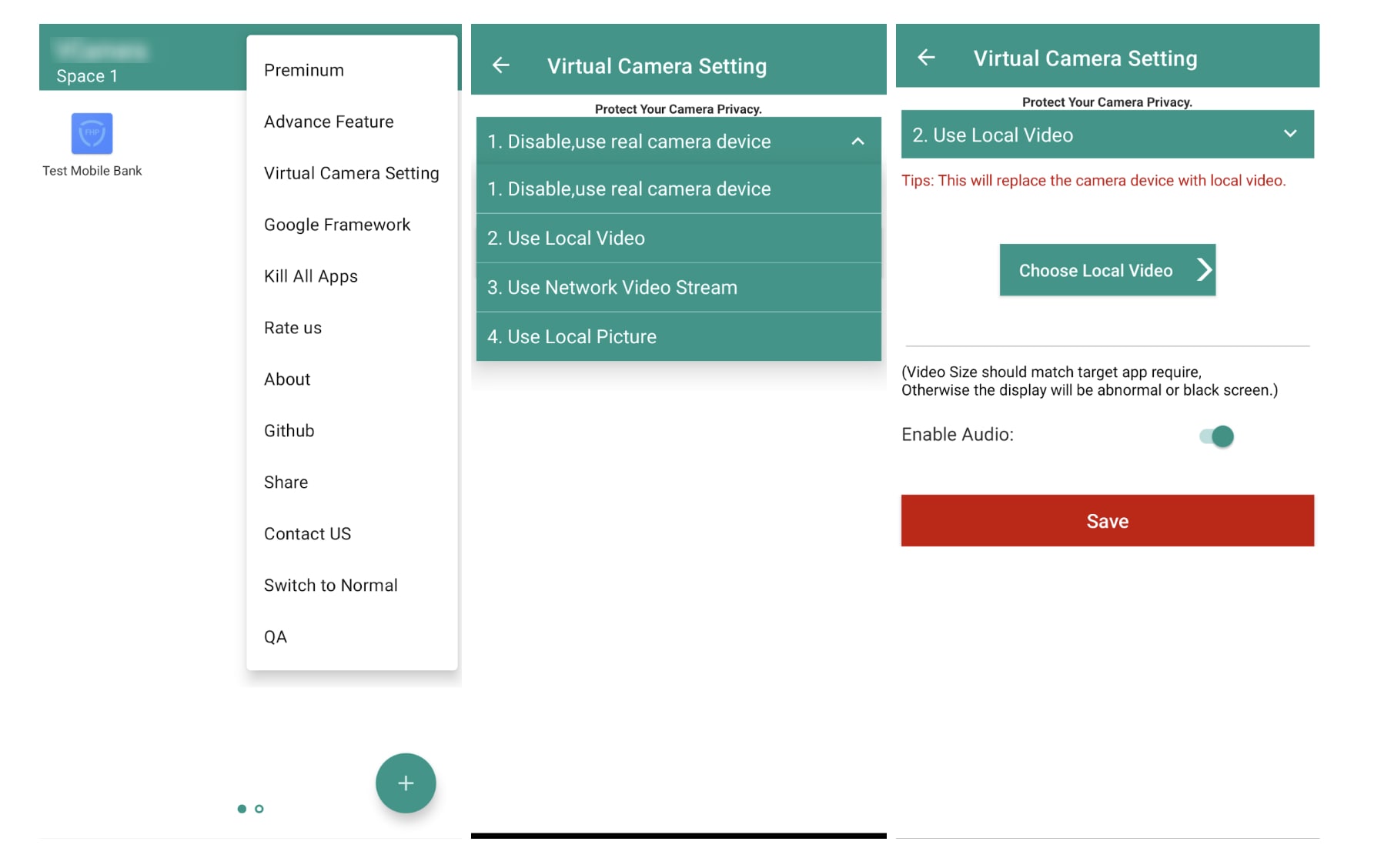

The next step involves configuring the virtual camera, where users can select an option to replace the camera feed with pre-prepared local video files. When an application requiring camera access runs within a Space, the Space can host a “virtual camera” by redirecting what the app perceives as the camera feed. However, many virtual cameras function correctly only under stringent conditions. In this case, the pre-prepared video must meet the size and format requirements of the target application; otherwise, the display may appear distorted or result in a black screen.

Figure 5. Screenshots of how the virtual camera application can be exploited by fraudsters to manipulate digital verification systems.

The example above illustrates how fraudsters exploit virtual camera applications to manipulate digital verification systems, circumventing security measures intended to protect financial institutions and their customers.
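On the defensive side, the tool categories found on the deepfake devices suggest a simple installed-application check. The sketch below is a minimal illustration that assumes the protected app can obtain a list of installed package names. The package names in the watchlist are hypothetical placeholders, not identifiers of real applications.

```python
# Hypothetical package names mapped to tool categories named in this article.
RISKY_PACKAGES = {
    "com.example.virtualcam": "virtual camera",
    "com.example.appcloner": "app cloning",
    "com.example.fakegps": "GPS spoofing",
}


def flag_installed_apps(installed_packages):
    """Return sorted (package, category) pairs found on the watchlist."""
    return sorted(
        (package, RISKY_PACKAGES[package])
        for package in set(installed_packages)
        if package in RISKY_PACKAGES
    )


flags = flag_installed_apps(
    ["com.example.bank", "com.example.virtualcam", "com.example.fakegps"]
)
```

A static watchlist is easy to evade by renaming or repackaging a tool, so a check like this would normally be one input among several rather than a decision on its own.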

Navigating the Challenges of Digital KYC: Balancing Convenience and Security

KYC (Know Your Customer) verification is a critical process that organizations use to authenticate the identity of new customers and assess potential risks associated with them. The process typically includes Identity Verification, Biometric Verification, and Proof of Address Verification.

Identity Verification requires clients to provide official documents, such as a passport, national ID, or driver’s license. Biometric Verification follows, using technologies like facial recognition or fingerprint scanning to ensure the individual matches the provided identification. Proof of Address Verification may also be required, where customers submit utility bills or bank statements to confirm their residential address.

Nowadays, most financial institutions offer digital KYC processes, allowing customers to authenticate their identity online without physical presence. While digital KYC brings convenience, it also presents hidden risks, as fraudsters have more opportunities to circumvent the verification processes and commit fraud.

Enhancing Facial Recognition Security: Challenges and Attack Methods in KYC Systems

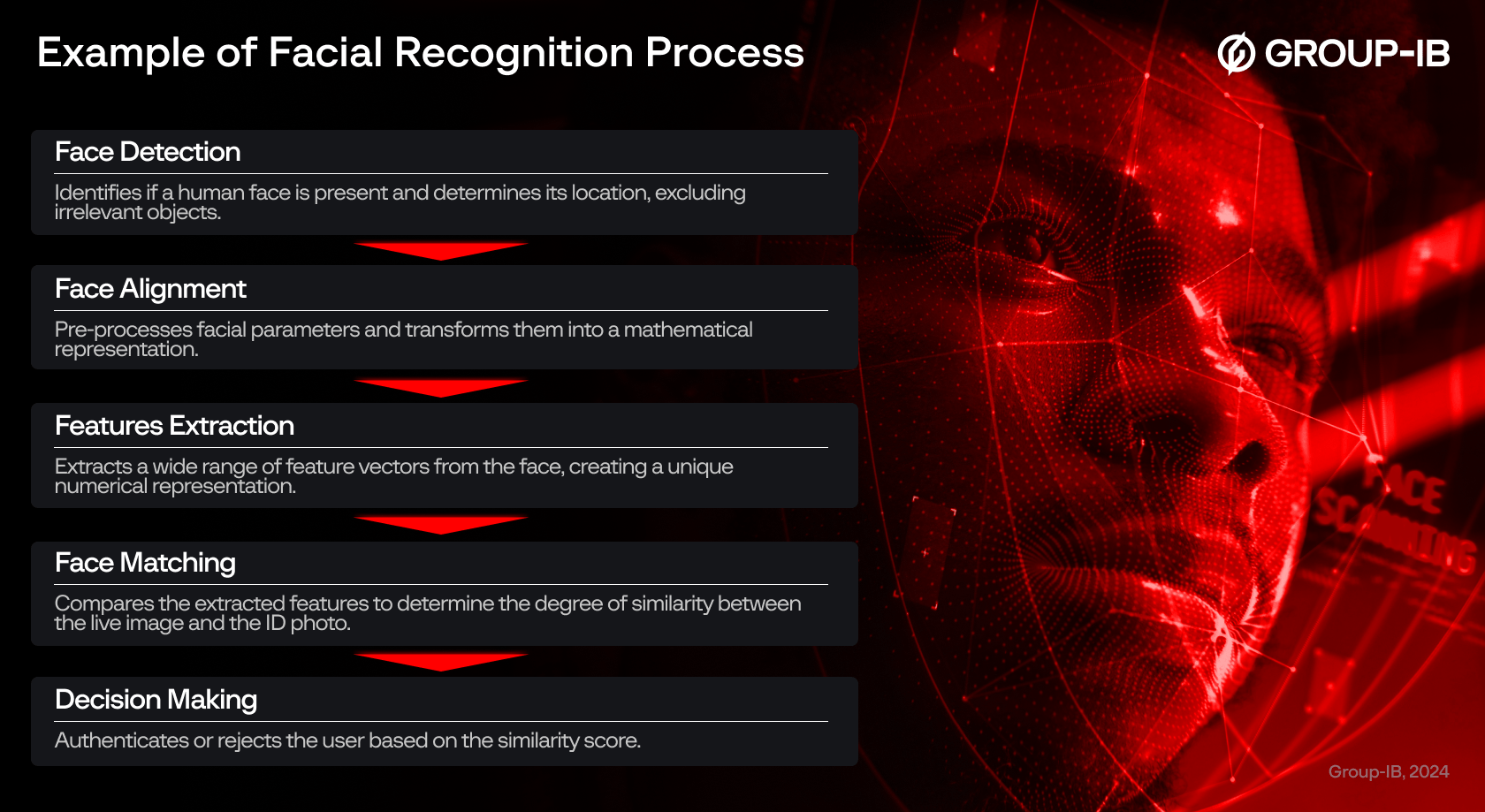

Facial recognition, including liveness detection, is a common biometric verification tool used in KYC systems. Users are prompted to take a selfie, and liveness detection ensures their physical presence. The system then compares the facial features from the selfie with the ID photo submitted during the Identity Verification process. If the features match, the user is authenticated; if not, the user is asked to retake the selfie or is denied access.

A typical facial recognition process involves several sub-processes. Most attacks detected on facial recognition systems target the face detection stage, where attackers attempt to deceive the system by replacing real-time facial data with pre-recorded or altered data through techniques such as virtual camera injection or AI-driven facial swapping.

Figure 6. An example of a facial recognition process.
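The selfie-to-ID comparison described above can be sketched as a similarity check between two face embeddings, gated by the liveness result. This is a simplified illustration: the embedding vectors, the cosine-similarity measure, and the `MATCH_THRESHOLD` value are assumptions made for the example, not details of any particular KYC product.

```python
import math

MATCH_THRESHOLD = 0.8  # assumed value, for illustration only


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def verify(selfie_embedding, id_embedding, liveness_passed):
    """Authenticate only if liveness passed and the two faces match."""
    if not liveness_passed:
        return "retake_or_deny"
    score = cosine_similarity(selfie_embedding, id_embedding)
    return "authenticated" if score >= MATCH_THRESHOLD else "retake_or_deny"
```

A check of this kind only evaluates the frames it is given. If a virtual camera or face-swapping tool replaces the input before it reaches this stage, the comparison can succeed on manipulated data, which is why the earlier stages of the pipeline are the usual target.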

Face-Swapping Technologies and Malware in Digital KYC Bypasses

Leveraging advanced AI models, face-swapping technologies enable attackers to replace one person’s face with another’s in real time using just a single photo. This creates the illusion that the swapped face is the legitimate identity of the individual in a video. These technologies can effectively deceive facial recognition systems due to their seamless, natural-looking swaps and the ability to convincingly mimic real-time expressions and movements.

A notable example is the GoldPickaxe malware family, identified by Group-IB’s Threat Intelligence unit. Distributed primarily through social engineering tactics, this trojan infects devices to harvest facial recognition data, identity documents, and intercepted SMS communications. Attackers then use AI-driven face-swapping services to create deepfakes based on stolen biometric data, combining them with stolen ID documents and intercepted SMS codes to compromise victims’ banking accounts. Similarly, the Android remote access malware Gigabud, which appears to have been developed by the same attackers or an associate, has been found capable of stealing facial recognition data, contributing to digital KYC bypass attempts as well.

AI-Driven Image and Video Generators in KYC Exploitation

AI-driven image and video generators can create highly realistic synthetic media by leveraging advanced artificial intelligence algorithms. These tools are increasingly used to attack the Identity Verification and Biometric Verification processes of KYC. The key techniques involve training AI models on extensive datasets of real human faces, enabling them to replicate intricate visual features such as facial structures, expressions, and subtle details like skin texture.

A report by 404 Media highlighted a notable example of AI-driven image exploitation in KYC. The report alleges that OnlyFake, an AI-powered image generator capable of producing highly realistic synthetic IDs almost instantly for as little as $15, successfully created IDs that bypassed the identity verification system of a major global cryptocurrency exchange.

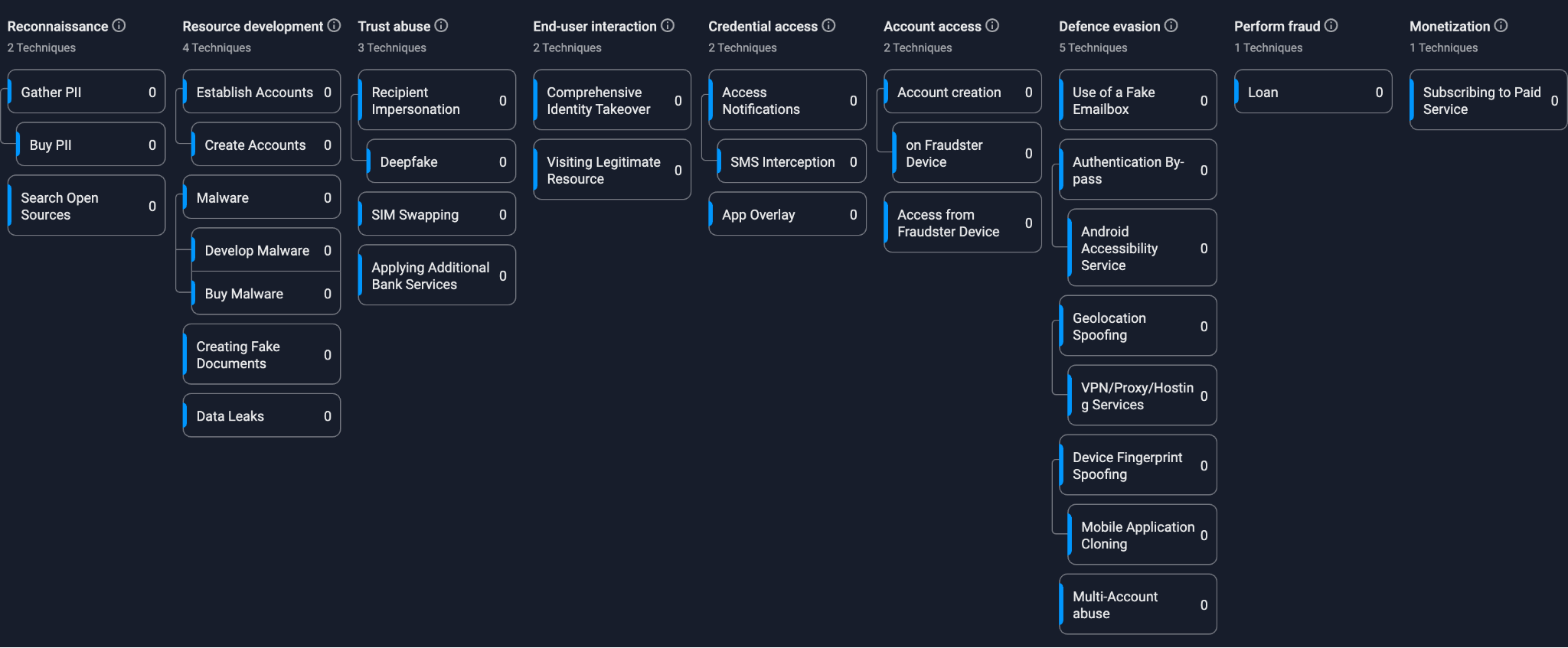

Fraud Matrix of Deepfake-Driven ID Takeover

| KYC Procedure | Normal user | Fraudster |
| --- | --- | --- |
| Identity Verification | Clients provide official documents like a passport, national ID, or driver’s license | Stolen ID documents or AI-driven image generator |
| Biometric Verification | Using facial recognition or fingerprint to ensure the individual matches the identification | Camera injection and face-swapping technologies |
| Proof of Address Verification | Customers are required to submit utility bills or bank statements to confirm their residential address | Document forgery |

Figure 7. Fraud matrix of a deepfake-driven ID takeover.

Estimating Financial and Societal Impacts of Deepfake Fraud

Based on a detected fraud rate of 0.05% (over 1,000 fraud cases among 2 million users) from the aforementioned Indonesian bank, we estimated the potential financial losses from deepfake fraud in loan applications on a national scale. Assuming approximately 60% of Indonesia’s population (277.5 million) is economically active and eligible for loan applications (roughly 166.2 million individuals aged 16 to 70), this would result in an estimated 83,100 fraud cases nationwide (0.05% x 166.2 million). With an average fraudulent loan size of $5,000, the estimated financial damage could reach US$138.5 million over three months in Indonesia.
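The steps of this estimate can be retraced with the figures quoted above. The sketch uses only those inputs; the variable names are illustrative. Note that multiplying the estimated case count by the average loan size gives US$415.5 million, which is three times the quoted US$138.5 million. The text does not state how the two figures relate, so the code only reports the ratio rather than assuming an explanation.

```python
# Inputs as quoted in the text.
detected_cases = 1_000          # "over 1,000 fraud cases"
bank_users = 2_000_000          # "2 million users"
eligible_population = 166_200_000
average_loan_usd = 5_000
quoted_damage_usd = 138_500_000

# Integer arithmetic avoids floating-point rounding in the case count.
estimated_cases = eligible_population * detected_cases // bank_users
gross_exposure_usd = estimated_cases * average_loan_usd
ratio_to_quoted = gross_exposure_usd / quoted_damage_usd

print(estimated_cases)      # 83100
print(gross_exposure_usd)   # 415500000
print(ratio_to_quoted)      # 3.0
```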

From a social perspective, deepfake technology presents significant risks across various sectors. Individuals are increasingly vulnerable to fraud as scammers use deepfake videos for social engineering attacks, manipulating people into sharing sensitive information, transferring funds, or downloading malware. In the business sector, deepfakes can be used to impersonate employees, executives, or partners, allowing fraudsters to gain privileged access to confidential systems, leading to data breaches, financial theft, and reputational harm.

For financial institutions, the ability to bypass KYC verification through deepfakes exposes them to heightened risks of fraud, including fake loan applications, money laundering, and other criminal activities. This not only undermines security but also erodes trust between customers and financial entities, leading to significant reputational damage and potential regulatory consequences.

At the government level, deepfakes pose serious national security threats by enabling disinformation campaigns, espionage, and the manipulation of public opinion. The rise in fraudulent activities driven by deepfakes also increases the burden on law enforcement and regulatory bodies, requiring greater resources and more sophisticated tools to combat these evolving threats.

Challenges for AI-Driven Deepfake Detection

The antifraud industry faces significant challenges in detecting deepfake fraud due to the sophisticated and rapidly evolving nature of deepfake technologies. These tools are capable of producing highly realistic media that can mimic facial expressions, voice patterns, and even subtle details like eye movements. Such advancements make it increasingly difficult for traditional detection systems to distinguish between authentic and synthetic content, posing a serious threat to security systems reliant on visual and biometric verification methods. Here, we highlight some of the key challenges that the financial industry faces:

Lack of Direct Detection Tools for Manipulative Technologies

The industry currently lacks advanced tools for directly detecting manipulative technologies such as virtual camera applications, facial-swapping techniques, and other AI-driven manipulations. This is primarily due to two key factors: (1) the rapid evolution of deepfake technology, which continuously improves in realism and complexity; and (2) the availability of open-source AI models and tools, which lower the barrier for creating sophisticated deepfakes. As a result, anti-fraud systems are struggling to keep pace with these advancements, making it increasingly difficult to detect and mitigate deepfake-related fraud.

Difficulty in Real-Time Detection

Detecting deepfakes in real-time scenarios presents additional challenges. For instance, most anti-fraud systems assign a unique device ID to each cloned instance, distinguishing it from the primary app. While this approach helps identify instance-level activities, it complicates the timely detection of fraudulent behavior, as each instance appears as a separate device. This fragmentation makes it more difficult to track and correlate malicious actions effectively, hindering the system’s ability to detect and respond to fraud in a timely manner.

Limited Access to Training Data for Detection

Effective AI-driven detection systems require large datasets of both synthetic and genuine media for training. However, obtaining diverse, high-quality deepfake datasets remains a challenge due to privacy concerns and the complexities of generating realistic fake data for research purposes. The lack of access to these datasets limits the ability of detection models to adapt to and accurately identify newer types of deepfakes, reducing the overall effectiveness of anti-fraud systems in recognizing emerging threats.

The Need for Robust Solutions

These gaps in detection capabilities leave financial institutions and other organizations vulnerable to increasingly sophisticated fraud tactics. Addressing these challenges requires the development of robust, multi-dimensional, AI-enhanced anti-fraud measures capable of adapting to the rapid evolution of deepfake technologies and providing real-time, comprehensive protection.

Conclusion

The emergence of deepfake technologies has introduced unprecedented challenges for financial institutions, disrupting traditional security measures and exposing vulnerabilities in identity verification processes. Deepfake fraud, enabled by tools such as AI-driven image and video generators, virtual camera applications, and facial-swapping technologies, has become a sophisticated method for deceiving KYC protocols, leading to significant financial, reputational, and operational risks.

Our investigation highlights the multifaceted risks posed by deepfake fraud, from the exploitation of app cloning to the use of advanced AI tools that evade detection. These tactics enable fraudsters to impersonate legitimate users, manipulate biometric systems, and exploit gaps in existing anti-fraud measures, as evidenced by real-world cases such as the detection of over 1,100 deepfake accounts by Group-IB Fraud Protection.

The analysis underscores the financial implications of deepfake fraud, with estimated damages in Indonesia alone reaching US$138.5 million over three months. This alarming figure reflects not only the scale of the threat but also the urgent need for proactive measures to combat it.

To address these challenges, financial institutions must move beyond single-method verification, enhancing account verification processes and adopting a multi-layered approach that integrates advanced anti-fraud solutions. By implementing these strategies, financial institutions can strengthen their defenses against deepfake fraud, safeguarding both their customers and their reputation.

However, this is a collective effort that requires collaboration across the financial sector, regulatory bodies, and technology providers to build a resilient ecosystem capable of adapting to the rapid advancements in AI-driven fraud. The stakes are high, but with vigilance, innovation, and a commitment to proactive defense, the industry can mitigate the risks posed by this formidable threat.

Recommendations

Relying on a single verification method is no longer sufficient, so financial institutions must adopt proactive measures to mitigate the risks posed by evolving deepfake techniques. Effective defenses require a multi-layered approach, such as Group-IB’s Fraud Protection, to validate account ownership and detect fraudulent behavior.

For Group-IB customers: Please visit our Fraud Protection Intelligence portal for a more comprehensive report.

Below are our key recommendations:

Enhance Account Verification Processes

- Awareness of Deepfake Account Registration: Institutions must acknowledge the risk of deepfake fraud in digital onboarding processes and implement multiple verification methods to enhance security.

- Require Physical Presence for High-Risk Activities: For high-value transactions or loan applications from new users, consider requiring in-person verification at a bank branch to ensure a more robust identity validation.

Deploy Multi-Dimensional Anti-Fraud Solutions

Integrating advanced anti-fraud systems with multi-layered detection capabilities is essential. These systems should include the following features:

- Device Fingerprinting: Assign a unique identifier to devices based on both hardware and software characteristics. This approach can detect fraudulent devices, even when cloned instances are used, by identifying them as originating from the same source.

- Device Intelligence: Assess whether the device is new to the system, and analyze technical specifications and device models to link suspicious devices to known fraudulent activities.

- Application Monitoring: Examine the source of application downloads and assess the risk level of installed applications; implement advanced detection mechanisms to identify malware or suspicious apps, especially those targeting biometric data.

- AI-Driven Anomaly Detection: Use AI models to analyze user behavior for anomalies, such as deviations in typing speed or navigation patterns compared to historical data.

- Emulator and Clone Detection: Identify rooted devices, emulators, or cloned environments used to bypass security measures.

- IP Intelligence: Assign risk scores to IP addresses and flag those associated with fraudulent activities; monitor for shared IPs among suspicious accounts.

- Geolocation and Activity Tracking: Track users’ geolocation, activity patterns, and movement intensity to detect anomalies such as improbable travel speeds or locations; determine the device’s real geolocation even when VPNs or GPS spoofing tools are in use.

- Cross-Device Tracking: Monitor user behavior across multiple channels (e.g., web and mobile) to identify inconsistencies or suspicious activity.

- Device Environment Monitoring: Identify and monitor suspicious activities within the device environment, including bot activity, the use of hosting services, remote access tools, screen-sharing applications, accessibility services, and overlay activities. These techniques are often exploited by fraudsters to orchestrate and manage fraudulent operations.
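As one concrete example from the list above, the improbable-travel check under Geolocation and Activity Tracking can be sketched with the haversine great-circle distance between two consecutive location readings. The `MAX_SPEED_KMH` ceiling and the `(lat, lon, unix_seconds)` reading format are assumptions chosen for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0
MAX_SPEED_KMH = 900.0  # assumed ceiling, roughly airliner cruising speed


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_improbable_travel(prev, curr):
    """prev and curr are (lat, lon, unix_seconds) readings for one account."""
    distance = haversine_km(prev[0], prev[1], curr[0], curr[1])
    hours = (curr[2] - prev[2]) / 3600
    if hours <= 0:
        # No elapsed time: any movement at all is implausible.
        return distance > 0
    return distance / hours > MAX_SPEED_KMH


# Approximate coordinates for Jakarta and Singapore.
jakarta = (-6.2088, 106.8456)
singapore = (1.3521, 103.8198)
```

For example, a reading in Jakarta followed 30 minutes later by one in Singapore would be flagged, while the same pair 10 hours apart would not. A check like this depends on trustworthy location input, so it is most useful alongside the GPS-spoofing and VPN detection mentioned in the same bullet.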

Leverage Collaborative Databases (Global ID Blocklist)

Maintain and contribute to a global database of flagged fraudulent accounts, devices, geohashes, and IPs. Sharing this information with other financial institutions helps prevent cross-bank fraud and strengthens the industry’s collective defenses.
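A shared blocklist of this kind could be sketched as a set of hashed identifiers, so that participants exchange digests rather than raw values. The `Blocklist` class, the `kind:value` normalization, and the use of SHA-256 are illustrative assumptions, not a description of how any existing shared database works.

```python
import hashlib


def fingerprint(kind, value):
    """Hash a normalized identifier (illustrative scheme, not a standard)."""
    normalized = f"{kind}:{value.strip().lower()}"
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


class Blocklist:
    """In-memory stand-in for a shared store of flagged identifiers."""

    def __init__(self):
        self._entries = set()

    def report(self, kind, value):
        self._entries.add(fingerprint(kind, value))

    def is_flagged(self, kind, value):
        return fingerprint(kind, value) in self._entries


blocklist = Blocklist()
blocklist.report("ip", "203.0.113.7")      # documentation-range IP address
blocklist.report("device", "ABC-123")      # made-up device identifier
```

Plain hashes of small identifier spaces such as IPv4 addresses can be reversed by brute force, so a real deployment would need a stronger privacy design (for example, keyed hashing agreed between participants) and a process for removing entries that turn out to be false positives.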

Invest in Advanced Fraud Detection Tools

Adopt cutting-edge solutions—such as Group-IB’s Fraud Protection—that leverage artificial intelligence, behavioral analytics, and advanced device monitoring to detect fraud attempts before they succeed. These tools should integrate seamlessly with existing systems and provide real-time alerts to fraud teams.

By implementing these recommendations, financial institutions can strengthen their defenses against deepfake fraud, safeguard their customers, and maintain trust in their services.